Instagram Photos Are Being Labeled ‘Made With AI’ When They’re Not

Instagram’s newly launched “Made with AI” labels are causing confusion, as photographers and content creators find their posts slapped with the tag despite minimal editing or, apparently, no use of AI at all.

Photographer Peter Yan took to Threads to ask Instagram head Adam Mosseri why his image of Mount Fuji had been given a “Made with AI” tag when it was a real photo he took.

“I did not use generative AI, only Photoshop to clean up some spots. This ‘Made with AI’ was auto-labeled by Instagram when I posted it, I did not select this option,” he wrote.

However, Yan later acknowledged that he had used a generative AI tool to remove a trash bin from the photo, which appears to be what triggered the label. Removing unwanted objects and spots is par for the course for photographers, so having the entire image labeled as AI seems unfair.

Mosseri did reply, asking Yan: “Did this label get added automatically?” As of publication, Yan’s photo of Mount Fuji no longer carries the “Made with AI” label.

In another case, professional inline skater Julien Cadot had an Instagram video marked as AI. When PetaPixel asked why, the French skater said he didn’t know.

The AI labels appear only in the mobile app, not on desktop.

Further Testing

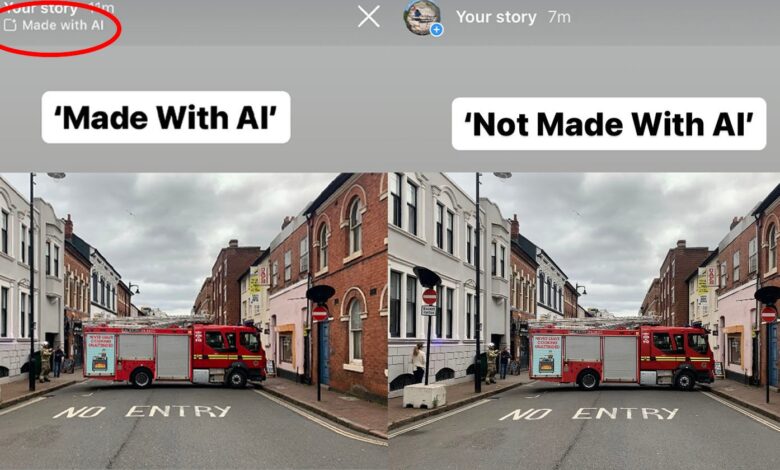

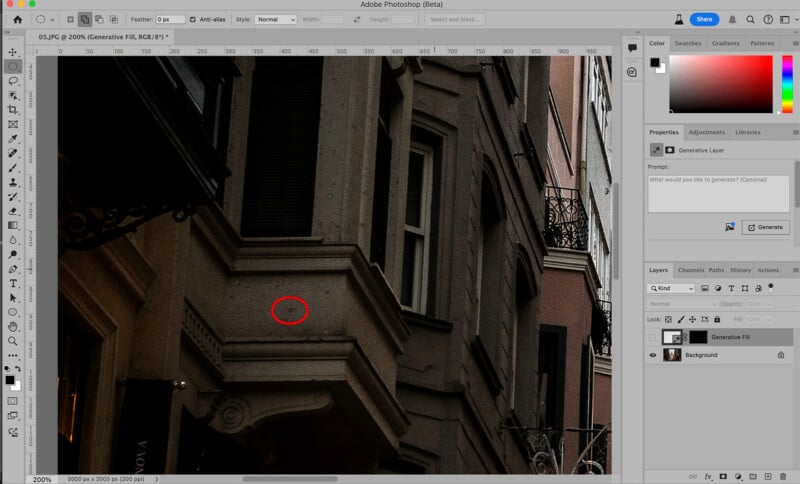

Yan’s acknowledgment that he had used a generative AI tool prompted PetaPixel to run its own test: it opened a photo in Photoshop, used the Generative Fill tool to remove a tiny speck, and then uploaded the result to Instagram.

Lo and behold, despite the photograph having just a minuscule amount of AI editing, Instagram slapped the photograph with a “Made with AI” tag.

When PetaPixel instead used the Spot Healing Brush Tool, Content-Aware Fill, or the Clone Stamp Tool, Instagram did not apply the AI label, even though these tools had exactly the same effect on the overall image as Generative Fill.

There is, however, a workaround for photographers who want to use Generative Fill for minor adjustments without having “Made with AI” attached to their image. When PetaPixel reopened the Generative Fill-edited file in Photoshop, copied and pasted it onto a black document, and saved it again, Instagram did not flag the image as AI.

How Does Instagram Know That AI Was Used?

Meta has not revealed much detail on how it detects AI content, saying only that it is “based on our detection of industry-shared signals of AI images or people self-disclosing that they’re uploading AI-generated content.”

Meta is not a member of C2PA Content Credentials, a digital watermark system that allows people to check the provenance of imagery, but it might be picking up on some of the standard’s signals when marking content as AI. Adobe, which makes Photoshop, is very much part of Content Credentials: it co-founded the set of standards.

Images created with Meta’s own generative AI tools are automatically tagged “Imagined with AI” when uploaded.

PetaPixel reached out to Meta for comment but had not received a response as of publication. This article will be updated if and when Meta responds.