Is artificial intelligence combat-ready?

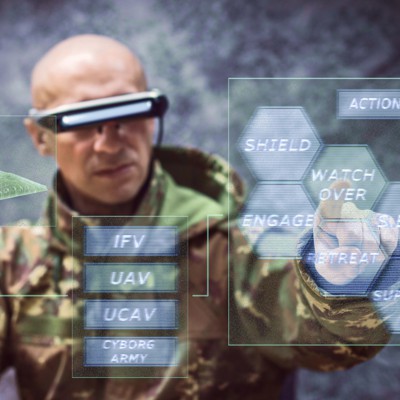

Human soldiers will increasingly share the battlespace with a range of robotic, autonomous, and artificial intelligence (AI)-enabled agents. Machine intelligence could be a decisive factor in future conflicts that the U.S. may face.

The pace of change will be faster than anything seen in decades, driven by advances in commercial AI technology and by pressure from a near-peer competitor with formidable technological capabilities.

But are AI and machine learning combat-ready? Or, more precisely, is our military prepared to incorporate machine intelligence into combat effectively?

Creating an AI-Ready Force

The stakes of effective collaboration between AI and combatants are profound.

Human-machine teaming has the potential to reduce casualties dramatically by substituting robots and autonomous drones for human beings in the highest-risk front-line deployments.

It can also greatly enhance situational awareness by rapidly synthesizing data streams across multiple domains into a unified view of the battlespace. And it can overwhelm enemy defenses with swarms of autonomous drones.

In our work with several Defense Department research labs at the cutting edge of incorporating AI and machine learning into combat environments, we have seen that this technology could be a force multiplier on par with air power.

However, several technological and institutional obstacles must be overcome before AI agents can be widely deployed into combat environments.

Safety and Reliability

The most frequent concern about AI agents and uncrewed systems is whether they can be trusted to take actions with potentially lethal consequences. AI agents have an undeniable speed advantage in processing massive amounts of data to recognize targets of interest. However, there is an inherent tension between conducting “war at machine speed” and retaining accountability for the use of lethal force.

A single incident of an AI weapons system subjecting its human counterparts to “friendly fire” could be enough to undermine warfighters’ confidence in this technology. Effective human-machine teaming is only possible when machines have earned the trust of their human allies.

Adapting Military Doctrine to AI Combatants

Uncrewed systems are being rapidly developed that will augment existing forces across multiple domains. Many of these systems incorporate AI at the edge to control navigation, surveillance, targeting, and weapons systems.

However, existing military doctrine and tactics have been optimized for a primarily human force. There is a temptation to view AI-enabled weapons as a new tool to be incorporated into existing combat approaches. But doctrine will be transformed by innovations such as the swarming of hundreds or thousands of disposable, intelligent drones capable of overwhelming strategic platforms.

Force structures may need to be reconfigured on the fly to deliver drones where there is the greatest potential impact. Human-centric command and control concepts will need to be modified to accommodate machines and build warfighter trust.

As autonomous agents proliferate and become more powerful, the battlespace will become more expansive and more transparent, and operations will move dramatically faster. The decision on whether and how to incorporate AI into the operational kill chain has profound ethical consequences.

An even more significant challenge will be balancing the pace of action on the AI-enabled battlefield against the limits of human cognition. What is the tradeoff between ceding a first-strike advantage measured in milliseconds and losing human oversight? The outcome of future conflicts may hinge on such questions.

Insatiable Hunger for Data

AI systems are notoriously data-hungry. There is not, and hopefully never will be, enough operational data from actual military conflicts to train AI models adequately for battlefield deployment. For this reason, simulations are essential for developing and testing AI agents, and modern machine learning techniques can require thousands or even millions of simulated iterations.

The DoD has existing high-fidelity simulations, such as Joint Semi-Automated Forces (JSAF), but they run essentially in real time. Unlocking the full potential of AI-enabled warfare requires simulations with enough fidelity to model potential outcomes accurately, yet fast enough to meet the speed requirements of digital agents, which means running far faster than real time.
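To make the speed argument concrete, here is a back-of-the-envelope sketch in Python. The episode count, episode length, speedup factor, and number of parallel instances are all hypothetical values chosen for illustration, not figures from JSAF or any other DoD simulation. The helper name `wall_clock_hours` is likewise illustrative.

```python
# Back-of-the-envelope estimate of the wall-clock time needed to train an AI agent
# in simulation. All numbers are hypothetical placeholders, not measured values.

HOURS_PER_YEAR = 24 * 365


def wall_clock_hours(episodes: int, hours_per_episode: float,
                     speedup: float = 1.0, parallel_instances: int = 1) -> float:
    """Return the wall-clock hours needed to run `episodes` simulated engagements.

    speedup: how many times faster than real time one simulation instance runs
             (1.0 means real time).
    parallel_instances: number of simulation instances running concurrently.
    """
    if speedup <= 0 or parallel_instances < 1:
        raise ValueError("speedup must be positive and parallel_instances >= 1")
    simulated_hours = episodes * hours_per_episode
    return simulated_hours / (speedup * parallel_instances)


if __name__ == "__main__":
    episodes = 1_000_000       # assumed: upper end of "millions of iterations"
    hours_per_episode = 1.0    # assumed: a one-hour engagement scenario

    real_time = wall_clock_hours(episodes, hours_per_episode)
    accelerated = wall_clock_hours(episodes, hours_per_episode,
                                   speedup=100, parallel_instances=100)

    print(f"Real time, one instance:     {real_time / HOURS_PER_YEAR:.1f} years")
    print(f"100x speedup, 100 instances: {accelerated / 24:.1f} days")
```

Under these assumptions, a single simulation running in real time would need more than a century of wall-clock time (about 114 years). A simulation running 100 times faster than real time across 100 parallel instances reduces that to roughly four days. The exact numbers matter less than the scaling: training throughput grows with both speedup and parallelism, so simulation fidelity has to be weighed against speed.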

Integration and Training

AI-enabled mission planning has the potential to vastly expand the situational awareness of combatants and generate novel multi-domain operation alternatives to overwhelm the enemy. Just as importantly, AI can anticipate and evaluate thousands of courses of action that the enemy might employ and suggest countermeasures in real time.

One reason America’s military is so effective is a relentless focus on training. But warfighters are unlikely to embrace tactical directives emanating from an unfamiliar black box when their lives hang in the balance.

As autonomous platforms move from research labs to the field, intensive warfighter training will be essential to create a cohesive, unified human-machine team. To be effective, AI course-of-action agents must be designed to align with existing mission planning practices.

By integrating such AI agents with the training for mission planning, we can build confidence among users while refining the algorithms using the principles of warfighter-centric design.

Making Human-Machine Teaming a Reality

While underlying AI technology has grown exponentially more powerful in the past few years, addressing the challenges posed by human-machine teaming will determine how rapidly these technologies can translate into practical military advantage.

From the squad level all the way up to joint command, it is essential that we test the limits of this technology and establish decision-makers’ confidence in its capabilities.

There are several vital initiatives the DoD should consider to accelerate this process.

Embrace the Chaos of War

Building trust in AI agents is the most important step toward effective human-machine teaming. Warfighters will rightly have little confidence in systems that have only been tested under controlled laboratory conditions. The best experiments and training exercises replicate the chaos of war, including unpredictable events, jamming of communications and positioning systems, and mid-mission changes to the course of action.

Human warfighters should be encouraged to push autonomous systems and AI agents to the breaking point to see how they perform under adverse conditions. This will result in iterative design improvements and build the confidence that these agents can contribute to mission success.

A tremendous strength of the U.S. military is the flexible command structure that empowers warfighters down to the squad level to adapt rapidly to changing conditions on the ground. AI systems could give these units a far more comprehensive view of the battlespace and generate tactical alternatives. To be effective in wartime, however, AI agents must be resilient enough to function when communications are degraded, and they must understand the overall intent of the mission.

Apply AI to the Defense Acquisition Process

The rapid evolution of underlying AI and autonomous technologies means that traditional procurement processes developed for large Cold War platforms are doomed to fail. For example, swarming tactics are only effective when they employ hundreds or thousands of individual systems capable of intelligent, coordinated action in a dynamic battlespace.

Acquiring such devices at scale will require leveraging a broad supplier base, moving rapidly down the cost curve, and enabling frequent updates based on open standards. Too often, we have seen weapons vendors use incompatible, proprietary communications standards that leave systems unable to share data, much less engage in coordinated, intelligent maneuvers. One solution is to apply AI to revolutionize the acquisition process.

By creating a virtual environment to test system designs, DoD customers can verify operational concepts and interoperability before a single device is acquired. This will help reduce waste, promote shared knowledge across the services, and create a more level playing field for the supplier base.

Build Bridges from Labs to Deployment

While a tremendous amount of important work has been done by organizations such as the Naval Research Laboratory, the Army Research Laboratory, the Air Force Research Laboratory, and DARPA, the success of AI-enabled warfare will ultimately be determined by moving this technology out of the laboratories and into the operational commands. Human-machine teaming will be critical to the success of these efforts.

Just as important, the teaching of military doctrine at the service academies needs to be continuously updated as the technology frontier advances. Incorporating intelligent agents into practical military missions requires both profound changes in doctrine and reallocation of resources.

Military commanders are unlikely to be dazzled by “bright and shiny objects” unless they see tangible benefits to deploying them. By starting with some easy wins, such as enhancing intelligence, surveillance, and reconnaissance (ISR) capabilities and automating logistics and maintenance, we can build early bridges that instill confidence in the value of AI agents and autonomous systems.

Educating commands about the potential of human-machine teaming to enhance mission performance, and then developing roadmaps to the highest-potential applications, will be essential. Commanders need to be comfortable with the parameters of “human-in-the-loop” systems, in which a human must authorize each action, and “human-on-the-loop” systems, in which a human supervises and can intervene, as they decide how much autonomy to grant to AI-at-the-edge weapons systems. Retaining auditability as decision cycles accelerate will be critical to ensuring effective oversight of system development and evolving doctrine.

Summary

Rapid developments in AI and autonomous weapons systems have simultaneously accelerated and destabilized the ongoing quest for military superiority and effective deterrence. The United States has responded with a range of policies restricting the transfer of underlying technologies. However, the outcome of this competition will ultimately depend on how convincingly AI-enabled warfare can be moved from research labs to potential theaters of conflict.

Effective human-machine teaming will be critical to make the transition to a “joint force” that leverages the best capabilities of human warfighters and AI to ensure domination of the battlespace and deter adventurism by foreign actors.

Mike Colony leads Serco’s Machine Learning Group, which has helped to support several Department of Defense clients in the area of AI and machine learning, including the Office of Naval Research, the Air Force Research Laboratory, the U.S. Marine Corps, the Electronic Warfare and Countermeasures Office, and others.