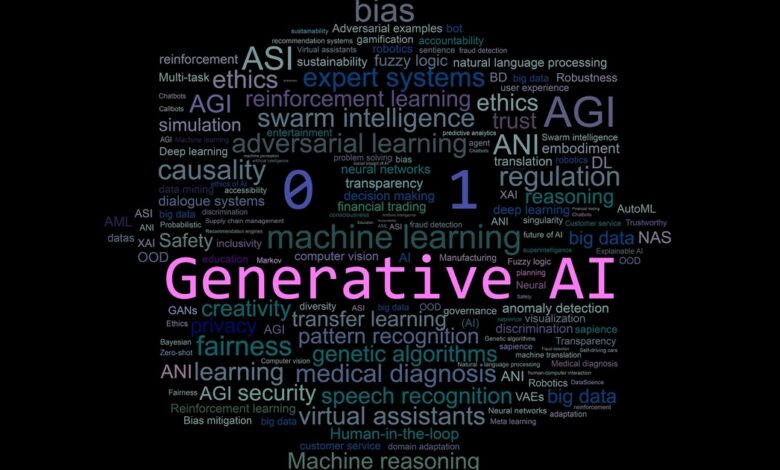

Looking to Leverage Generative AI? Prep for Success With These 4 Tips

Generative artificial intelligence (GenAI) has taken the world by storm, with more than 55% of organizations piloting or actively using the technology. Yet despite the enthusiasm for GenAI's transformative potential, security teams remain responsible for ensuring that every new tool or platform is implemented securely and protected in line with industry and company best practices.

A recent poll of business and cybersecurity leaders, conducted by Information Security Media Group (ISMG), found five common concerns around GenAI implementation:

- Data security/leakage of sensitive data

So what steps can security teams take to make sure that their organizations are prepared to safely implement GenAI?

As with any new technology, foundational cybersecurity best practices are one of the best places to start when introducing a new tool or platform into your environment. Referencing widely used security standards can help CISOs build broader trustworthiness considerations into the design, development, deployment, and use of AI systems in their own environments. Here are four steps to prepare your organization for GenAI.

Implement a Zero-Trust Security Model

Zero trust is the cornerstone of any cyber resilience plan, limiting the impact of an incident on the organization. Instead of assuming everything behind the corporate firewall is safe, the zero-trust model assumes a breach and verifies each request as though it originates from an open network. Applying a familiar security framework like zero trust can help organizations better manage and govern any GenAI tools operating in their environments.
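To make the per-request idea concrete, here is a minimal Python sketch of a deny-by-default authorization check for a GenAI tool. It is illustrative only: the `AccessRequest` type, the `authorize` function, the signal names, and scope names such as `genai.chat` are hypothetical, and a real deployment would rely on an identity provider and a policy engine rather than hand-written checks. What it shows is that every request is evaluated on identity, MFA, device health, and least-privilege scopes, while the network the request came from is never consulted.

```python
from dataclasses import dataclass, field

# Hypothetical policy: scopes each GenAI tool action requires (least privilege).
REQUIRED_SCOPES = {
    "chat": {"genai.chat"},
    "summarize_docs": {"genai.chat", "docs.read"},
}


@dataclass(frozen=True)
class AccessRequest:
    user: str
    token_valid: bool        # assumed: token signature/expiry verified upstream
    mfa_passed: bool
    device_compliant: bool
    scopes: frozenset = field(default_factory=frozenset)
    source_network: str = "internet"  # recorded, but deliberately ignored below


def authorize(request: AccessRequest, action: str) -> tuple[bool, str]:
    """Evaluate a single request from scratch; nothing is trusted by default."""
    if not request.token_valid:
        return False, "invalid identity token"
    if not request.mfa_passed:
        return False, "MFA required"
    if not request.device_compliant:
        return False, "device not compliant"
    required = REQUIRED_SCOPES.get(action)
    if required is None:
        # Unknown actions are denied rather than allowed.
        return False, f"unknown action: {action}"
    missing = required - request.scopes
    if missing:
        return False, f"missing scopes: {sorted(missing)}"
    # source_network is never checked: being "inside the firewall" earns no trust.
    return True, "allowed"


if __name__ == "__main__":
    internal = AccessRequest(
        "alice", token_valid=True, mfa_passed=False, device_compliant=True,
        scopes=frozenset({"genai.chat"}), source_network="corporate",
    )
    print(authorize(internal, "chat"))  # (False, 'MFA required')
```

Because `source_network` plays no part in the decision, a request from inside the corporate network is refused on the same grounds as one from the open internet.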

Adopt Cyber Hygiene Standards

Basic security hygiene still protects against 99% of attacks. Meeting the minimum standards for cyber hygiene is essential for protecting against cyber threats, minimizing risk, and ensuring the ongoing viability of the business. For organizations concerned about privacy, AI misuse, or fraud, strong cyber hygiene standards can help protect against some of the most common threats.

Establish a Data Security and Protection Plan

Because data security is one of the chief concerns around GenAI implementation, security teams should establish a comprehensive data security and protection plan before deploying any new GenAI technology. Defense in depth, which layers multiple independent controls so that no single failure exposes data, is one of the strongest strategies available for data security. We also recommend enforcing data labeling so that sensitive data is appropriately tagged, and applying stringent data permissions to reduce unauthorized access to sensitive information.
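The labeling and permission recommendations can be sketched as layered filters that run before any data reaches a GenAI prompt. The following Python example is a hypothetical illustration, not a product feature: the `Sensitivity` label scheme, the `Document` type, and the `documents_for_prompt` function are assumptions made for this sketch. Each check acts as an independent layer, in the spirit of defense in depth: unlabeled content is excluded, content above the tool's approved sensitivity level is excluded, and content the requesting user has no permission to read is excluded.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List, Optional


class Sensitivity(IntEnum):
    # Hypothetical label scheme, ordered from least to most sensitive.
    PUBLIC = 0
    GENERAL = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    label: Optional[Sensitivity]   # None means the document was never labeled
    allowed_groups: frozenset      # groups granted read permission


def documents_for_prompt(
    docs: Iterable[Document], user_groups: set, max_label: Sensitivity
) -> List[Document]:
    """Return only documents that pass every layer and may enter a GenAI prompt."""
    selected = []
    for doc in docs:
        # Layer 1: unlabeled data is excluded, so missing labels cannot leak.
        if doc.label is None:
            continue
        # Layer 2: the GenAI tool is only approved up to a given sensitivity.
        if doc.label > max_label:
            continue
        # Layer 3: the requesting user must hold read permission on the document.
        if not (doc.allowed_groups & user_groups):
            continue
        selected.append(doc)
    return selected


if __name__ == "__main__":
    docs = [
        Document("d1", "Public FAQ", Sensitivity.PUBLIC, frozenset({"everyone"})),
        Document("d2", "Salary data", Sensitivity.HIGHLY_CONFIDENTIAL, frozenset({"hr"})),
        Document("d3", "Draft roadmap", None, frozenset({"everyone"})),
        Document("d4", "Project plan", Sensitivity.CONFIDENTIAL, frozenset({"engineering"})),
    ]
    allowed = documents_for_prompt(docs, {"everyone", "engineering"}, Sensitivity.CONFIDENTIAL)
    print([d.doc_id for d in allowed])  # ['d1', 'd4']
```

Excluding unlabeled documents by default is a deliberate choice in this sketch: it turns a missing label into a visible gap to fix rather than a silent path for sensitive data to reach the model.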

Define an AI Governance Structure

Finally, before your organization fully activates its chosen GenAI solution, it needs an AI governance structure in place. AI-ready organizations have implemented processes, controls, and accountability frameworks that govern data privacy, security, and the development of their AI systems, including the implementation of responsible AI standards.

Ultimately, GenAI has the potential to transform security as we know it. AI solutions are already helping to close the cybersecurity talent gap by making today's defenders more productive with prioritized alerts and automated remediation guidance. However, that potential hinges on our ability to securely implement, manage, and govern GenAI so that we can create a safer, more resilient cyber landscape for generations to come.

— Read more Partner Perspectives from Microsoft Security