Meta AI chief says large language models will not reach human intelligence

Meta’s artificial intelligence chief said the large language models that power generative AI products such as ChatGPT would never achieve the ability to reason and plan like humans, as he focused instead on a radical alternative approach to create “superintelligence” in machines.

Yann LeCun, chief AI scientist at the social media giant that owns Facebook and Instagram, said LLMs had “very limited understanding of logic . . . do not understand the physical world, do not have persistent memory, cannot reason in any reasonable definition of the term and cannot plan . . . hierarchically”.

In an interview with the Financial Times, he argued against relying on advancing LLMs in the quest to make human-level intelligence, as these models can only answer prompts accurately if they have been fed the right training data and are, therefore, “intrinsically unsafe”.

Instead, he is working to develop an entirely new generation of AI systems that he hopes will power machines with human-level intelligence, although he said this vision could take 10 years to achieve.

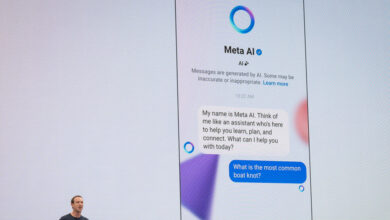

Meta has been pouring billions of dollars into developing its own LLMs as generative AI has exploded, aiming to catch up with rival tech groups, including Microsoft-backed OpenAI and Alphabet’s Google.

LeCun runs a team of about 500 staff at Meta’s Fundamental AI Research (Fair) lab. They are working towards creating AI that can develop common sense and learn how the world works in similar ways to humans, in an approach known as “world modelling”.

The Meta AI chief’s experimental vision is a potentially risky and costly gamble for the social media group at a time when investors are itching to see quick returns on AI investments.

Last month, Meta lost nearly $200bn in value when chief executive Mark Zuckerberg vowed to increase spending and turn the social media group into “the leading AI company in the world”, spooking Wall Street investors concerned about rising costs with little immediate revenue potential.

“We are at the point where we think we are on the cusp of maybe the next generation AI systems,” LeCun said.

LeCun’s comments come as Meta and its rivals push forward with ever more enhanced LLMs. Figures such as OpenAI chief Sam Altman believe they provide a vital step towards creating artificial general intelligence (AGI) — the point when machines have greater cognitive capabilities than humans.

OpenAI last week released its new, faster GPT-4o model, and Google unveiled Project Astra, a new “multimodal” artificial intelligence agent that can answer real-time queries across video, audio and text, powered by an upgraded version of its Gemini model.

Meta also launched its new Llama 3 model last month. The company’s global affairs head Sir Nick Clegg said its latest LLM had “vastly improved capabilities like reasoning” — the ability to apply logic to queries. For example, the system would surmise that a person suffering from a headache, sore throat and runny nose had a cold, but could also recognise that allergies might be causing the symptoms.

However, LeCun said this evolution of LLMs was superficial and limited, with the models learning only when human engineers intervene to train them on that information, rather than reaching a conclusion organically as people do.

“It certainly appears to most people as reasoning — but mostly it’s exploiting accumulated knowledge from lots of training data,” LeCun said, but added: “[LLMs] are very useful despite their limitations.”

Google DeepMind has also spent several years pursuing alternative approaches to building AGI, including reinforcement learning, where AI agents learn from their surroundings in a game-like virtual environment.

At an event in London on Tuesday, DeepMind’s chief Sir Demis Hassabis said what was missing from language models was “they didn’t understand the spatial context you’re in . . . so that limits their usefulness in the end”.

Meta set up its Fair lab in 2013 to pioneer AI research, hiring leading academics in the space.

However, in early 2023, Meta created a new GenAI team, headed by chief product officer Chris Cox. It poached many AI researchers and engineers from Fair, led the work on Llama 3 and integrated the model into products such as Meta’s new AI assistants and image-generation tools.

The creation of the GenAI team came as some insiders argued that an academic culture within the Fair lab was partly to blame for Meta’s late arrival to the generative AI boom. Zuckerberg has pushed for more commercial applications of AI under pressure from investors.

However, LeCun has remained one of Zuckerberg’s core advisers, according to people close to the company, due to his record and reputation as one of the founding fathers of AI, having won a Turing Award for his work on neural networks.

“We’ve refocused Fair towards the longer-term goal of human-level AI, essentially because GenAI now is focused on the stuff that we have a clear path towards,” LeCun said.

“[Achieving AGI is] not a product design problem, it’s not even a technology development problem, it’s very much a scientific problem,” he added.

LeCun first published a paper on his world modelling vision in 2022 and Meta has since released two research models based on the approach.

Today, he said Fair was testing different ideas to achieve human-level intelligence because “there’s a lot of uncertainty and exploration in this, [so] we can’t tell which one will succeed or end up being picked up”.

Among these, LeCun’s team is feeding systems with hours of video and deliberately leaving out frames, then getting the AI to predict what will happen next. This is to mimic how children learn from passively observing the world around them.

He also said Fair was exploring building “a universal text encoding system” that would allow a system to process abstract representations of knowledge in text, which can then be applied to video and audio.

Some experts doubt whether LeCun’s vision is viable.

Aron Culotta, associate professor of computer science at Tulane University, said common sense had long been “a thorn in the side of AI”, and that it was challenging to teach models causality, leaving them “susceptible to these unexpected failures”.

One former Meta AI employee described the world modelling push as “vague fluff”, adding: “It feels like a lot of flag planting.”

A current employee said Fair had yet to prove itself as a true rival to research groups such as DeepMind.

In the longer term, LeCun believes the technology will power AI agents that users can interact with through wearable technology, including augmented reality or “smart” glasses, and electromyography (EMG) “bracelets”.

“[For AI agents] to be really useful, they need to have something akin to human-level intelligence,” he said.

Additional reporting by Madhumita Murgia in London