Meta Unveils Llama 3 Generative AI Model, Claims It As ‘Most Capable’ Open LLM Around

Meta has revealed the Llama 3 generative AI model, the latest iteration of the Llama large language model (LLM) portfolio. Llama 3 is available in two sizes: 8 billion parameters and 70 billion parameters, and Meta claims they are equal to or better than any other open generative AI model of comparable sizes.

Llama 3

The Llama 3 models show significant improvements over Llama 2. Meta points to better pretraining and fine-tuning procedures that have lowered false refusal rates and improved the alignment and diversity of model responses. The result, according to Meta, is models that are better at logic and code generation. To measure the models' effectiveness, Meta also built a new high-quality human evaluation set of 1,800 prompts covering 12 use cases.

“With Llama 3, we set out to build the best open models that are on par with the best proprietary models available today. We wanted to address developer feedback to increase the overall helpfulness of Llama 3 and are doing so while continuing to play a leading role on responsible use and deployment of LLMs,” Meta explained in a blog post. “The text-based models we are releasing today are the first in the Llama 3 collection of models. Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context, and continue to improve overall performance across core LLM capabilities such as reasoning and coding.”

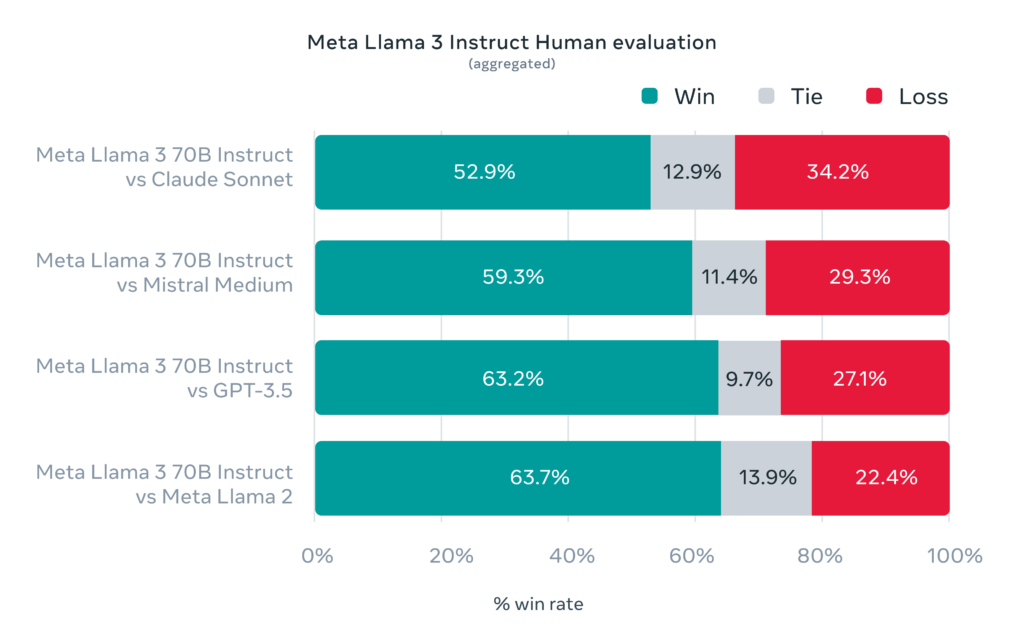

Llama 3’s scores on several AI benchmarks appear to back up Meta’s claims. Llama 3 8B outperformed rival open models, specifically Mistral’s Mistral 7B and Google’s Gemma 7B, on multiple tests. The larger Llama 3 70B, meanwhile, appears competitive with some of the most advanced closed models, such as Google’s Gemini 1.5 Pro, and even beat some of Anthropic’s Claude 3 models, though not the flagship Opus.

Qualitatively, Meta claims that Llama 3 models offer improved “steerability,” fewer refusals to answer questions, and higher accuracy on trivia, history, STEM, and coding tasks. Meta attributes this performance to a massive 15-trillion-token training dataset, seven times larger than the one used for Llama 2 and spanning over 30 languages, although the initial release focuses on English outputs.

Llama 3 powers the Meta AI assistant on Facebook and other Meta platforms, but Meta is also keen to spread the model to third-party developers. That’s why Llama 3, like the earlier Llama models, is released under Meta’s own community license with no license fee, though there are some preferred partner channels like Microsoft and AWS. Based on the benchmark tests, Meta is clearly hoping that businesses looking for open LLMs will choose Llama 3 over Gemma, Mixtral, xAI’s Grok 1.5, or Databricks’ DBRX.
