NASA Looking to Use Purdue Robots on Moon, Mars

May 13, 2024

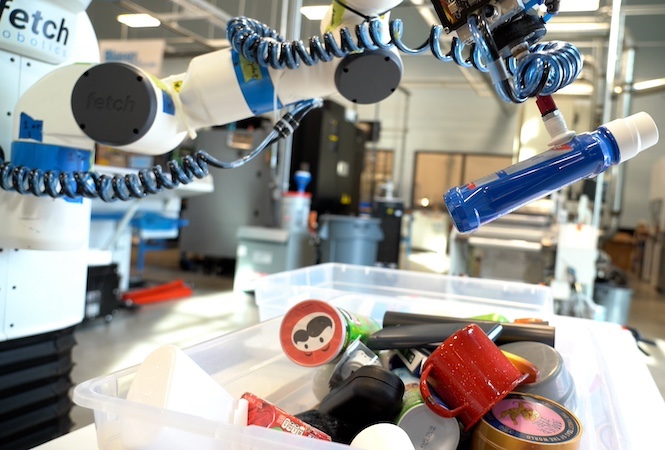

- Purdue University researchers have completed early testing of robots using three different “end effectors” that act as hands, each suited to manipulating items in a different way.

- With computer vision and onboard machine learning, the system can identify an object and its orientation, select the right grasping tool and then handle it.

As humans expand their reach into space, robots may do much of the day-to-day work beyond Earth’s orbit, such as turning screws and opening drawers. Extraterrestrial habitats are part of NASA’s roadmap toward the moon, Mars and beyond, and when these habitats are unoccupied, robots will need to handle the maintenance.

With that in mind, a group of scientists is building a system that helps robots identify the objects around them and attach the tools they need to pick up or manipulate those objects.

Researchers at Purdue University have been developing a machine-learning solution that can identify equipment such as a screwdriver, electrical panel or switch—and then manipulate each item with the proper tools.

The Purdue system uses a combination of computer vision, onboard machine learning and QR codes to help robots carry out work, either autonomously or alongside astronauts.

The autonomous grasping robots project is led by David Cappelleri, a professor of mechanical engineering at Purdue University.

Enabling Robots on Extraterrestrial Habitats

NASA has been considering automated technologies to keep habitats operational in space even when they’re not occupied by astronauts. These extraterrestrial habitats can include stations in space, on the moon or on a planet like Mars. Most of the time these habitats would be unmanned, which means robots and support technology would be required to maintain their operation.

Purdue University’s multi-year project is part of the Resilient Extra-Terrestrial Habitats institute (RETHi), a NASA-funded space technology research organization. The RETHi project aims to build technology that gives future space habitats the resilience to adapt to potential threats, awareness of their condition through sensors, and robotics that can operate independently or in collaboration with humans.

RETHi’s partners include the University of Connecticut, Harvard, the University of Texas at San Antonio and Mississippi State University. Purdue is the lead institution for RETHi, which officially began in early 2020.

Suction Cup, Parallel Jaw Gripper and Soft Hand

The Purdue University team is developing three tools known as end effectors that can be attached to a robotic arm to undertake the kinds of tasks the human hand can accomplish naturally—such as manipulating objects, opening or closing them, or conducting basic maintenance.

The three end effectors for the robot are a suction cup gripper, a parallel jaw gripper and a two-finger soft hand for more delicate tasks. The team designed a mobile tool holder that contains the three end effectors. Each has a QR code that links to that item in the system software.

The three modular effectors would be accessible to the robot so that, when it has identified an object and its orientation and distance, it can then select the appropriate end effector to manipulate that object as needed to carry out the task.
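The selection step described above can be pictured as a small decision rule that maps what the robot has recognized to one of the three tools. The sketch below is purely illustrative: the article says the Purdue system learns how to grasp objects, so the fixed if/else rules, the `DetectedObject` fields and the `select_end_effector` name are hypothetical stand-ins, not the team’s code.

```python
from dataclasses import dataclass
from enum import Enum


class EndEffector(Enum):
    """The three tools carried in the mobile tool holder."""
    SUCTION_CUP = "suction_cup"
    PARALLEL_JAW = "parallel_jaw"
    SOFT_HAND = "soft_hand"


@dataclass
class DetectedObject:
    """Hypothetical summary of what the vision pipeline reports."""
    label: str
    fragile: bool           # needs a light touch, e.g. a muffin
    has_flat_surface: bool  # a suction cup could seal against it, e.g. a panel


def select_end_effector(obj: DetectedObject) -> EndEffector:
    """Hypothetical hand-written rules standing in for a learned selection."""
    if obj.fragile:
        return EndEffector.SOFT_HAND    # delicate items go to the soft hand
    if obj.has_flat_surface:
        return EndEffector.SUCTION_CUP  # flat faces suit a suction seal
    return EndEffector.PARALLEL_JAW     # everything else, e.g. drawer handles


for item in [
    DetectedObject("muffin", fragile=True, has_flat_surface=False),
    DetectedObject("electrical panel", fragile=False, has_flat_surface=True),
    DetectedObject("drawer handle", fragile=False, has_flat_surface=False),
]:
    print(item.label, "->", select_end_effector(item).name)
```

Running it prints one line per object, pairing the muffin with `SOFT_HAND`, the panel with `SUCTION_CUP` and the drawer handle with `PARALLEL_JAW`. A learned system would replace these rules with a model, but the interface (recognized object in, tool choice out) stays the same.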

How it Works

At the heart of the project is machine learning, which trains the robots on the different objects in their environment so they can identify, segment and classify those objects and learn how to grip them.

As it goes about its tasks, the robot uses a built-in RGB-D (color plus depth) camera to identify not only what an object is but also its orientation and how far away it is. The object could be something very delicate or small (Cappelleri uses the example of a muffin, which would require a light touch) or a sturdier item such as a large flat electrical panel, a drawer handle or a switch.

Once the item and its orientation are identified, the robot selects the proper end effector, confirming its identity with a QR code scan. It then attaches the tool to the end of its movable arm, which creates a communication channel between that tool and the robot itself.
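The QR-code confirmation described above amounts to checking a tool’s identity before committing to attach it. This sketch assumes a hypothetical payload format (`EE:<tool name>`) and hypothetical `ToolHolder` and `RobotArm` classes; it treats the decoded QR text as an already-available string rather than calling any real QR-decoding library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToolHolder:
    """Mobile tool holder: slot number -> QR payload on the tool in that slot.
    The 'EE:<tool name>' payload format is a hypothetical convention."""
    slots: Dict[int, str]


@dataclass
class RobotArm:
    attached: Optional[str] = None  # tool currently on the wrist, if any
    log: List[str] = field(default_factory=list)

    def attach_tool(self, holder: ToolHolder, wanted: str) -> int:
        """Confirm a tool's identity by its QR payload before attaching it.

        Returns the slot the tool was taken from; raises LookupError if no
        slot's payload matches, so the arm never attaches an unconfirmed tool.
        """
        expected = f"EE:{wanted}"
        for slot, payload in sorted(holder.slots.items()):
            # A real robot would decode 'payload' from a camera image here.
            if payload == expected:
                self.attached = wanted
                # Attaching is where the wrist and tool computers would open
                # their communication link (not modeled in this sketch).
                self.log.append(f"attached {wanted} from slot {slot}")
                return slot
        raise LookupError(f"no tool with QR payload {expected!r} in the holder")


holder = ToolHolder({0: "EE:suction_cup", 1: "EE:parallel_jaw", 2: "EE:soft_hand"})
arm = RobotArm()
print(arm.attach_tool(holder, "soft_hand"), arm.attached)
```

Failing loudly when no payload matches is a deliberate design choice here: a robot working unsupervised should stop rather than grasp with the wrong tool.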

Communication Systems

To pass power and communications back and forth between the robot and the end effector, there is a small embedded computer in the wrist, as well as one in each end effector.

The system then continuously analyzes the grasp placement, the proper grip or seal (in the case of the suction cup), and where to move the item.

If a new object were introduced at the space station, machine learning would enable the robot to recognize its similarity to other objects and decide how it should be handled, and with which tool.
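One simple way to picture “recognizing similarity to other objects” is a nearest-neighbor lookup: compare the new object’s features with those of known objects and borrow the tool used for the closest match. This sketch swaps in cosine similarity over small, made-up feature vectors; the `KNOWN_OBJECTS` table, its numbers and the function names are hypothetical and not taken from the Purdue system, whose features would come from its trained vision model.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical library of known objects: name -> (feature vector, tool used).
# These 3-D vectors are made up purely for illustration.
KNOWN_OBJECTS: Dict[str, Tuple[List[float], str]] = {
    "electrical_panel": ([0.9, 0.1, 0.0], "suction_cup"),
    "drawer_handle": ([0.1, 0.9, 0.1], "parallel_jaw"),
    "muffin": ([0.0, 0.2, 0.9], "soft_hand"),
}


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def tool_for_new_object(features: Sequence[float]) -> Tuple[str, str, float]:
    """Return (most similar known object, the tool used for it, similarity)."""
    best_name, best_score = max(
        ((name, cosine_similarity(features, vec))
         for name, (vec, _tool) in KNOWN_OBJECTS.items()),
        key=lambda pair: pair[1],
    )
    return best_name, KNOWN_OBJECTS[best_name][1], best_score


# A never-before-seen cupcake whose (made-up) features resemble the muffin's.
name, tool, score = tool_for_new_object([0.05, 0.25, 0.85])
print(name, tool, round(score, 3))
```

With these illustrative numbers the cupcake lands closest to the muffin, so the sketch recommends the soft hand. A practical system would also set a minimum similarity below which the robot asks for help instead of guessing.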

Synthetic Data Helps Train the ML System

One of the biggest challenges in developing the machine learning algorithms was gathering enough data to train the robot, Cappelleri said. To address this, the group generated synthetic data to help train the system.

That effort involved creating virtual scenes with varying conditions and objects to broaden the basis for training. This was coupled with a dynamic simulation in which the robot could attempt to grasp an object in the virtual world; the team then tested the simulated scenarios in the lab with real end effectors. For instance, the robot could be trained to understand how the curvature of an object affects a suction cup’s seal.

The research group has released its open-source SIM-Suction algorithm for the suction cup end effector, along with training data, on GitHub so that others can access it and build their own systems to test it. As Cappelleri pointed out, “that lets people get their hands on it so they can start testing it out and using it themselves in their own applications.”

Commercial Applications

Next on the agenda is testing the parallel jaw gripper and the two-finger soft hand.

The technology could serve commercial applications as well, said Cappelleri. Robots could use it to identify and manipulate inventory or assets in a warehouse or a factory. The same technology could even let a robot handle household tasks such as emptying a dishwasher.

In the long term, however, the benefits are likely to be achieved in space.

“It would be great if we can get this out to a [space station]; that would be the ultimate goal,” Cappelleri said.