New neural tech could power insect-sized intelligent flying robots

Researchers have developed a neuromorphic vision-to-control mechanism that makes autonomous drone flights possible.

Neuromorphic systems mimic the brain’s biological processing via spiking neural networks.
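To give a feel for what "spiking" means, here is a minimal sketch of a single leaky integrate-and-fire neuron, a common textbook model of a spiking unit. It is not the neuron model used in the study; the function name `simulate_lif` and the `leak` and `threshold` values are assumptions chosen for illustration.

```python
def simulate_lif(input_current, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 spikes, one per input sample.
    """
    membrane = 0.0
    spikes = []
    for current in input_current:
        # Leak part of the stored potential, then integrate the new input.
        membrane = leak * membrane + current
        if membrane >= threshold:
            spikes.append(1)
            membrane = 0.0  # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


# A constant weak input only crosses the threshold every few steps,
# so the output is sparse compared with the input.
spike_train = simulate_lif([0.3] * 10)
print(spike_train)
```

The neuron stays silent most of the time and only emits an occasional spike, which is the kind of sparse activity that neuromorphic hardware is built to exploit.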

For the project, a Delft University of Technology team in the Netherlands developed a five-layer spiking neural network comprising 28,800 neurons to process raw, event-based camera data. This network maps incoming raw events to estimate the camera’s 3D motion within its environment.

Researchers claim that compared to other vision-based drone flight systems that employ artificial neural networks, the new system is more compact and energy-efficient.

“The neuromorphic processor consumes only 0.94 watts of idle power and an additional 7-12 milliwatts while running the network,” said researchers in a statement.
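As a rough back-of-the-envelope check, the quoted power figures can be turned into energy per network update. The sketch below assumes the additional 7-12 milliwatts is an average over time and uses the 200 Hz execution frequency reported in the study abstract; the helper name `energy_per_update_uj` is hypothetical.

```python
IDLE_POWER_W = 0.94             # idle power quoted in the statement
EXTRA_POWER_W = (0.007, 0.012)  # additional 7-12 mW while running the network
UPDATE_RATE_HZ = 200.0          # execution frequency from the study abstract


def energy_per_update_uj(power_w, rate_hz=UPDATE_RATE_HZ):
    """Energy in microjoules spent per network update at the given power."""
    return power_w / rate_hz * 1e6


dynamic_uj = [energy_per_update_uj(p) for p in EXTRA_POWER_W]
total_power_w = [IDLE_POWER_W + p for p in EXTRA_POWER_W]
print(f"dynamic energy per update: {dynamic_uj[0]:.0f}-{dynamic_uj[1]:.0f} microjoules")
print(f"total power: {total_power_w[0]:.3f}-{total_power_w[1]:.3f} W")
```

Under these assumptions, running the network adds on the order of tens of microjoules per update, while idle power dominates the total draw.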

Deployed on a drone, the vision-to-control pipeline allowed the aircraft to autonomously control its position in space, letting it hover, land, and maneuver sideways.

SNN-driven drone flight

Biological systems achieve low-latency and energy-efficient perception and action through asynchronous and sparse sensing and processing. In robotics, neuromorphic hardware, which incorporates event-based vision and spiking neural networks, aims to replicate these characteristics.

However, current implementations are limited to basic tasks with low-dimensional sensory inputs and motor actions. According to researchers, this limitation arises due to the constrained network size of existing embedded neuromorphic processors and the challenges involved in training spiking neural networks.

To illustrate the possibilities of neuromorphic hardware, researchers used a complete neuromorphic vision-to-control pipeline to fly a drone.

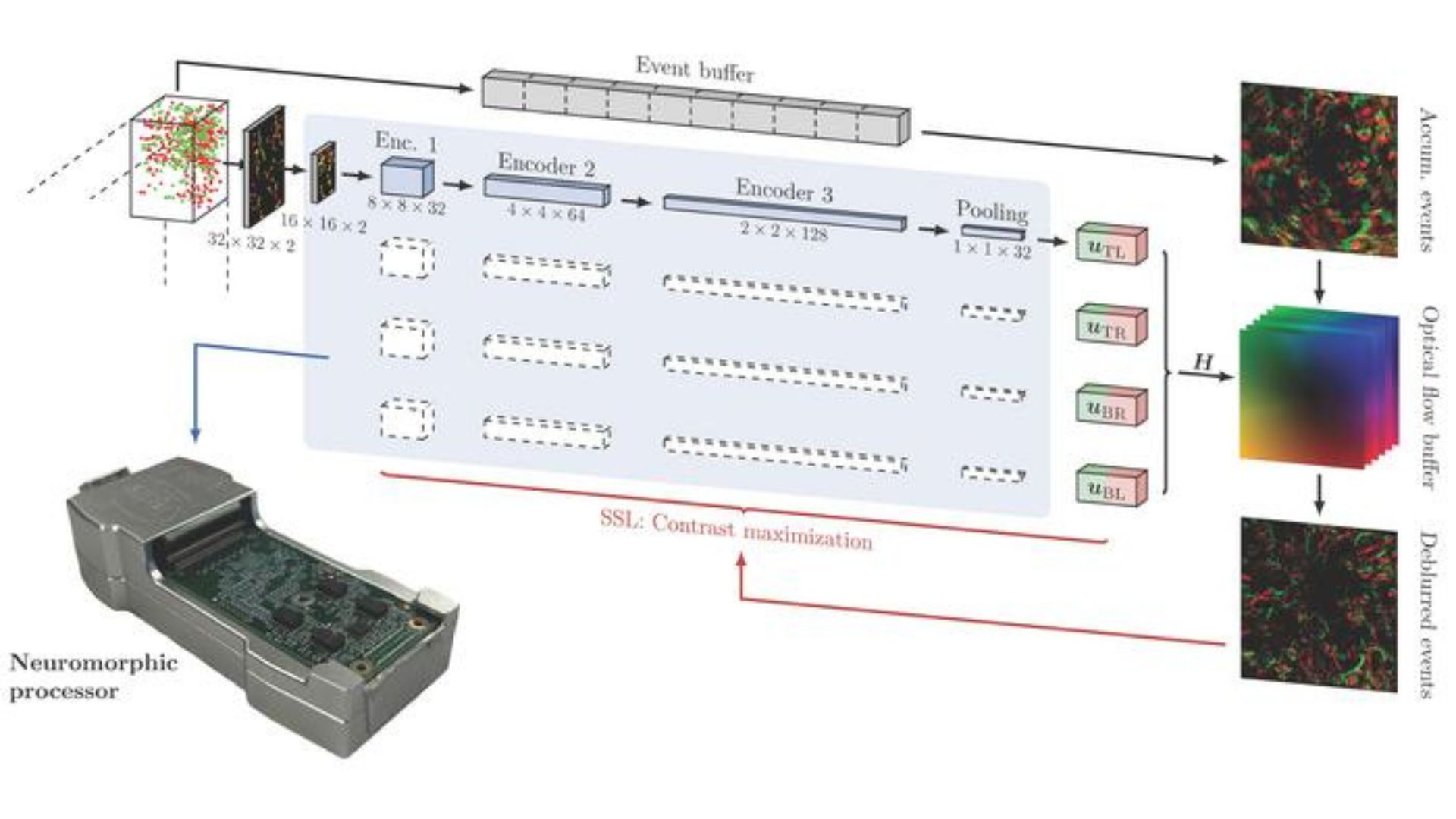

With the help of a spiking neural network (SNN) trained to process raw event camera data and produce low-level control actions, the pipeline can conduct autonomous vision-based ego-motion estimation and control at a frequency of about 200 Hz.

The learning setup’s fundamental feature is its separation of vision and control, which offers two significant benefits. First, because the vision component was trained on raw events from the drone’s actual event camera, it helps avoid the reality gap on the camera event input side.

The team opted for self-supervised learning to avoid the necessity of ground-truth measurements, which are challenging to acquire for event-based vision. Additionally, since the vision system outputs an ego-motion estimate, the control can be learned in a simple and highly efficient simulator.

This approach avoids the need to generate high-frequency, visually realistic images for event generation, which would otherwise result in excessively long training times in an end-to-end learning setup.
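The idea of learning control in a cheap simulator with an evolutionary algorithm can be sketched in a few lines. This is only a toy illustration, not the team's simulator or algorithm: the 1-D point-mass model, the drag term, the two-parameter linear controller, and the names `rollout_cost` and `evolve` are all assumptions.

```python
import random


def rollout_cost(gains, target_velocity=0.5, steps=200, dt=0.005):
    """Simulate a 1-D point mass and return the mean squared velocity error."""
    gain, bias = gains
    velocity = 0.0
    cost = 0.0
    for _ in range(steps):
        error = target_velocity - velocity
        acceleration = gain * error + bias  # linear controller
        acceleration -= 0.5 * velocity      # simple drag term (assumed)
        velocity += acceleration * dt
        cost += error * error
    return cost / steps


def evolve(generations=30, population=8, offspring=16, sigma=0.5, seed=0):
    """(mu + lambda) evolution: keep the best candidates, mutate them, repeat."""
    rng = random.Random(seed)
    parents = [(rng.uniform(0, 1), rng.uniform(-1, 1)) for _ in range(population)]
    initial_best = min(rollout_cost(p) for p in parents)
    for _ in range(generations):
        children = []
        for _ in range(offspring):
            g, b = rng.choice(parents)
            children.append((g + rng.gauss(0, sigma), b + rng.gauss(0, sigma)))
        # Parents compete with children, so the best cost never gets worse.
        parents = sorted(parents + children, key=rollout_cost)[:population]
    return parents[0], rollout_cost(parents[0]), initial_best


best_gains, best_cost, initial_cost = evolve()
print(best_gains, best_cost, initial_cost)
```

Because each rollout only integrates a handful of numbers, thousands of candidate controllers can be evaluated quickly, which is the practical appeal of keeping rendered images out of the training loop.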

Efficient neuromorphic flight

The fully neuromorphic vision-to-control pipeline was implemented on Intel’s Loihi neuromorphic processor and used on a small flying robot for vision-based navigation. This system autonomously followed ego-motion set points without external aids.

The hardware setup comprises an event camera, a neuromorphic processor, a single-board computer, and a flight controller. The drone, which weighs 994 g and measures 35 cm in diameter, descended smoothly during landing experiments, with the researchers tracking both its height and the optical flow divergence.

According to the study, to meet computational constraints, the system assumed a static, texture-rich, flat surface viewed by a DVS 240 camera. The vision processing pipeline reduced spatial resolution by focusing on corner regions of interest (ROIs), processing up to 90 events per ROI with a small SNN.

This per-ROI SNN, with 7,200 neurons and 506,400 synapses across five layers, estimated optical flow for each ROI; the 28,800-neuron total cited earlier is consistent with four such networks. The vision network converted input events to optical flow at 200 Hz, and a linear controller translated these estimates into thrust and attitude commands.
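A linear controller of this kind amounts to one weighted sum per output command. The sketch below is a hedged illustration only: the choice of inputs (horizontal flow in x and y plus divergence), the output ordering (thrust, roll, pitch), the function name `linear_controller`, and every gain and bias value are assumptions rather than values from the study.

```python
def linear_controller(estimates, setpoints, weights, biases):
    """Map ego-motion errors to commands with a single linear layer.

    estimates, setpoints: sequences of equal length.
    weights: one row of gains per output command.
    """
    errors = [s - e for s, e in zip(setpoints, estimates)]
    return [
        sum(w * err for w, err in zip(row, errors)) + b
        for row, b in zip(weights, biases)
    ]


# Hypothetical gains: each row produces one command (thrust, roll, pitch)
# from the errors in (flow_x, flow_y, divergence).
WEIGHTS = [
    [0.0, 0.0, 2.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
]
BIASES = [0.5, 0.0, 0.0]

estimate = (0.25, 0.0, -0.5)   # current ego-motion estimate (made-up values)
setpoint = (0.0, 0.0, -0.25)   # desired ego-motion (made-up values)
thrust, roll, pitch = linear_controller(estimate, setpoint, WEIGHTS, BIASES)
print(thrust, roll, pitch)
```

With so few parameters, a controller like this is cheap to evaluate at every update and small enough to tune with a search method in simulation.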

The system was trained using self-supervised contrast maximization for vision and an evolutionary algorithm for control. Real-world tests confirmed the pipeline’s performance, demonstrating robustness under various lighting conditions and greater energy efficiency than GPU-based solutions.
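Contrast maximization rests on a simple observation: if events are moved back along the correct motion, they pile up on the same pixels and form a sharp image. The sketch below illustrates that objective on synthetic data. In the study the objective serves as a training signal for the SNN; the grid search here is only a stand-in, and the event data, image size, and the function name `contrast` are assumptions.

```python
def contrast(events, flow, width=32, height=16):
    """Variance of the image of warped events for a candidate flow (px/s)."""
    vx, vy = flow
    image = [[0] * width for _ in range(height)]
    for x, y, t in events:
        # Move each event back along the candidate flow to reference time t = 0.
        wx, wy = round(x - vx * t), round(y - vy * t)
        if 0 <= wx < width and 0 <= wy < height:
            image[wy][wx] += 1
    pixels = [count for row in image for count in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


# Synthetic events: three points moving at 100 px/s along x (assumed data).
TRUE_FLOW = (100.0, 0.0)
events = [
    (x0 + k, y0, k * 0.01)
    for x0, y0 in [(5, 3), (12, 8), (20, 12)]
    for k in range(10)
]

# Try a few candidate flows and keep the one with the sharpest warped image.
candidates = [(vx, 0.0) for vx in (0.0, 50.0, 100.0, 150.0)]
best_flow = max(candidates, key=lambda flow: contrast(events, flow))
print(best_flow)
```

No ground-truth flow is needed to score a candidate, which is why this objective suits self-supervised training on real event data.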

According to researchers, the positive results could help advance research on neuromorphic sensing and processing and may eventually make it possible to build intelligent robots the size of insects.

The details of the team’s research were published in the journal Science Robotics.

Study Abstract

Biological sensing and processing are asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions because of the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present a fully neuromorphic vision-to-control pipeline for controlling a flying drone. Specifically, we trained a spiking neural network that accepts raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28,800 neurons, maps incoming raw events to ego-motion estimates and was trained with self-supervised learning on real event data. The control part consists of a single decoding layer and was learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone could accurately control its ego-motion, allowing for hovering, landing, and maneuvering sideways, even while yawing at the same time. The neuromorphic pipeline runs on board Intel’s Loihi neuromorphic processor with an execution frequency of 200 hertz, consuming 0.94 watts of idle power and a mere additional 7 to 12 milliwatts when running the network. These results illustrate the potential of neuromorphic sensing and processing for enabling insect-sized intelligent robots.

ABOUT THE EDITOR

Jijo Malayil

Jijo is an automotive and business journalist based in India. Armed with a BA in History (Honors) from St. Stephen’s College, Delhi University, and a PG diploma in Journalism from the Indian Institute of Mass Communication, Delhi, he has worked for news agencies, national newspapers, and automotive magazines. In his spare time, he likes to go off-roading, engage in political discourse, travel, and teach languages.