Nvidia, Powered by A.I. Boom, Reports Soaring Revenue and Profits

Nvidia, which makes microchips that power most artificial intelligence applications, began an extraordinary run a year ago.

Fueled by an explosion of interest in A.I., the Silicon Valley company said last May that it expected its chip sales to go through the roof. They did — and the fervor didn’t stop, with Nvidia raising its revenue projections every few months. Its stock soared, driving the company to a more than $2 trillion market capitalization that makes it more valuable than Alphabet, the parent of Google.

On Wednesday, Nvidia again reported soaring revenue and profits that underscored how it remains a dominant winner of the A.I. boom, even as it grapples with outsize expectations and rising competition.

Revenue was $26 billion for the three months that ended in April, surpassing its $24 billion estimate in February and tripling sales from a year earlier for the third consecutive quarter. Net income surged sevenfold to $14.88 billion.

Nvidia also projected revenue of $28 billion for the current quarter, which ends in July, more than double the amount from a year ago and higher than Wall Street estimates.

“We are poised for our next wave of growth,” Jensen Huang, Nvidia’s chief executive, said in a statement.

A doubling rather than tripling of revenue would reflect the way skyrocketing sales of A.I. chips began transforming Nvidia’s results a year ago. Those growth rates are expected to slow now that the initial ramp-up makes year-over-year comparisons tougher.

Nvidia’s shares, which are up more than 90 percent this year, rose in after-hours trading after the results were released. The company also announced a 10-for-1 stock split.

Nvidia, which originally sold chips for rendering images in video games, has benefited from an early, costly bet on adapting its graphics processing units, or GPUs, to take on other computing tasks. When A.I. researchers began using those chips more than a decade ago to accelerate tasks like recognizing objects in photos, Mr. Huang jumped on the opportunity. He augmented Nvidia's chips for A.I. tasks and developed software to aid progress in the field.

The company’s flagship processor, the H100, has enjoyed feverish demand to power A.I. chatbots such as OpenAI’s ChatGPT. While most high-end standard processors cost a few thousand dollars, H100s have sold for anywhere from $15,000 to $40,000 each, depending on volume and other factors, analysts said.

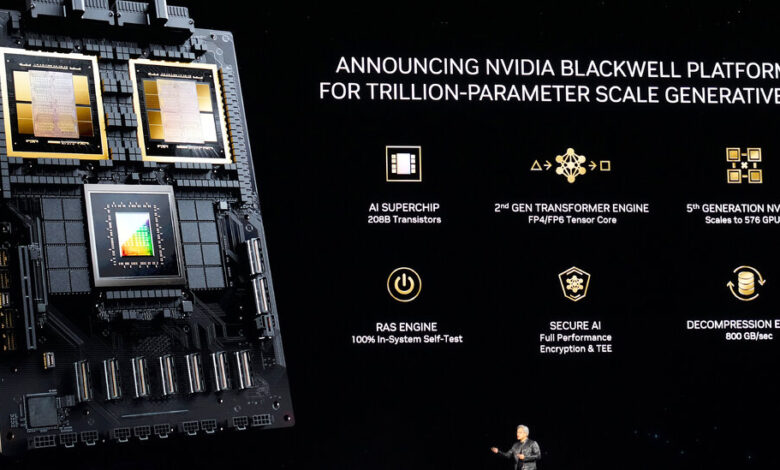

Analysts are debating the potential effects of a powerful successor to the H100, code-named Blackwell, which was announced in March and is expected to start appearing in its first products in the fall.

Demand for the new chips already appears to be strong, raising the possibility that some customers may wait for the speedier models rather than buy the H100. But there was little sign of such a pause in Nvidia’s latest results.

Wall Street analysts are also looking for signs that some richly funded rivals could grab a noticeable share of Nvidia’s business. Microsoft, Meta, Google and Amazon have all developed their own chips that can be tailored for A.I. jobs, though they have also said they are boosting purchases of Nvidia chips.

Traditional rivals such as Advanced Micro Devices and Intel have also made optimistic predictions about their A.I. chips. AMD has said it expects to sell $4 billion worth of a new A.I. processor, the MI300, this year.

Mr. Huang frequently points to what he has said is a sustainable advantage: Only Nvidia’s GPUs are offered by all the major cloud services, such as Amazon Web Services and Microsoft Azure, so customers don’t have to worry about getting locked into using one of the services because of its exclusive chip technology.

Nvidia also remains popular among computer makers that have long used its chips in their systems. One is Dell Technologies, which on Monday hosted a Las Vegas event that featured an appearance by Mr. Huang.

Michael Dell, Dell's chief executive and founder, said his company would offer new data center systems that pack 72 of the new Blackwell chips into a single computer rack, a standard structure that stands a bit taller than a refrigerator.

“Don’t seduce me with talk like that,” Mr. Huang joked. “That gets me superexcited.”