Nvidia Shows Why Generative AI Is Real – But Near-Term Correction Likely (NASDAQ:NVDA)


We previously covered Nvidia Corporation (NASDAQ:NVDA) in April 2024, discussing the uncertainty baked into its stock valuation and price as the market awaited its FQ1 ’25 earnings results.

As the market reeled from prolonged inflationary pain, we recommended that investors buy the pullback, given the robust long-term capital appreciation prospects and with the stock well supported in the $620s (or post-split $62).

Since then, NVDA has recorded an impressive +56.7% rally compared to the wider market at +7.1%, as management reported double-beat FQ1 ’25 results, offered promising FQ2 ’25 guidance, and announced the long-awaited 10-for-1 stock split.

With the management still guiding excellent FQ2’25 numbers and the market trend pointing to durable long-term generative AI demand, we believe that NVDA remains well poised to retain its AI market leadership.

Even so, with the market currently overly exuberant and NVDA likely to report tougher YoY comparisons ahead, we believe that it may be more prudent to wait for a moderate retracement before adding. We discuss below why a near-term correction may be likely.

Generative AI Is Getting Started In The SaaS Layer – Multi-Year Tailwinds Ahead

Without regurgitating NVDA’s recent double beat performance in FQ1 ’25, it is apparent that the generative AI boom has just started, with the infrastructure boom already starting to flow into the SaaS layer as reported by c3.ai (AI), Palantir (PLTR), and CrowdStrike (CRWD).

The same has been reported by OpenAI, with the CEO recently reporting annualized YTD revenues of $3.4B (+112.5% YoY), thanks to its growing subscription base with more to come, as Apple (AAPL) recently announced their Siri partnership.

Moving forward, we expect NVDA to continue reporting robust numbers, as more data center infrastructure companies/ REITs guide growing backlog and higher ASPs, implying the hyperscalers’ high spending power during the generative AI infrastructure boom.

Meta Platforms (META) has already guided FY2024 capex of $37.5B (+33.4% YoY), as part of their aggressive investments “to support our ambitious AI research and product development efforts.”

The same has been reported by Microsoft (MSFT), with it “on track to ramp CapEx over 50% year-on-year this year to over $50 billion” in 2024, along with the media speculation “to invest as much as $100B for a data center project” through 2030.

It is important to track these two companies’ AI-related spending, since they accounted for the bulk of NVDA’s H100 orders in 2023, with more to come as discussed above.

This is on top of the U.S. government’s recent push for $32B in AI-related emergency spending, with NVDA’s prediction of “Sovereign AI” – “a nation’s capabilities to produce artificial intelligence using its own infrastructure, data, workforce and business networks” likely playing out here.

Combined with the estimated market share of up to 95% in AI chips used for training and deploying Large Language Models (“LLMs”), we believe that NVDA remains well positioned to retain its AI leadership and robust top/ bottom-line performance ahead.

However, Potential Growth Headwinds Appear, With Near-Term Correction Likely

1. Inventory On Balance Sheet Grows As Lead Times Moderate & Redundancy Reported

On the one hand, NVDA has reported higher outstanding inventory purchases and long-term supply and capacity obligations totaling $18.8B in FQ1’25 (+16.7% QoQ/ +158.8% YoY), implying the management confidence to consistently deliver great sales ahead.

On the other hand, we are also observing higher inventory (finished goods) levels of $2.24B (+9.2% QoQ/ +19.7% YoY) on the balance sheet, as lead times narrow drastically to 2–3 months by April 2024. This is down from the 3–4 months reported in February 2024 and 8–12 months in November 2023.

While this development may be partly attributed to Taiwan Semiconductor’s (TSM) doubled CoWoS capacity on a YoY basis by Q1 ’24, as “the demand is very high, extremely high,” it remains to be seen if NVDA can continue charting its robust top/ bottom-line performance ahead.

This is especially since we are starting to see some chip redundancy. For example, Tesla (TSLA) has opted to direct 12K units of H100 chips to the X AI business, to prevent them from “sitting in a warehouse.”

While this may be a singular event, we may see customers placing orders as needed instead of doing so in advance, reducing visibility into NVDA’s intermediate-term top/ bottom lines.

This is given the shorter lead time – or, as highlighted by Barbara Jorgensen, a relatively painful bullwhip effect:

When customers are on allocation they often over order. Currently, the supply chain is working down inventory from orders placed during the height of the global chip shortage. Once AI chip supply and demand are more in balance, buyers may get more chips than they need and then pull back just as new capacity is coming online. (EPS News.)

2. ASPs Are Too Expensive, As Observed In The Expanding Profit Margins – Triggering Intensified In-House AI Chip Developments

It is not a secret that many Big Tech companies have developed their own in-house chips, including TSLA’s Dojo D1, MSFT’s Azure Maia 100/ Cobalt 100 chips, META’s Artemis, and Amazon’s (AMZN) Trainium2, among others.

Most recently, AAPL has introduced

“Private Cloud Compute: a groundbreaking cloud intelligence system designed specifically for private AI processing, built with custom Apple silicon and a hardened operating system designed for privacy.”

These developments are unsurprising, given the massive benefits of having specialized chips that serve each enterprise’s highly specific needs.

Most importantly, it also allows these Big Tech companies to reduce their reliance on NVDA’s AI chips and the costs associated with the training of their AI models, given how expensive the GPUs have been.

As NVDA launches increasingly high-end AI chips, with the Blackwell GPU in H2’24 and the Rubin GPU/ Vera CPU in 2026, we believe that prices may remain elevated, triggering intensified in-house AI chip developments.

For context, CEO Jensen Huang has highlighted that Blackwell will cost between $30K and $40K apiece, with the $35K midpoint higher by +7.7% than the $32.5K midpoint of the H100, which sells for between $25K and $40K apiece.
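
As a quick check on the midpoint comparison, the short sketch below recomputes it from the two price ranges quoted above. The helper names are our own, and the result is only as good as the quoted ranges. The premium works out to about 7.7% when rounded to one decimal place (7.6% if truncated).

```python
def midpoint(low: float, high: float) -> float:
    """Midpoint of a quoted price range."""
    return (low + high) / 2


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Per-chip price ranges in USD, as quoted in the article.
blackwell_mid = midpoint(30_000, 40_000)
h100_mid = midpoint(25_000, 40_000)

# Premium of the Blackwell midpoint over the H100 midpoint.
premium = pct_change(blackwell_mid, h100_mid)
print(f"Blackwell midpoint: ${blackwell_mid:,.0f}")
print(f"H100 midpoint:      ${h100_mid:,.0f}")
print(f"Midpoint premium:   {premium:+.1f}%")
```

Because both ranges share the same $40K upper bound, the entire premium comes from the higher floor price.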

The same has been observed in NVDA’s FY2025 adj gross margin guidance in the mid-70% range, elevated compared to FY2024 levels of 73.8% (+14.6 points YoY) and FY2020 levels of 62.5% (+0.8 points YoY).

Lastly, Taiwan Semiconductor (TSM) has hinted at the possibility of hiking prices for NVDA to better represent the former’s contribution during the ongoing generative AI boom, with the higher ASPs likely to be further passed on to customers.

As a result, it is unsurprising that MSFT’s CEO has highlighted that Advanced Micro Devices’ (AMD) Instinct MI300X AI accelerators provide the best “price-to-performance on GPT-4 inference” on the market, mirroring the positive sentiments offered by Lenovo (OTCPK:LNVGY) in a recent interview.

3. Tougher YoY Comparison, As Growth Decelerates

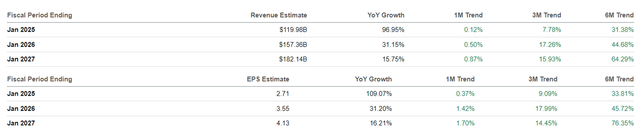

For now, NVDA continues to guide excellent FQ2’25 revenues of $28B (+7.5% QoQ/ +107.4% YoY), well exceeding the consensus estimates of $26.84B.

This is on top of the extremely robust demand, supporting its still-rich adj gross margin guidance of 75.5% (-2.9 points QoQ/ +4.3 YoY/ +15.4 from FQ2 ’20 levels of 60.1%), despite the notable QoQ headwinds.

Even so, based on the consensus FQ3’25 revenue estimates of $31.27B (+11.6% QoQ/ +72.5% YoY), it seems that NVDA may face tougher YoY comparisons, as triple-digit YoY growth rates give way to double-digit ones.
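
To make the "tougher comparison" point concrete, the sketch below backs out the prior-year revenue bases implied by the growth rates quoted above. These are derived figures, not reported ones, and the function name is our own. The implied base rises by roughly a third between the two quarters, while current-year revenue is expected to rise by only about 12% sequentially, which is what mechanically compresses the YoY growth rate.

```python
def implied_base(revenue: float, yoy_growth_pct: float) -> float:
    """Prior-year revenue implied by a revenue figure and its YoY growth."""
    return revenue / (1 + yoy_growth_pct / 100)


# Figures quoted in the article, in USD billions.
fq2_rev, fq2_yoy = 28.00, 107.4   # FQ2'25 guidance
fq3_rev, fq3_yoy = 31.27, 72.5    # FQ3'25 consensus

# Implied prior-year bases (derived from the quoted rates, not reported data).
fq2_base = implied_base(fq2_rev, fq2_yoy)
fq3_base = implied_base(fq3_rev, fq3_yoy)

# Sequential growth of the comparison base vs. the current-year revenue.
base_growth = (fq3_base / fq2_base - 1) * 100
current_growth = (fq3_rev / fq2_rev - 1) * 100

print(f"Implied prior-year base: ${fq2_base:.2f}B -> ${fq3_base:.2f}B")
print(f"Base grew {base_growth:.1f}% QoQ; current revenue grows {current_growth:.1f}% QoQ")
```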

While we agree that NVDA’s high double-digit YoY growth remains extremely impressive compared to its peers’ single-digit YoY growth, including AMD and Intel (INTC), we are uncertain how long this may last, as discussed in sections 1 and 2 above.

As a result, we believe it may be more prudent for investors to temper their near-term expectations, especially since two direct customers (A & B), likely MSFT and META, represented 24% of its overall revenues in FQ1’25, with another indirect customer representing 10% or more.

Once this replacement cycle ends, there may be moderate volatility in NVDA’s top-line growth, attributed to the relatively high customer concentration.

So, Is NVDA Stock A Buy, Sell, or Hold?

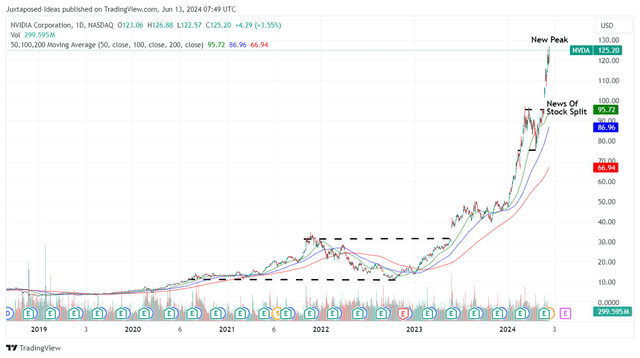

NVDA 5Y Stock Price

For now, NVDA has charted a new peak in the $125s after the market rewarded the stock for the double-beat FQ1 ’25 performance, promising FQ2 ’25 guidance, and the recently completed 10-for-1 stock split.

For context, we had offered a fair value estimate of $494.40 in our last article (or post-split $49.44), based on the FY2024 adj EPS of $12.96 (or post-split $1.29) and previous 1Y P/E mean valuations of 38.15x.

This is on top of the long-term price target of $1.37K (or post-split $137), based on the previous consensus FY2026 adj EPS estimates of $36.10 (post-split $3.61).

The Consensus Forward Estimates

Despite the premium observed against our previous fair value estimates, there remains an excellent upside potential of +25.7% to our raised long-term price target of $157.50, based on the raised consensus FY2026 adj EPS estimate of $4.13 and the same P/E valuation.
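
The valuation arithmetic here is simply EPS multiplied by the P/E multiple, and it can be reproduced with the minimal sketch below. The function names are our own. The exact share price behind the +25.7% upside is not stated in the article, so the sketch backs it out from the target and the quoted upside as an assumption. It lands at about $125.30, consistent with the "$125s" peak mentioned earlier.

```python
PE_MULTIPLE = 38.15  # previous 1Y P/E mean quoted in the article


def price_target(eps: float, pe: float = PE_MULTIPLE) -> float:
    """Price implied by an EPS figure and a P/E multiple."""
    return eps * pe


def implied_price(target: float, upside_pct: float) -> float:
    """Current price implied by a target and a quoted upside percentage."""
    return target / (1 + upside_pct / 100)


# Post-split EPS figures from the article (FY2024 EPS is $12.96 / 10).
prior_fair_value = price_target(12.96 / 10)  # article: $49.44
prior_target = price_target(3.61)            # article: about $137
raised_target = price_target(4.13)           # article: $157.50

# Assumption: reference price backed out from the quoted +25.7% upside.
reference_price = implied_price(157.50, 25.7)

print(f"Prior fair value: ${prior_fair_value:.2f}")
print(f"Prior target:     ${prior_target:.2f}")
print(f"Raised target:    ${raised_target:.2f}")
print(f"Implied price:    ${reference_price:.2f}")
```

Since the multiple is held constant, the whole increase in the price target comes from the higher consensus EPS estimate.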

For now, while we are reiterating our long-term Buy rating, it may be more prudent to observe NVDA’s movement for a little longer and wait for a moderate pullback for an improved upside potential.

This is especially since the stock is currently riding a new high from the recently completed stock split, as many insiders also unlocked great gains of $220M in Q2’24 (+193.3% QoQ/ +49.6% YoY).

Patience may be more prudent here, with the three factors discussed above potentially triggering a more attractive entry point, depending on the individual investor’s dollar cost averages and risk appetite.

While some analysts may have recommended a sell rating here, we do not believe in timing the market for NVDA, with the robust growth prospects warranting a Buy and Hold status in every growth oriented investor’s portfolio.