OpenAI co-founder Ilya Sutskever announces rival AI start-up

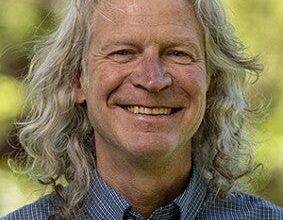

OpenAI’s co-founder Ilya Sutskever is starting a rival AI start-up focused on “building safe superintelligence”, just a month after he quit the AI company following an unsuccessful coup attempt against its chief executive Sam Altman.

On Wednesday, Sutskever, one of the world’s most respected AI researchers, launched Safe Superintelligence (SSI) Inc, which is billing itself as “the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence”, according to a statement published on X.

Sutskever has co-founded the breakaway start-up in the US with former OpenAI employee Daniel Levy and AI investor and entrepreneur Daniel Gross, who worked as a partner at Y Combinator, the Silicon Valley start-up incubator that Altman used to run.

Gross has stakes in the likes of GitHub and Instacart, and AI companies including Perplexity.ai, Character.ai and CoreWeave. Investors in SSI were not disclosed.

The trio said developing safe superintelligence, a type of machine intelligence that could supersede human cognitive abilities, was the company’s “sole focus”. They added that it would be unencumbered by revenue demands from investors, and called for top talent to join the initiative.

OpenAI had a similar mission to SSI when it was founded in 2015 as a not-for-profit research lab to create superintelligent AI that would benefit humanity. While Altman claims this is still OpenAI’s guiding principle, the company has turned into a fast-growing business under his leadership.

Sutskever and his co-founders said in a statement that SSI’s “singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures”. The statement added that the company will be headquartered in both Palo Alto and Tel Aviv.

Sutskever is considered one of the world’s leading AI researchers. He played a large role in OpenAI’s early lead in the nascent field of generative AI — the development of software that can generate multimedia responses to human queries.

The announcement of his OpenAI offshoot comes after a period of turmoil at the leading AI group centred on clashes over leadership direction and safety.

In November, OpenAI’s directors — who at the time included Sutskever — pushed Altman out as chief executive in an abrupt move that shocked investors and staff. Altman returned days later under a new board, without Sutskever.

After the failed coup, Sutskever remained at the company for a few months but departed in May. When he resigned he said he was “excited for what comes next — a project that is very personally meaningful to me about which I will share details in due time”.

This is not the first time OpenAI employees have broken off from the ChatGPT maker to make “safe” AI systems. In 2021, Dario Amodei, a former head of AI safety at the company, spun off his own start-up, Anthropic, which has raised $4bn from Amazon and hundreds of millions more from venture capitalists, at a valuation of more than $18bn, according to people with knowledge of the discussions.

Sutskever has said publicly that he has confidence in OpenAI’s current leadership. But Jan Leike, who worked closely with Sutskever and has also recently quit, said his differences with the company leadership had “reached a breaking point” as “safety culture and processes have taken a back seat to shiny products”. Leike has since joined OpenAI rival Anthropic.