PC-based AI and LLMs | Pipeline Magazine

By: Brian Case, Mark Cummings, Ph.D.

Generative AI (GenAI) Large Language Models (LLMs) can run with okay single-user performance on today’s notebook computers. This has very important implications. First, it promises to give

individual users a great increase in personal power for both good and for bad. Second, it foreshadows a world where GenAI is everywhere and in everything including routers, switches, home

controllers, industrial systems, the electrical grid, water systems, etc. Given all the press about Nvidia’s very expensive specialized chips that companies are fighting to buy, this assertion

may be a little surprising. To help understand this sea change, we provide a little background, the results of some real-world tests, and a beginning of a discussion on implications.

Before LLMs, AI applications were built on a variety of Machine Learning (ML) model architectures, each focused on one specific task. With the advent of LLMs, GenAI has been shown to be able to

do all the things that those single purpose AI systems could do and more.

LLM performance is typically measured along two axes: 1) the speed of output, called inference performance (how fast it generates a response to a prompt); and 2) the quality of their output,

referred to as accuracy (the “intelligence” of the response). Model accuracy is typically a function of characteristics that are fixed during training, which is before inference happens. These

characteristics include model size, nature of the training data, goals of fine tuning (including alignment), and so on. These characteristics affect, in general, the amount of knowledge stored in

the LLM. Here we are interested in the inference speed achievable with commodity hardware running trained open-source LLMs.

The LLM revolution was triggered by a key invention known as attention, which allows a model to understand the relationships of words in sentences, elements of pictures, sounds in audio, and

so forth. This invention resulted in the transformer architecture, described in the seminal paper Attention is All You

Need; this architecture is used in modern LLMs. A transformer, like most other modern AI algorithms, is a machine-learning model implemented as a DNN (Deep Neural Network). LLM

capabilities scale with model size, which is determined by so-called hyper-parameters such as the number of transformer layers, token embedding size (dimensionality of semantics for each token),

number of attention heads, etc. For inference, these hyper-parameters are interesting, but they are “baked in” to the model during training and are not, generally speaking, modifiable.

To improve inference speed, a process known as post-training quantization reduces the number of bits that represent each weight (a weight is also called a parameter), which is typically a

floating-point value. These weights are a result of training, fine tuning, etc. Since even a small LLM has over a billion weights, a reduction in the number of bits per weight has a big impact on

storage requirements and performance. Up to a point, reducing the number of bits (the degree of quantization) usually increases the speed of inference with only minor effects on accuracy.

In contrast to inference, LLM training is a very resource intensive task and is not considered here. Rather, our focus is on the use of LLMs, which means inference.

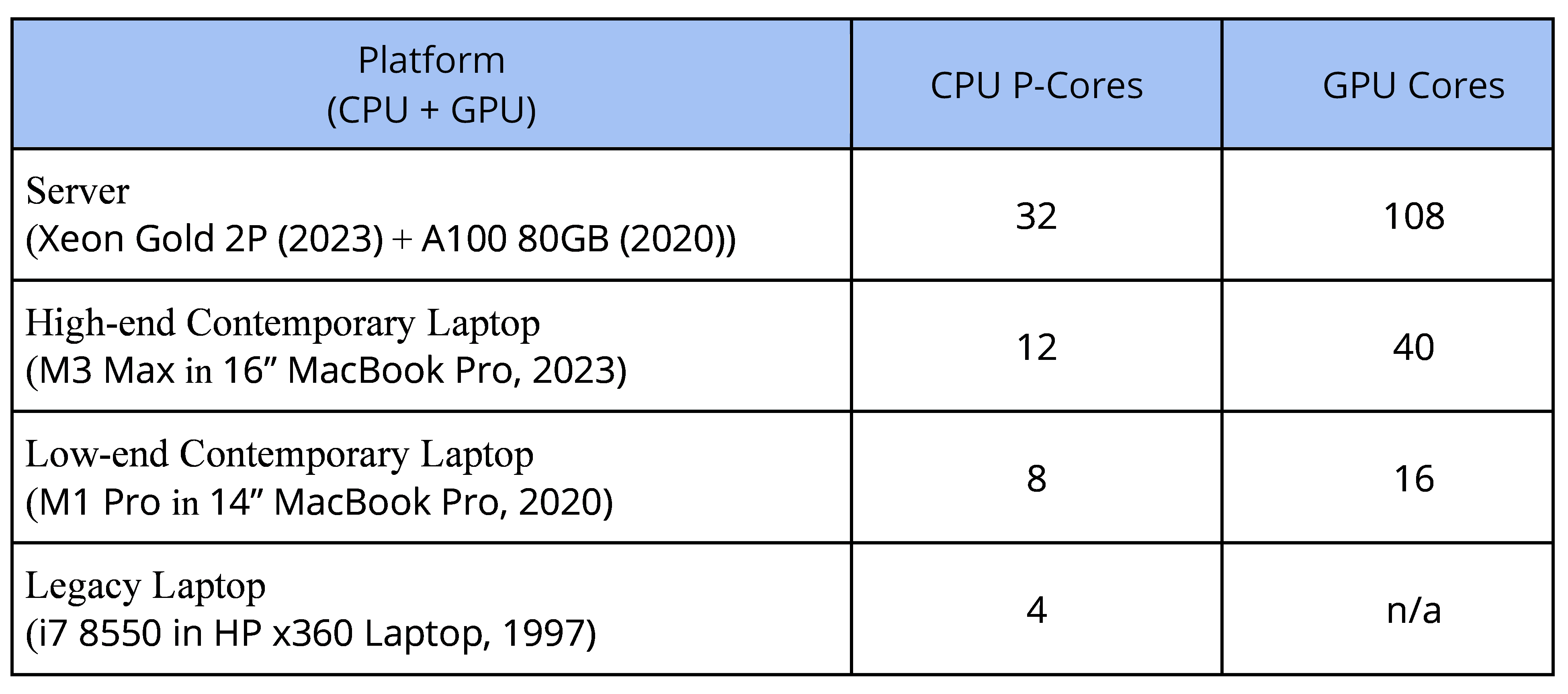

Table 1. Hardware platforms used in this work.

Here, we show that LLM inference is already feasible on commodity hardware, specifically laptops. This is important because history shows that what runs on today’s laptops will run on

tomorrow’s smartphones, tablets, IoT devices, etc. To illustrate our