Planning Migrations to Successfully Incorporate Generative AI

The recent rise of generative artificial intelligence (generative AI) solutions presents challenges to migrations that are in flight and to migrations that are just beginning. The business problem is that generative AI complicates cloud migrations by introducing additional risks related to data isolation, data sharing, and service costs. For example, the US Space Force has paused all generative AI implementations until risks related to data aggregation are addressed. As generative AI is a relatively recent technological advancement, data isolation and lifecycle concerns may not have been considered in migration planning. The desire to use generative AI may accelerate cloud migrations, and planners may lack awareness of potential data risks.

This blog explores the technical controls that AWS services provide to mitigate these risks, as well as business solutions that address them. It also summarizes three approaches to help ensure that generative AI does not negatively impact cloud migration plans:

- Engage the Cloud Center of Excellence (CCoE) in generative AI data governance.

- Organize, document, and approve the data architecture supporting generative AI initiatives.

- Revisit cloud cost and utilization estimates in the context of generative AI.

Many customers use AWS architecture and services to achieve these goals.

Technical Controls

Taking a proactive governance approach reduces data risks and builds trust with business leaders looking to leverage generative AI. To govern generative AI data effectively, use these AWS services and technical capabilities:

- Amazon Bedrock: Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies. It provides a broad set of capabilities for building generative AI applications, simplifying development while maintaining privacy and security. The default architecture of Amazon Bedrock isolates the service itself within AWS-owned and AWS-operated accounts (Figure 1). When customers run a model customization job, the resulting model is copied to the customer account and remains private. Customer models and input data are never used to train other models. We recommend that the copy be in a dedicated VPC.

- Amazon Virtual Private Cloud (VPC): Services can be isolated within an Amazon VPC where all networking configuration is controlled by the customer.

- AWS Accounts: AWS accounts are a security boundary, and data held within one account is not accessible to other accounts by default (Figure 2).

- Amazon Simple Storage Service (Amazon S3) Bucket Policies: S3 bucket policies provide explicit, fine-grained access controls that restrict access to data (Figure 1 and Figure 2).

- Encryption: All calls to the Amazon Bedrock API, whether made programmatically or through the AWS Management Console, are encrypted in transit. Amazon Bedrock data is encrypted by default with AWS Key Management Service (AWS KMS), and customers can use a customer managed key if desired. For details, see "Encryption of model customization jobs" in the Amazon Bedrock User Guide.
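
The encryption and dedicated-VPC recommendations above can be combined when a customization job is submitted. The Python sketch below assembles request parameters whose field names follow the Amazon Bedrock `CreateModelCustomizationJob` API: `customModelKmsKeyId` names a customer managed key and `vpcConfig` names customer-controlled subnets and security groups. It is a sketch, not a complete job definition: the job name, ARNs, IDs, S3 URIs, base model identifier, and hyperparameter value are hypothetical placeholders, and valid hyperparameters depend on the base model chosen.

```python
import json


def build_customization_request(
    job_name: str,
    base_model_id: str,
    role_arn: str,
    kms_key_arn: str,
    training_s3_uri: str,
    output_s3_uri: str,
    subnet_ids: list[str],
    security_group_ids: list[str],
) -> dict:
    """Assemble parameters for a Bedrock model customization job.

    Field names follow CreateModelCustomizationJob; every value is a
    caller-supplied placeholder.
    """
    return {
        "jobName": job_name,
        "customModelName": f"{job_name}-model",
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        # Encrypt the custom model with a customer managed KMS key.
        "customModelKmsKeyId": kms_key_arn,
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # Hypothetical value; valid hyperparameters depend on the base model.
        "hyperParameters": {"epochCount": "1"},
        # Route the job's data access through customer-controlled networking.
        "vpcConfig": {
            "subnetIds": subnet_ids,
            "securityGroupIds": security_group_ids,
        },
    }


# All identifiers below are hypothetical placeholders.
request = build_customization_request(
    job_name="example-genai-job",
    base_model_id="example.base-model-v1",
    role_arn="arn:aws:iam::111122223333:role/ExampleBedrockCustomizationRole",
    kms_key_arn="arn:aws:kms:us-east-1:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab",
    training_s3_uri="s3://example-genai-training-data/train/",
    output_s3_uri="s3://example-genai-training-data/output/",
    subnet_ids=["subnet-0123456789abcdef0"],
    security_group_ids=["sg-0123456789abcdef0"],
)
print(json.dumps(request, indent=2))
```

Under these assumptions, the resulting dictionary could be passed to the AWS SDK for Python, for example `boto3.client("bedrock").create_model_customization_job(**request)`; check the current API reference for required fields before relying on it.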

Customers may choose to run Amazon Bedrock in a single account (Figure 1) or in a multi-account architecture (Figure 2).

Figure 1 – Example Data Isolation Architecture in a Single Account

To separate generative AI data from migration infrastructure, configure access to Amazon Bedrock from one or more dedicated Amazon VPCs (Figure 1). Control data access for generative AI through an S3 VPC endpoint and an associated customer-defined endpoint policy, which together restrict access to the appropriate buckets. Combined with a bucket policy on the source S3 buckets, these controls isolate data to the roles and services that use Amazon Bedrock.

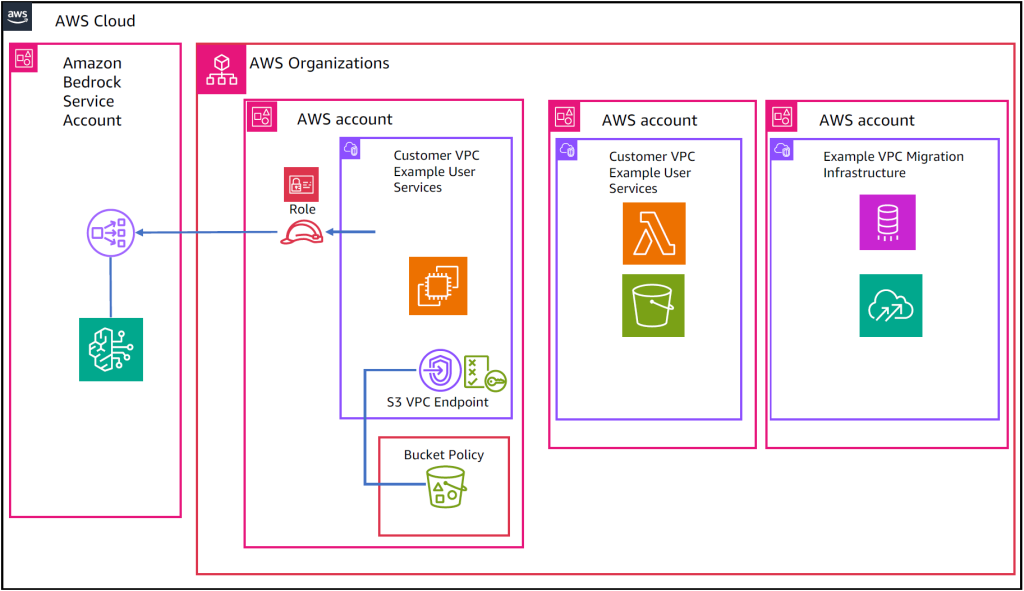
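
The endpoint and bucket controls described for Figure 1 can be expressed as IAM policy documents. The Python sketch below builds both as dictionaries: an S3 VPC endpoint policy that permits access only to a single generative AI bucket, and a bucket policy that denies requests that do not arrive through that endpoint (`aws:SourceVpce`) or do not come from an approved role (`aws:PrincipalArn`). The bucket name, endpoint ID, role ARN, and chosen S3 actions are hypothetical. Broad `Deny` statements like these also block administrators and any service access path that does not use the endpoint, so a real policy would typically need carefully reviewed exceptions.

```python
import json

# Hypothetical identifiers; substitute values from your own environment.
GENAI_BUCKET = "example-genai-training-data"
VPC_ENDPOINT_ID = "vpce-0123456789abcdef0"
GENAI_ROLE_ARN = "arn:aws:iam::111122223333:role/ExampleGenAIRole"


def bucket_resources(bucket: str) -> list[str]:
    """ARNs for the bucket itself and every object in it."""
    return [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]


def endpoint_policy(bucket: str) -> dict:
    """S3 VPC endpoint policy: requests through the endpoint may reach only this bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowGenAIBucketOnly",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
                "Resource": bucket_resources(bucket),
            }
        ],
    }


def bucket_policy(bucket: str, vpce_id: str, role_arn: str) -> dict:
    """Bucket policy: deny requests not made via the endpoint or not from the approved role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideGenAIEndpoint",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": bucket_resources(bucket),
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            },
            {
                "Sid": "DenyUnapprovedPrincipals",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": bucket_resources(bucket),
                "Condition": {"ArnNotEquals": {"aws:PrincipalArn": role_arn}},
            },
        ],
    }


ep = endpoint_policy(GENAI_BUCKET)
bp = bucket_policy(GENAI_BUCKET, VPC_ENDPOINT_ID, GENAI_ROLE_ARN)
print(json.dumps(ep, indent=2))
print(json.dumps(bp, indent=2))
```

Keeping the endpoint policy (what the VPC can reach) separate from the bucket policy (who can reach the bucket, and from where) means each side restricts access independently, which matches the layered isolation shown in Figure 1.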

Figure 2 – Example Data Isolation Architecture using Multiple Accounts

Implementing a multi-account structure with AWS Organizations (Figure 2) adds another level of control over generative AI data. This architecture provides the same security controls as the single-account architecture and adds capabilities for account isolation and service control. Customers can dedicate an AWS account to Amazon Bedrock and control access to that account through cross-account roles, ensuring that only trusted principals can access the service. Service control policies (SCPs) in AWS Organizations can also block access to Amazon Bedrock from other accounts.
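
The multi-account controls can also be sketched as policy documents. The Python example below builds a service control policy that denies all `bedrock:*` actions unless the calling principal belongs to the dedicated generative AI account (using the `aws:PrincipalAccount` condition key), plus a trust policy for a role in that account that only one trusted role in another account can assume. The account IDs and role ARN are hypothetical, and the sketch assumes the SCP is attached at a level, such as the organization root or an OU, that covers the accounts to be restricted.

```python
import json

# Hypothetical account ID and role ARN.
GENAI_ACCOUNT_ID = "111122223333"  # dedicated Amazon Bedrock account
TRUSTED_ROLE_ARN = "arn:aws:iam::444455556666:role/ExampleWorkloadRole"


def deny_bedrock_outside_account_scp(allowed_account_id: str) -> dict:
    """SCP: deny Amazon Bedrock actions unless the caller belongs to the allowed account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # SCPs do not take a Principal element; they apply to the
                # accounts they are attached to.
                "Sid": "DenyBedrockOutsideDedicatedAccount",
                "Effect": "Deny",
                "Action": "bedrock:*",
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:PrincipalAccount": allowed_account_id}
                },
            }
        ],
    }


def cross_account_trust_policy(trusted_role_arn: str) -> dict:
    """Trust policy for a role in the Bedrock account that one external role may assume."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": trusted_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


scp = deny_bedrock_outside_account_scp(GENAI_ACCOUNT_ID)
trust = cross_account_trust_policy(TRUSTED_ROLE_ARN)
print(json.dumps(scp, indent=2))
print(json.dumps(trust, indent=2))
```

Because SCPs never grant permissions, principals in the dedicated account still need identity-based policies that allow the Amazon Bedrock actions they use.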

Business Solutions

Engage the CCoE

Elevate generative AI discussions within the Cloud Center of Excellence (CCoE). The CCoE serves as the central governance body overseeing an organization’s cloud strategy, adoption, and implementation. Generative AI is a capability that supports line-of-business solutions as well as broader corporate strategy, so it fits squarely within the CCoE’s cloud implementation governance function. The CCoE must take an enterprise-wide view of how emerging technologies like generative AI may affect current and future cloud migration plans. New technologies are often seen as point solutions for specific lines of business, but generative AI has broad implications that need to be examined holistically. When internal stakeholders have different goals for a new technology, the CCoE’s governance function becomes crucial to organizing efforts and achieving strategic outcomes.

The CCoE needs to analyze how generative AI solutions can integrate into existing cloud architectures and migration roadmaps. This includes identifying dependencies between generative AI and target cloud platforms, as well as policy and compliance factors. Because generative AI services are updated rapidly, the CCoE must track product changes and adjust migration plans accordingly. If an organization does not have a CCoE or equivalent body, AWS can provide guidance on best practices.

As part of ongoing cloud migration governance, the CCoE should open or revisit questions about data security, compliance, access management, and jurisdictional data concerns. For data destined for generative AI training, it needs to clarify policies on data anonymization, synthetic data, and technical safeguards for digital privacy. Large language models (LLMs) and foundation models (FMs) are trained on vast amounts of input data. If organizations customize their models, data may need to be anonymized so that confidential data, such as health data, employee data, or other sensitive documentation, is not used and digital privacy policies are respected. This matters because LLMs are not databases, and it is extremely difficult to remove data from a trained model. Awareness of these policies is also imperative given privacy regulations (e.g., GDPR) that restrict cross-border data flows. Synthetic data, which is artificially created data used for machine learning (ML), offers one way to address concerns about confidential data.
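
As a minimal illustration of the anonymization step, the Python sketch below masks a few common identifier patterns before text is added to a customization dataset. The pattern set and placeholder labels are hypothetical and far from exhaustive; pattern-based redaction is only one masking technique and does not by itself guarantee that data is anonymized under regulations such as GDPR.

```python
import re

# Hypothetical patterns; a real pipeline needs rules matched to its own data
# and regulatory obligations. Order matters: patterns are applied in sequence.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each pattern match with a typed placeholder such as [EMAIL]."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


record = "Contact jane.doe@example.com or 555-867-5309; SSN 123-45-6789."
print(redact(record))  # Contact [EMAIL] or [PHONE]; SSN [US_SSN].
```

Typed placeholders keep the structure of the text useful for training while removing the identifying values themselves.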

Organize Generative AI Architecture

Organizations should raise questions about data migration, data sovereignty, and data governance in the context of generative AI. Migrations move data and services from one place to another. Generative AI services such as Amazon Bedrock have data isolation built into their base architecture (Figure 1 and Figure 2), but this is not true of all generative AI providers. Even where a service provides data isolation, broader data governance strategies are still needed: foundational practices continue to apply, and generative AI introduces new complexities with regard to rights, access, and jurisdiction over data. As use of generative AI services grows and affects cloud migration plans, organizations need to update their data management policies. Customers need to know how they will manage the architecture that supports both newly generated data and the data used to train generative AI.
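
One way to document and approve this data architecture is to keep an inventory of every dataset that feeds, or is produced by, generative AI workloads, and to check each entry against governance rules before use. The Python sketch below is hypothetical throughout: the `DatasetRecord` fields, the allowed-Region set standing in for a jurisdiction policy, and the blocking rules are illustrative assumptions rather than an AWS feature.

```python
from dataclasses import dataclass

# Hypothetical jurisdiction policy: Regions approved for generative AI data.
ALLOWED_REGIONS = {"us-east-1", "us-west-2"}


@dataclass
class DatasetRecord:
    """One entry in a hypothetical inventory of generative AI data."""

    name: str
    s3_uri: str
    owner: str
    region: str
    origin: str  # "source" (input to training) or "generated" (model output)
    contains_personal_data: bool
    approved_for_training: bool = False


def training_blockers(ds: DatasetRecord) -> list[str]:
    """Return every reason this dataset may not yet be used for model customization."""
    reasons = []
    if not ds.approved_for_training:
        reasons.append("not approved by data governance")
    if ds.region not in ALLOWED_REGIONS:
        reasons.append(f"region {ds.region} is outside approved jurisdictions")
    if ds.contains_personal_data:
        reasons.append("contains personal data; anonymize or use synthetic data")
    return reasons


hr_docs = DatasetRecord(
    name="hr-policies",
    s3_uri="s3://example-genai-training-data/hr/",
    owner="people-analytics",
    region="eu-west-1",
    origin="source",
    contains_personal_data=True,
)
print(training_blockers(hr_docs))
```

Returning all blockers at once, rather than stopping at the first, gives the approving body a complete picture of what must change before a dataset can be used.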

Update Migration Financial Estimates

Finally, adopting generative AI solutions may alter the cost structure of cloud migrations. Changing costs require business leaders to realign stakeholder expectations and maintain buy-in for the migration. Many migrations are planned around transformational targets such as rehosting workloads or refactoring monolithic applications. The cost estimates for these targets factor in the level of effort to re-platform or re-architect on the cloud. Integrating generative AI capabilities may require additional development and services that increase migration costs beyond original estimates. To proactively address concerns about service costs and utilization, use AWS resource tagging, and ensure that the tagging architecture developed for the migration accounts for generative AI spend.
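
The tagging recommendation can be sketched in two parts: validating that resources carry the migration's required tags, and rolling up spend by a tag so that generative AI costs are visible next to other migration workloads. The tag keys, tag values, and cost line items below are hypothetical. In practice, cost data would come from AWS billing sources such as AWS Cost Explorer grouped by tag, and user-defined tags must be activated as cost allocation tags before they appear there.

```python
from collections import defaultdict

# Hypothetical tagging standard for the migration, extended for generative AI.
REQUIRED_TAGS = {"cost-center", "migration-wave", "workload-type"}


def missing_tags(resource_tags: dict[str, str]) -> set[str]:
    """Required tag keys that a resource does not carry."""
    return REQUIRED_TAGS - set(resource_tags)


def spend_by_tag(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of tag_key; items without the tag go under 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)


# Hypothetical monthly cost line items.
line_items = [
    {"cost": 120.0, "tags": {"workload-type": "generative-ai", "migration-wave": "2"}},
    {"cost": 30.5, "tags": {"workload-type": "generative-ai", "migration-wave": "3"}},
    {"cost": 200.0, "tags": {"workload-type": "rehost", "migration-wave": "1"}},
    {"cost": 10.0, "tags": {}},
]

print(missing_tags({"workload-type": "generative-ai"}))
print(spend_by_tag(line_items, "workload-type"))
```

Reporting an explicit "untagged" bucket makes gaps in tag coverage visible instead of silently dropping that spend from the generative AI total.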

Final Thoughts

To get started, migration planners should establish open communication channels with migration sponsors and budget owners to discuss generative AI. Sponsors and other stakeholders must understand the use cases that may affect the cloud migration. As generative AI use cases continue to emerge, the CCoE can provide realistic assessments of the added migration costs. This sets clear expectations about what can be achieved within current budgets and what requires additional funding. The CCoE can also help prioritize high-value generative AI integrations over nice-to-haves. Building awareness of cost impacts enables stakeholders to resource migrations properly as new technologies like generative AI continue to evolve.
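
To make the added-cost assessment concrete, the Python sketch below estimates monthly on-demand inference spend from request volume and token counts, reflecting the token-based pricing Amazon Bedrock uses for on-demand inference. The request volume, token counts, per-1,000-token prices, and budget headroom are hypothetical placeholders, not actual AWS prices; current rates vary by model and Region and should be taken from the Amazon Bedrock pricing page.

```python
from decimal import Decimal


def monthly_inference_cost(
    requests_per_day: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    price_per_1k_input: Decimal,
    price_per_1k_output: Decimal,
    days: int = 30,
) -> Decimal:
    """Estimate on-demand inference spend, rounded to cents."""
    per_request = (
        Decimal(input_tokens_per_request) / 1000 * price_per_1k_input
        + Decimal(output_tokens_per_request) / 1000 * price_per_1k_output
    )
    return (per_request * requests_per_day * days).quantize(Decimal("0.01"))


# Hypothetical workload and prices (not actual AWS rates).
estimate = monthly_inference_cost(
    requests_per_day=10_000,
    input_tokens_per_request=1_000,
    output_tokens_per_request=500,
    price_per_1k_input=Decimal("0.003"),
    price_per_1k_output=Decimal("0.015"),
)
budget_headroom = Decimal("2500.00")  # hypothetical unallocated migration budget

print(f"Estimated monthly inference cost: ${estimate}")  # $3150.00
if estimate > budget_headroom:
    print(f"Exceeds current headroom by ${estimate - budget_headroom}; needs additional funding")
```

`Decimal` avoids binary floating-point rounding in currency arithmetic, and comparing the estimate with remaining headroom separates what fits the current budget from what needs additional funding.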

Cloud migration governance is essential to harnessing the promise of generative AI while avoiding potential pitfalls. Elevating generative AI discussions in the CCoE, revisiting migration costs, and iterating on cloud architecture are three ways organizations can respond. The CCoE serves as the connective tissue that brings these activities together under coordinated governance, supported by AWS architectural tools and services that build guardrails for generative AI solutions. With proactive planning, enterprises can fully leverage generative AI and cloud synergies to accelerate their business transformation.


About the Author