Releasing a new paper on openness and artificial intelligence

For the past six months, the Columbia Institute of Global Politics and Mozilla have been working with leading AI scholars and practitioners to create a framework on openness and AI. Today, we are publishing a paper that lays out this new framework.

During earlier eras of the internet, open source technologies played a core role in promoting innovation and safety. They provided a set of building blocks that software developers have used to do everything from creating art to designing vaccines to developing apps used by people all over the world; open source software is estimated to be worth over $8 trillion. Attempts to limit open innovation, such as export controls on encryption in early web browsers, ended up being counterproductive, further demonstrating the value of openness.

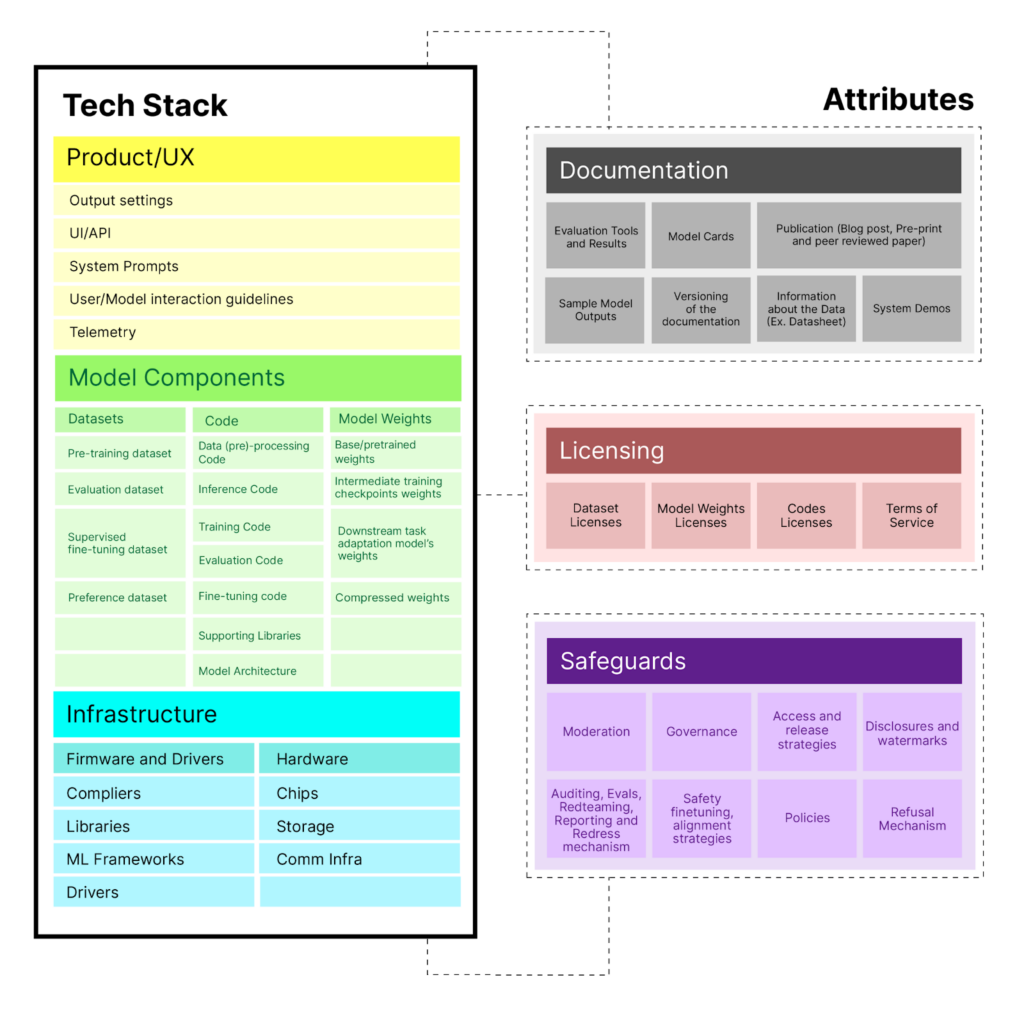

Today, open source approaches for artificial intelligence, and especially for foundation models, offer the promise of similar benefits to society. However, defining and enabling “open source” for foundation models has proven tricky, because building these models differs significantly from traditional software development. This lack of clarity has made it harder to recommend specific approaches and standards for how developers should advance openness and unlock its benefits. Additionally, conversations about openness in AI have often operated at a high level, making it harder to reason about the benefits and risks of openness in AI. Some policymakers and advocates have blamed open access to AI for certain safety and security risks, often without concrete or rigorous evidence to justify those claims. On the other hand, people often tout the benefits of openness in AI without specifying how to actually harness those opportunities.

That’s why, in February, Mozilla and the Columbia Institute of Global Politics brought together over 40 leading scholars and practitioners working on openness and AI for the Columbia Convening. These individuals — spanning prominent open source AI startups and companies, nonprofit AI labs, and civil society organizations — focused on exploring what “open” should mean in the AI era.

Today, we are publishing a paper that presents a framework for grappling with openness across the AI stack. The paper surveys existing approaches to defining openness in AI models and systems, and then proposes a descriptive framework to understand how each component of the foundation model stack contributes to openness. It enables, without prescribing, an analysis of how to unlock specific benefits from AI, based on desired model and system attributes. The paper also adds clarity to support further work on this topic, including work to develop stronger safeguards for open systems.

We believe this framework will support timely conversations across the technical and policy communities. For example, this week, as policymakers discuss AI policy at the AI Seoul Summit 2024, this framework can help clarify how openness in AI can support societal and political goals, including innovation, safety, competition, and human rights. As the technical community continues to build and deploy AI systems, this framework can help AI developers ensure that their systems achieve their intended goals, promote innovation and collaboration, and reduce harms. We look forward to working with the open source and AI community, as well as the policy and technical communities more broadly, to continue building on this framework.