Sanctuary AI CEO – the robots really are coming! Thanks to transformer AI

Hardware robotics and AI have long been on parallel journeys, with AI in the fast lane and robotics in the slow. This decade, AI has moved at internet speed (witness the last 18 months’ progress in generative systems alone), while robots have been lumbering along for years, slowly finding their feet as one engineering challenge gives way to the next.

Some robots can now walk, run, jump, and climb stairs, but so what? What are they for?

But all that is set to change as the term ‘robotics’ is gradually replaced by another: ‘embodied AI’, a sign that the technologies’ separate tracks are merging. The speed and breadth of AI development this decade has pulled robotics into its slipstream on a shared journey towards the future.

Robots of every kind are now becoming the means by which AI interacts with the physical world, while AI is fast becoming the way that robots understand that world – at a speed that even one industry CEO finds surprising. As a result, the robots may finally be coming, and in a form that is surprisingly close to our century-old science-fiction conception: intelligent artificial beings that can carry out any task.

When I last spoke to Geordie Rose, CEO of Canadian robotics firm Sanctuary AI, he was dismissive of moves by the likes of Engineered Arts to put ChatGPT into robots, as the British company did with its Ameca entertainment humanoid last year. Rose described that idea as “trivial”, little more than a “parlour trick” to simulate intelligence and wow punters at trade fairs: the robot as ventriloquist dummy, perhaps. It was something any coder could do in 30 minutes, he said.

But a lot has happened at Sanctuary AI since we spoke last summer. Just this month, for example, there has been an undisclosed investment from Accenture; a partnership with automotive manufacturing giant Magna; and the rollout this week of version 7.0 of its Phoenix humanoid.

But most of all, AI has been at the heart of the company’s shift into the fast lane, and towards real enterprise use cases. Rose says:

The tremendous advances in artificial intelligence have completely transformed things for us. The remarkable thing is that these advances are directly applicable to robotics, which was a surprise even to me!

The investment in AI, the breakthroughs on the algorithmic side, and the understanding of how much data you need to make these models work…all that stuff is the main difference between when we last spoke and today.

In our second 2023 conversation, Rose pooh-poohed the use of Large Language Models [LLMs] and ChatGPT in robotics. Given everything that has happened since, was he wrong to do so? He says:

No, it was a parlour trick! But the broad category of AI model that is applicable to robots is similar to the way that LLMs work. The central premise is that you train a model to predict the next thing that will happen. So, in language, that would be the next word, for example. But for robotics it is all about unrolling into the future.

[With LLMs] you can think about a sentence as the next word, the next word, and the next. So, language models are able to continually unpack into the future and generate coherent text. But now think about your own experience in the physical world instead. There, instead of text, you have all the data from your senses and actions: vision, hearing, touch, and proprioception. And you have movement, or how you change where your body is in the world.

All those things can be thought of as simply data. And if you collect enough data – experiential data from the perspective of a person or robot – then you can do the same type of thing that an LLM does. You can use that data to train those models, where movement is now a series of predictions about where your body – or a robot’s body – will be.

So, you move a robot in the same way that you would generate a sentence. And you use that data to predict what will happen in the future – assuming that what will happen is similar to the data you already have.
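Rose's analogy between generating a sentence and generating movement can be illustrated with a minimal sketch of a generic autoregressive loop. Each prediction is appended to the sequence and fed back in as context for the next one. The two "models" below are hypothetical toys standing in for trained networks: a fixed bigram table for words, and a constant-velocity guess for a hand position in millimetres. Neither is Sanctuary AI's method.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def rollout(predict_next: Callable[[Sequence[T]], T], history: List[T], steps: int) -> List[T]:
    """Autoregressively extend `history` by `steps` predictions.

    Each prediction is appended and then used as context for the next one:
    the 'next word, the next word, and the next' loop.
    """
    sequence = list(history)
    for _ in range(steps):
        sequence.append(predict_next(sequence))
    return sequence


# Hypothetical toy language model: a fixed bigram lookup table.
BIGRAMS = {"the": "robot", "robot": "picks", "picks": "up", "up": "the"}


def next_word(words: Sequence[str]) -> str:
    return BIGRAMS.get(words[-1], "<end>")


# Hypothetical toy 'body' model: assume the hand keeps its most recent
# velocity, so next position = last + (last - previous), in millimetres.
def next_position(positions: Sequence[int]) -> int:
    return positions[-1] + (positions[-1] - positions[-2])


print(rollout(next_word, ["the"], 4))      # ['the', 'robot', 'picks', 'up', 'the']
print(rollout(next_position, [0, 10], 3))  # [0, 10, 20, 30, 40]
```

Only the prediction function changes between the two cases; the loop is identical, which is the point of the analogy. A real system would condition on far richer sensor and action data than a single number, and its predictions hold only as long as the future resembles the training data.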

He adds:

One way to model human intelligence is simply to capture all the data from the experience of a person, or a robot. And if you collect enough of it, then it is essentially seeing everything there is, so it can predict what should happen next, given the context.

The thesis is that this is one way to get to real artificial general intelligence [AGI], which is the goal of Sanctuary.

As it is for OpenAI and others, of course. So, you can see how the once-separate goals of robotics and AI companies are now aligned – and why it is ‘Sanctuary AI’, not ‘Sanctuary Robotics’.

From his explanation, therefore, it seems that Rose is essentially talking about applying transformer AI models to humanoid robots (which could also embody LLMs, where necessary to receive or impart information). He says:

Yes, or sequence-to-sequence models, where the first element of the sequence is in the past and the second is what should happen in the future, conditioned by what you just saw.

For us, transformer models are a type of sequence-to-sequence model that has a bunch of innovation attached to it. One of the most important elements is the attention model – learning what about the past is most relevant in a given context. Again, in language, that might mean picking out certain tokens or words that are more important to an answer than others.

But with embodied cognition or embodied AI – robots – attention is even more important, because there’s a lot more data flowing in, which you have to make sense of in a very short space of time. For example, if I’m standing on the savannah and a lion is coming towards me, that is more important visual input in that context than how the trees and clouds are moving!

And even with other models, like diffusion [in generative images], the central premise is the same. And that is, with data – or mostly from data – you can use what just happened to predict what will happen next. And from there, you can get to what you want.

In robotics, these models are supposed to give a machine enough information about the world to know what to do next to achieve a goal. Then you reach a new past, in that sequence, which is what actually happened, and not necessarily what you thought would happen.
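The attention idea in Rose's lion example can be sketched with standard scaled dot-product attention weights, softmax(q·k/√d). The three-number feature vectors and the "threat" query below are invented for illustration (in a trained transformer they would be learned), so this is a toy under stated assumptions, not a model of any real robot's perception.

```python
import math
from typing import Dict, List


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: List[float], keys: Dict[str, List[float]]) -> Dict[str, float]:
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d))."""
    d = len(query)
    names = list(keys)
    scores = [sum(q * k for q, k in zip(query, keys[n])) / math.sqrt(d) for n in names]
    return dict(zip(names, softmax(scores)))


# Hypothetical feature vectors: [approaching, large_animal, background_motion].
inputs = {
    "lion": [0.9, 1.0, 0.1],
    "trees": [0.0, 0.0, 0.8],
    "clouds": [0.1, 0.0, 0.9],
}
# Hypothetical query for a 'what could threaten me?' context.
threat_query = [2.0, 2.0, 0.0]

weights = attention_weights(threat_query, inputs)
print(max(weights, key=weights.get))  # lion
```

With these assumed numbers the lion receives about 80% of the weight, and the trees and clouds share the rest. A full attention layer would then use these weights to mix the inputs' value vectors.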

Then he makes a bold, and in some ways shocking, prediction:

And you keep doing this into the future and, in some ways, end up creating a living, sentient being.

What does he mean by that?

On its own, an LLM creates the illusion that a system is understanding what you’re saying, and it responds in the same way. But the types of models I describe are doing something similar with behaviours.

Now, it’s not a person; it’s a robot. But there’s an increasing conviction in the industry that, just by collecting enough data of every kind from robots, you might be able to get to true AGI. Not the kind of washed-out, defeatist version of AGI that some people are pushing, which is just language based, but actual intelligence of the sort that humans possess: the ability to navigate the world and predict it.
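The predict, act and observe cycle Rose describes earlier (the model chooses an action from its prediction, then what actually happened becomes the new past) can be sketched as a simple closed loop. Everything here is hypothetical: a one-dimensional arm position in millimetres, a "learned" model that assumes every move is exact, and a stand-in for the real world in which every move lands 1 mm below the commanded position.

```python
from typing import List


def predict(position: int, action: int) -> int:
    # Hypothetical learned model: assumes the arm moves exactly `action` mm.
    return position + action


def actual_outcome(position: int, action: int) -> int:
    # Hypothetical stand-in for the real world: any non-zero move
    # lands 1 mm below the commanded position.
    return position + action - 1 if action else position


def control_loop(start: int, goal: int, max_steps: int = 10) -> List[int]:
    history = [start]
    candidate_actions = [-5, -1, 0, 1, 5]
    for _ in range(max_steps):
        current = history[-1]
        if current == goal:
            break
        # Choose the action whose *predicted* outcome lands closest to the goal.
        action = min(candidate_actions, key=lambda a: abs(predict(current, a) - goal))
        # Record what actually happened, not what was predicted: the new past.
        history.append(actual_outcome(current, action))
    return history


print(control_loop(0, 12))  # [0, 4, 8, 12]
```

The model expects each 5 mm move to land exactly, yet the recorded history is `[0, 4, 8, 12]`, because every step is replanned from the observed position rather than the predicted one.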

Two sides of the same coin

So, in less than a year, Rose has gone from being dismissive of a certain type of AI to believing that the broad category of sequence-to-sequence or transformer models is critical to shifting robots’ development into the fast lane, and into the future.

Not only that, but he is suggesting that AGI will naturally emerge from this process over time, giving rise not to a conscious, sentient being, perhaps, but to a model of one that is so accurate – and perhaps superior, given the vast amount of data it would have access to – that the distinction becomes irrelevant.

A challenging thought, as it encroaches on many humans’ belief in souls, and in being the apex of creation. The lurking implication that we might each just be a one-off biological transformer made from our parents’ genes may threaten our collective peace of mind.

Existential nightmares aside, why would AGI be necessary for a machine that might, for example, only carry out routine, repetitive tasks? What would human-like intelligence add to such a machine in hard commercial terms? What functions might it then perform? And, frankly, why not just employ a person?

Rose says:

On the question of why you’d want AGI to emerge in robots: even in very controlled work environments, a tremendous amount of judgement is necessary, as is adapting to new circumstances. But humans don’t notice it, because we’re so good at it!

Even something as simple as an object that I’m supposed to pick up and put in a box is not always in exactly the same place. That type of scenario requires generalization, which means you have to understand the world well enough to know ‘what is a cup?’ and ‘where is the cup?’ and be able to adapt your actions accordingly.

And all of that is the crack in the doorway that lets in the light of general intelligence. Once you have started solving problems like that, you’ve begun a journey that is going to end in something that’s like a person. It’s inevitable.

So, the need for AGI in the sort of robots that we’re interested in is absolutely critical. You can’t build a humanoid that generates value in the world unless you’ve built a software control system that has general intelligence.

I have explored the need for some machines to have a humanoid form factor in previous reports: essentially, it is because they would have to work in a world that has been designed for, and by, humans. But beyond the inevitability, in Rose’s view, of robots becoming intelligent, why even embark on that journey?

He says:

With an intelligent humanoid, you could put it in any situation you can imagine, and it would figure it out. But without pairing a humanoid with a general intelligence, that whole idea falls apart.

Part of the reason why people have such a hard time wrapping their heads around the humanoid form factor is they don’t understand the fundamental connection between these things. Neither a human-like machine, in design terms, nor an AGI on its own is going to give us great value.

If you just build an AGI that’s a digital thing, it’s going to be trapped in the digital world and won’t be able to understand our world in the way we do. For example, an AI that’s just trained on text can never understand what that text actually means. It can’t know what a rose smells like!

To understand everything about the world you need to be in the world. So, AGI and embodiment go together. They are two sides of the same coin, in that neither makes sense without the other.

Even so, it seems extraordinary that the CEO of a robotics and AI company has experienced a Damascene conversion on what, previously, he had believed was a long and arduous road. He admits:

I’m surprised at how well data-driven approaches work. My original idea was, ‘you’re going to have to build this complex brain, a cognitive architecture with all these different parts and technologies interweaving’. But now it looks like we might be able to avoid all that and just use data!

But that is alarming in one sense, because it may reflect on how humans work as sentient, intelligent beings. We may not be as complicated as everyone thinks, but just a simple algorithm! Now, I don’t think that’s true, by the way. But it makes you think about the possibilities.

More of them than us

Having started down this road, Rose now believes that humanoid robotics is no longer the market that cynics thought it was – faddy, impressive, entertaining, but ultimately expensive and pointless – but the biggest opportunity on Earth. He explains:

What if we could build a machine that is just like a person? Why wouldn’t that be valuable? And if you didn’t participate in that transformation, then you would be like the horse and buggy guys back when cars were introduced.

It’s just a matter of timescale. Eventually, if this vision comes to pass, these things will be everywhere. There’ll be more of them than people on the planet!

But why? Why not just train and employ people?

Imagine you had a machine that could do whatever you needed it to – build your house, mow your lawn, and tell you about legal things, while having all of the medical profession’s knowledge at its fingertips – literally. Imagine you had the world’s best lawyer, doctor, teacher, engineer, and scientist, and you could have it in your house for the cost of a car. Why wouldn’t you? And it would be much more valuable than a car!

Then eventually, the robots would be the ones populating the Moon or Mars – it’s not going to be people out there. And if we then wanted to explore the universe further, obviously it’s going to be machines, because we couldn’t survive the trip.

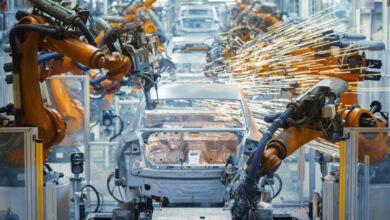

But long before then, robots will appear in factories. Hence the partnership with automotive giant Magna, which is working with Sanctuary AI to develop an evolution of Phoenix – now at version 7.0 – which can work in the sector. And hence the investment from Accenture, which now sees real enterprise potential in the idea.

Rose says:

First, it’s going to be manufacturing and logistics. Plus, ecommerce distribution centers, where there are already thousands of robots. This will simply be a new kind of robot that does things a little differently. That’s how it will start. And how it will grow.

Then, in those environments, the systems will continually iterate to get better and better. And at some point, the technology will be good enough to start using it for things that are not in such highly structured environments.

So, is Elon Musk on the right path with Tesla’s Optimus humanoids, which some reports suggest could be on sale before the end of this year?

Rose laughs and says:

Anybody who tries to take a technology like this and penetrate the home with it first is going to flame out and die. That is a terrible idea!

My take

When it comes to robots, we might have the Moon. But your living room? That’s a step too far – for now. In the meantime, consider this: the real transformers are us. Who knew?