Search will never be the same

If you’ve been online since the mid-90s, you’ll remember the chaos of pre-Google search, perhaps best exemplified by Dogpile: a search engine that literally searched all the other search engines for you, just to give you some hope of finding what you wanted.

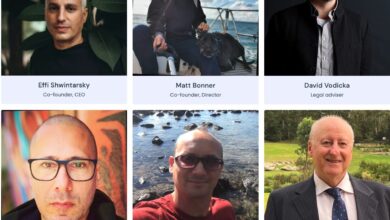

Google is the colossal multinational octopus it is today because way back then, Page and Brin got search right. Google instantly became a verb, and has owned around 90% of humanity’s access to the wider Web for the last two and a half decades. Google Search has evolved continually over this time, but as the AI dawn breaks in 2024, and Google watches OpenAI continually throwing grenades into entire industries, its flagship product is about to undergo the biggest shift in its history.

“Now, with generative AI, search will do more for you than you’ve ever imagined,” said Head of Search Liz Reid at Google’s I/O keynote yesterday. “Whatever’s on your mind, whatever you need to get done, just ask, and Google will do the googling for you.”

Generative AI Overviews become the backbone of Search

This update, which is beginning to roll out in the USA and will soon expand globally, fundamentally changes the way Google Search presents information. Search, Google has decided, will now run through its Gemini AI. Google hopes people will stop searching for keywords and start asking it questions instead. And rather than putting together a simple, clean list of links, the new AI-powered Search will attempt to draw together information from across the Web and answer that question with a short, customized report called an AI Overview.

In many cases, it’ll go find the information you’re after and bring it back in a succinct summary – this is terrible news for websites built around fishing for search traffic, since the user won’t need to click through nearly as often to find an answer.

Search in the Gemini era | Google I/O 2024

In other cases, Gemini will answer a complex question and provide a nicely formatted bunch of links for options or further information; it really depends on what the user is looking for.

The changes don’t stop there. Google is really shifting Search into prime position as your personal AI assistant or agent, which can not only answer questions but also use multi-step reasoning to help with planning. Eventually, it’ll start executing tasks on your behalf, too. Reid demonstrated its ability to create and tailor a family meal plan, for example, and output a shopping list of ingredients. “Looking ahead,” she mused, “you could imagine asking Google to add everything to your preferred shopping cart. Then, we’re really cooking!”

Searching with Video, Images and Voice

Gemini is a multimodal AI; it’s been trained not only on text, but on images, videos and audio as well, and has a remarkable ability to understand what it sees and hears. So Google Search is getting eyes and ears. You’ll be able to hold up your phone, give Google access to your camera and microphone, and ask it questions about the world around you.

That makes it a zero-effort problem-solver. “Hey, what’s wrong with this thing?” you’ll ask, and Gemini won’t need you to explain what you’re asking about. It’ll work out model numbers, brands and common issues, research them on the Web so you don’t have to, attempt to diagnose the problem by sight, and then put together a troubleshooting list for you.

And it’s all heading toward the same place: Google, like OpenAI, wants to create the AI agents that’ll eventually ride shotgun with you through your entire life, giving you access to a perfect videographic memory of everything you’ve ever done, and every conversation you’ve had, as well as all the vaunted superpowers of generative AI, constantly working to speed you toward your personal goals. A utopia of productivity, a nightmare for privacy – but we’ll use it, because it’ll be indispensable.

As with OpenAI’s GPT-4o, released the day before I/O – and clearly timed to poop Google’s party – Gemini is getting a much more human, much more responsive voice agent. It’s currently known as Project Astra:

Project Astra: Our vision for the future of AI assistants

You could think of this, perhaps, as Google Search breaking through into the real world; the presenter literally asks Google where she’d left her glasses, before switching from her phone’s camera to a pair of smart glasses. But it’s not Google Search. It’s the Gemini AI, with real-time access to Google Search.

Google has repeatedly shot itself in the foot while rolling out its AI offerings, and OpenAI has seemed to get the better of Google nearly every time the two have gone head to head. There’s no doubt that GPT-4o’s assistant is quicker, more expressive and flirtier, and gives me much more of a ‘we’re living in the future’ feeling than Google’s demo did.

But I’m wondering how much that’s going to matter. Along with its Search updates, Google also announced a flurry of other AI products at I/O, including Veo, a generative video system that looks like it’s not far behind OpenAI’s Sora:

Filmmaking with Donald Glover and his creative studio, Gilga | Veo

It may turn out that it doesn’t matter who gets there first with these next-gen multimodal AIs, because it seems any well-resourced AI company might be able to achieve what OpenAI does, given sufficient energy, compute and data. What might matter more is market share. Google has a colossal user base ready to go, through its dominance of web search and its Android mobile operating system – which is used on a daily basis by about 3.5 billion people.

So it’s understandable that Google might shake its very foundations by substantially changing its flagship Search product; AIs, not hand-coded search algorithms, will be the gatekeepers of knowledge in the next phase of the Information Age.

Apparently, Microsoft’s Bing is doing it too.

Check out the Google I/O keynote address in all its glory below.

Google Keynote (Google I/O ’24)

Source: Google