Securing the Next Frontier of AI Innovation

AI will soon be deeply embedded into the foundation of business – and we can’t afford to make security a retrofit.

By Kevin Skapinetz | Vice President, Security Strategy, IBM

May 06, 2024

Generative AI holds enormous potential to transform business operations and our daily lives. But ultimately, AI’s potential hinges on trust. If trust in AI is compromised, it could throttle investment, adoption, and our ability to rely on these systems for their intended purpose, turning AI’s promise into liability and sunk costs.

Just as the industry worked to secure servers, networks, applications and the cloud in the past, AI is the next big platform we need to secure. But this time, we can’t afford to fail, because generative AI will soon become deeply embedded into the very fabric of business: the foundation on which critical decisions, operations and customer interactions are built. If we don’t weave security into that foundation from the outset, ongoing breaches of trust can quickly erode it.

This is why we must embed security into our AI models, and into the application stack that AI is revolutionizing, today, while we are still early in its adoption curve. In other words, security must be part of the ground crew as companies move forward with AI planning and investments.

While many view security as a roadblock on this journey, we believe security can actually help create the trust needed to move AI use cases more quickly from proof of concept into production.

To help make this a reality, IBM is publishing new data to provide a better understanding of current C-Suite perspectives when it comes to securing generative AI. Additionally, we’ve published a framework for securing generative AI to help companies navigate and prioritize these security initiatives. IBM also offers one of the most comprehensive data, AI and security portfolios in the industry – designed to help make it easier for companies to embed security and governance into the foundation of their AI-powered business.

Global study reveals C-Suite perspective on securing generative AI

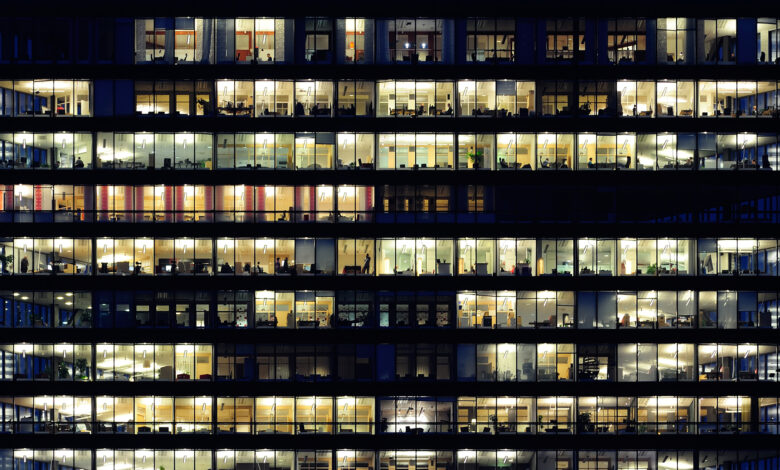

Because most AI projects are being driven by business and operations teams, it’s essential for security leaders to enter these conversations from a risk-driven perspective, with a strong understanding of business priorities.

A new study [1] from the IBM Institute for Business Value sheds light on C-Suite perspectives and priorities when it comes to generative AI risk and adoption, revealing an alarming disconnect between security concerns and demands to innovate quickly. While 82% of respondents acknowledged that secure and trustworthy AI is essential to the success of their business, 69% of those surveyed still said that innovation takes precedence over security.

The study also found that one of the biggest concerns for business leaders is that they don’t know what they don’t know, with generative AI representing a novel field of risk and opportunity:

- Most C-Suite respondents were concerned about new and unpredictable security risks arising from generative AI: 51% of respondents were concerned about unpredictable risks and new security vulnerabilities, and 47% were concerned about new attacks targeting AI.

- Nearly half (47%) expressed uncertainty about where and how much to invest in generative AI across their business operations.

While business leaders acknowledge the importance of secure and trustworthy AI, the unknown nature of this new frontier may be preventing them from taking the steps needed to secure AI today. In fact, less than a quarter (24%) of respondents said they are including security as part of their current generative AI projects.

A framework can help companies navigate and prioritize AI security investments based on risk

To help organizations better understand and navigate these challenges, some of the leading experts across IBM have created a Framework for Securing Generative AI. This framework is designed to help companies understand the threats they are most likely to encounter when it comes to generative AI adoption, and to prioritize defenses accordingly. It can also help companies take a comprehensive view of securing AI, with three core pillars aligned to AI-specific risks:

- Secure the Data: AI poses a heightened data security risk, with highly sensitive data being centralized and accessed for training. Companies must focus on securing the underlying AI training data by protecting it from sensitive data theft, manipulation, and compliance violations.

- Secure the Model Development: Because AI-enabled apps are being built in a brand-new way, the risk of introducing new vulnerabilities increases. Companies should prioritize securing AI model development by scanning for vulnerabilities in the pipeline, hardening integrations, and enforcing policies and access controls.

- Secure the Usage: Attackers will seek to use model inferencing to hijack or manipulate the behavior of AI models. Companies must secure the usage of AI models by detecting data or prompt leakage, and by alerting on evasion, poisoning, extraction, or inference attacks.

Beyond these three pillars, security leaders must remember that one of the first lines of defense is secure infrastructure, and they should optimize security across the broader environment hosting AI systems.

With emerging regulations and public scrutiny of responsible AI, organizations must also have robust AI governance in place to manage accuracy, privacy, transparency and explainability. In this landscape, organizations should seek to integrate security and data governance programs more closely, in order to drive consistent policies, enforcement and reporting between these two historically siloed disciplines.

IBM helps clients embed security into their AI deployments

IBM has decades of leadership in both security and AI for business, allowing us to offer clients both the expertise and a robust portfolio of technologies and services to help them secure their AI journeys. IBM is committed to offerings that build trust and leverage an open architecture, making it easier to connect across security, risk and governance programs.

- IBM Consulting Cybersecurity Services can help organizations navigate their AI transformations with security at the forefront, from planning and development through ongoing monitoring and governance.

- IBM is launching new IBM X-Force Red Testing Services for AI, designed to test the security of generative AI applications, MLSecOps pipelines, and AI models from an attacker-driven perspective. Delivered by a specialized team with deep expertise across data science, AI red teaming and application pen testing, this approach helps pinpoint and address the weaknesses that attackers are most likely to exploit in the real world today.

- IBM Consulting’s new Active Governance Framework helps organizations gain a continuous and comprehensive view of cyber risk, powered by AI and automation. In the AI era, building robust AI governance models and bringing them into cybersecurity programs is essential to help manage enterprise-wide risks, particularly given evolving policies and regulations.

- IBM Software helps clients find and protect sensitive data used in AI deployments, while also securing access to these systems. Two crucial components are:

  - Data Security: Our IBM Security Guardium portfolio helps protect data wherever it resides, including data security posture management designed to help companies find sensitive “shadow” data and manage its movement across applications. Looking forward, we’re working to extend these capabilities to help companies find and protect AI models being used within their business, and to gain an understanding of risk to help speed adoption.

  - Identity and Access: Identity-based attacks were the top entry point for attackers last year,[2] and this risk will become even greater as AI brings together companies’ most critical data and IP, making it crucial to protect access to these systems. IBM Security Verify provides a comprehensive set of identity and access management capabilities, built with an open, vendor-agnostic approach that helps companies create a more consistent “identity fabric” for managing identity risks across their broader legacy and cloud-based technology stack.

IBM also offers our watsonx portfolio for companies to build custom AI applications, manage data sources, and accelerate AI workflows, all from a single platform. Specifically, watsonx.governance is available for companies to govern generative AI models built by any vendor, helping them evaluate and monitor AI model behavior, including drift, bias and quality, and helping them meet their obligations under emerging regulations and policies worldwide.

With AI poised to transform the business landscape as we know it, companies cannot afford to make security a retrofit: it must be built in from the ground level, starting today. With AI’s potential hinging on trust, security may ultimately be the secret ingredient that determines which generative AI projects turn into success stories, and which turn into sunk costs.

Read the Report: Securing Generative AI: What Matters Now

Check out the Framework for Securing Generative AI

Statements regarding IBM’s future direction and intent are subject to change or withdrawal without notice, and represent goals and objectives only.