Senate AI Roadmap Draws Criticism for Its Simplicity

WASHINGTON, May 17, 2024 – Industry leaders are criticizing a Senate working group’s newly released artificial intelligence roadmap for not adequately establishing oversight and accountability for the fledgling technology.

The report, released on Wednesday, builds on a series of AI Insight Forums held over the past year and highlights potential funding and legislation that could make the best use of AI while stemming emerging challenges. It compiles recommendations from industry professionals for policymakers to consider.

Some recommendations include testing AI systems for harmful bias before they are rolled out in high-impact areas, such as health services or the financial sector. The report also suggests mandating AI watermarks to counter misinformation spread through deepfakes and chatbots, as well as providing voter education to build internet literacy. Furthermore, the report encourages industries to adopt employee training to help the private sector put AI to use.

Additionally, the report recommends that legislators adopt a national data privacy standard, as concerns mount over the vast quantities of data required to train AI models. Notably, the report calls for at least $32 billion a year in AI research and development funding, in part to maintain a competitive edge over foreign adversaries.

Chris Lewis, CEO of the advocacy group Public Knowledge, released a statement praising some aspects of the report, particularly its call for a national privacy law and its attention to the harms of deepfakes. However, Lewis, an advocate of AI regulation, expressed concern over the report's limited scope, particularly its omission of three key issues.

First, according to Lewis, the roadmap does not outline provisions to ensure competition in the AI marketplace, specifically how to prevent early innovators with access to vast computing power and big data from establishing monopolies.

Second, the roadmap omits protection for fair use under copyright law, which Lewis says is essential to protecting online creativity.

Finally, Lewis criticized the roadmap for not presenting a clear vision for sustainable accountability and oversight of AI. Public Knowledge has previously advocated for an “expert digital regulator” to oversee the fast-evolving landscape.

Lewis echoed the report's focus on bias and discrimination in poorly designed and poorly operated AI, the spread of disinformation, and the potential for AI to upend industries and challenge labor, listing these among the most important issues.

Lewis said educating policymakers about AI is key to getting regulations right.

“A baseline of knowledge is critical to avoid policy mistakes about the current technology, let alone where it will be in future years,” Lewis said.

Despite these reservations, Lewis said Public Knowledge looks forward to engaging with senators to address these key issues. Lewis also called on the public to stay vigilant as legislation advances and to demand that their concerns be considered alongside those of industry lobbyists.

Maria Ghazal, president of the Healthcare Leadership Council, commended the report as a groundbreaking step toward legislation, especially praising it for prioritizing patients in its analysis of integrating AI into health care. However, many advocacy groups are critical of the framework, particularly its lack of safeguards to protect minority communities.

Cody Venzke, senior policy counsel at the American Civil Liberties Union, said the framework provides insufficient safeguards for civil rights.

“Enforcement of existing civil rights laws should be reinforced with meaningful protections,” Venzke said. Venzke suggested notices for individuals being screened by AI, rigorous algorithmic audits to root out bias, and human alternatives for affected individuals.

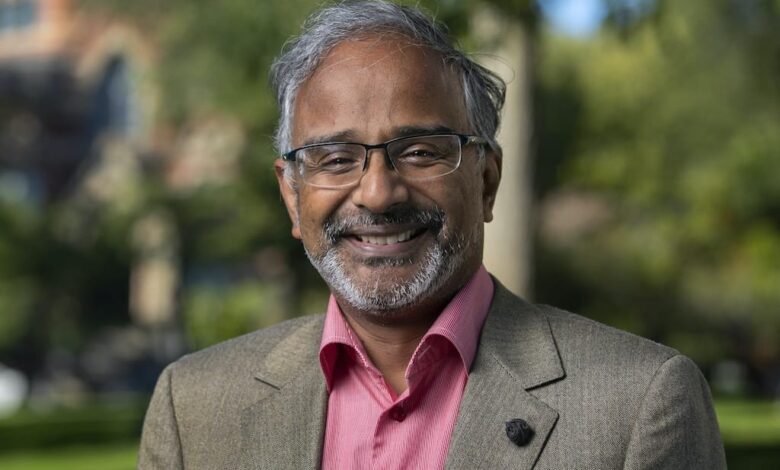

Suresh Venkatasubramanian, who helped draft the Blueprint for an AI Bill of Rights while serving as an advisor to the White House Office of Science and Technology Policy, also said the report lacked any substantial guardrails to protect civil liberties, adding that it pays only lip service to AI accountability and bias mitigation.

“The new Senate ‘roadmap’ on AI carries a strong, ‘I had to turn in a final report to get a passing grade so I won’t think about the issues and will just copy old stuff and recycle it’ vibes,” Venkatasubramanian mocked on his X account.