Slack Migrates to Cell-Based Architecture on AWS to Mitigate Gray Failures

Slack migrated most of its critical user-facing services from a monolithic architecture to a cell-based one over the last 1.5 years. The move was prompted by networking outages in a single availability zone that caused user-facing service degradation. The new architecture makes it possible to incrementally drain all traffic away from an affected availability zone within 5 minutes.

Slack has a global infrastructure footprint, but its core platform is hosted in the US East Coast region (us-east-1). The company uses availability zones (AZs) for failure isolation but has still, on occasion, experienced gray failures: intermittent issues that make it hard to determine whether a component in a distributed environment is available, and that end up affecting end users. One such incident happened on June 30, 2021, when networking equipment in one of the AZs experienced intermittent failures.

Cooper Bethea, senior staff engineer at Slack, explains why failure detection in distributed systems can be problematic:

As it turns out, detecting failure in distributed systems is a hard problem. A single Slack API request from a user (for example, loading messages in a channel) may fan out into hundreds of RPCs to service backends, each of which must complete to return a correct response to the user. Our service frontends are continuously attempting to detect and exclude failed backends, but we’ve got to record some failures before we can exclude a failed server!
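The effect of fan-out described in the quote can be illustrated with a little arithmetic. The sketch below is not from Slack; it assumes, purely for illustration, that each RPC independently hits a failing backend with the same probability, and the function name and the numbers used are hypothetical.

```python
def p_request_hits_failure(fanout: int, p_backend_failure: float) -> float:
    """Probability that at least one of `fanout` RPCs fails.

    Assumption (illustrative only): every RPC fails independently
    with the same probability `p_backend_failure`.
    """
    if fanout < 0:
        raise ValueError("fanout must be non-negative")
    if not 0.0 <= p_backend_failure <= 1.0:
        raise ValueError("p_backend_failure must be between 0 and 1")
    # The request succeeds only if every single RPC succeeds.
    return 1.0 - (1.0 - p_backend_failure) ** fanout


if __name__ == "__main__":
    # Hypothetical numbers: 100 RPCs per request, 1% of RPCs failing.
    print(f"{p_request_hits_failure(100, 0.01):.1%}")
```

Under these assumptions, a 1% RPC failure rate with a fan-out of 100 means roughly 63% of user requests touch at least one failing call, which is why a partially degraded AZ can have an outsized impact on users.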

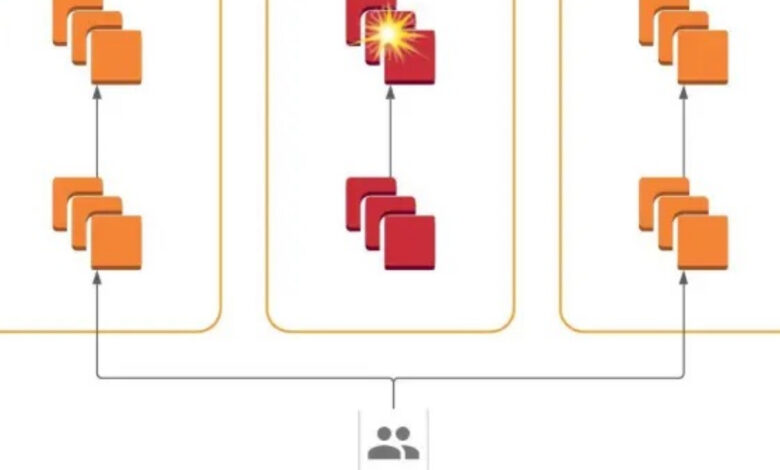

The team at Slack decided to adopt a cell-based approach in which each AZ contains a completely siloed backend deployment, with every component constrained to a single AZ. Traffic is routed into the AZ-scoped cells by a layer built on Envoy/xDS. The siloed approach was partly motivated by the heterogeneous nature of Slack’s platform: with many language stacks and service discovery interfaces in use, introducing complex routing logic between the backend services would have been very cumbersome.

Siloed Architecture With Traffic Routed Away From Affected Cell (Source: Slack Engineering Blog)

With the new architecture, the company can quickly shift traffic away from a cell that is experiencing problems, because configuration changes are propagated within seconds. Traffic can be shifted gradually (with 1% granularity) and gracefully (all in-flight requests are completed in the cell being drained).
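A minimal sketch of how percentage-based draining could translate into routing weights is shown below. It is an illustration only, not Slack’s implementation or an Envoy API: the cell names, the `cell_weights`, `traffic_share`, and `pick_cell` helpers, and the "weight = 100 minus drain percent" rule are all assumptions.

```python
import random
from typing import Dict


def cell_weights(drain_percent: Dict[str, int]) -> Dict[str, int]:
    """Map each cell's drain percentage (0-100, in 1% steps) to a relative weight."""
    weights = {}
    for cell, pct in drain_percent.items():
        if not 0 <= pct <= 100:
            raise ValueError(f"drain percent for {cell} must be between 0 and 100")
        weights[cell] = 100 - pct  # fully drained cell gets weight 0
    if sum(weights.values()) == 0:
        raise ValueError("cannot drain every cell at once")
    return weights


def traffic_share(weights: Dict[str, int]) -> Dict[str, float]:
    """Fraction of new requests each cell receives under the given weights."""
    total = sum(weights.values())
    return {cell: w / total for cell, w in weights.items()}


def pick_cell(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose a cell for a new request in proportion to its weight."""
    cells = list(weights)
    return rng.choices(cells, weights=[weights[c] for c in cells], k=1)[0]


if __name__ == "__main__":
    # Hypothetical cells; "az-b" is being drained in steps.
    for pct in (0, 25, 100):
        weights = cell_weights({"az-a": 0, "az-b": pct, "az-c": 0})
        print(pct, traffic_share(weights))
```

Only the selection of a cell for new requests changes here. Requests already in flight in the drained cell are unaffected by the weight update, which is one way to model the graceful behavior described above.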

Slack’s path follows the guidance laid out by AWS, further explored in the Cloud-Native Architecture Series, where authors present different tools and techniques for improving the scalability and resiliency of cloud workloads, utilizing container-based compute services. AWS architects argue for strong fault-isolation boundaries in cloud architectures to prepare for black swan events and minimize the impact of unexpected failures.

Cell-based Architecture With EKS (Source: AWS Architecture Blog)

Slack’s adoption of cell-based architecture has generated substantial discussion in the community. Among numerous comments (most of which were somewhat off-topic) in the busy HN thread, the user tedd4u pointed out that cell-based architectures have been around for at least 10 years. Another user named ignoramous (self-proclaimed ex-AWS employee) highlighted that the guidance on cell-based architecture is based on Amazon’s and AWS’ own efforts to deliver resiliency in the cloud.

Recently, Roblox also shared its journey towards cell-based architecture and future plans to improve the resiliency of its platform further.