The Linux Foundation and tech giants partner on open-source generative AI enterprise tools

Intel CEO Pat Gelsinger focused his sales pitch for Gaudi 3 on enterprise customers, telling them a “third phase” of AI will mean automating complex enterprise tasks.

Intel

The Linux Foundation and a host of major tech companies are teaming up to build generative AI platforms for enterprise users.

Intel, Red Hat, VMware, Anyscale, Cloudera, KX, MariaDB Foundation, Qdrant, SAS, and several other companies are partnering on the Open Platform for Enterprise AI (OPEA), a Linux Foundation initiative to develop open-source AI solutions for companies worldwide. While the partners stopped short of saying what exactly they will develop, they promised “the development of open, multi-provider, robust, and composable GenAI systems.”

The corporate world is abuzz over AI and its potential. While some companies are building their own AI solutions, others are reliant upon third-party providers. Some of the AI services they need are unique to their businesses, but in many cases, AI services that can predict outcomes, autocomplete spreadsheet formulas, and optimize worker time can be used across industries.


OPEA aims to address the latter use case. Because the partners have committed to building open-source AI products, enterprise customers would conceivably be able to move between those products without compatibility or interoperability issues.

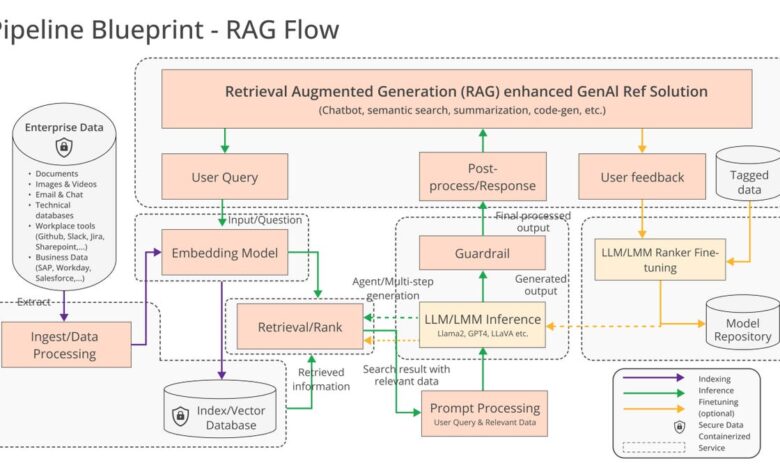

The companies also hope to address the increasing adoption of retrieval-augmented generation (RAG) solutions, they said. RAG refers to an AI model’s ability to access external data to supplement its understanding of user queries and deliver better results.

A view of how OPEA solutions could work, with help from RAG.

OPEA
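As a rough illustration of the RAG idea described above, the sketch below retrieves the most relevant external snippet for a query and prepends it to the prompt an AI model would receive. It is not OPEA code or any partner's API: the function names, the sample snippets, and the simple word-overlap scoring (a stand-in for the vector search real systems typically use) are all assumptions made for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Count overlapping words; a toy stand-in for vector similarity."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(document))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents that best match the query."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Augment the user's question with the retrieved external context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical external data, invented purely for this example.
JOURNAL_SNIPPETS = [
    "Trial results suggest drug A reduces migraine frequency in adults.",
    "A survey of hospital staffing levels across three regions.",
    "Guidance on storing vaccines at low temperatures.",
]

prompt = build_prompt("Does drug A help with migraine?", JOURNAL_SNIPPETS)
print(prompt)
```

The resulting prompt, rather than the bare question, is what would be passed to the model. Each stage here (data source, retriever, prompt builder, model) could come from a different vendor, which is why a lack of standard interfaces between them matters.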

For example, if doctors use an AI model to enhance their practice, externally sourced medical journals could improve both the model's answers and the doctors' outcomes. The problem, however, is that RAG pipelines haven’t been standardized, creating issues for companies wanting to deploy new AI platforms.

“OPEA intends to address this issue by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions that showcase performance, interoperability, trustworthiness and enterprise-grade readiness,” the companies said in a statement.

Although these companies have committed to building cross-compatible AI tools through OPEA, they’re still competitors that have a vested interest in generating revenue from their own products. While enterprises could ultimately benefit from open-source AI tools, the companies will still need to play nicely together if they want to achieve OPEA’s goals.