The US has not released crocodiles into the Rio Grande and the Louvre is not on fire: How to detect if an image has been generated with AI

The United States has not released “8,000 crocodiles into the Rio Grande” to prevent migrants from crossing, nor has the pyramid of the Louvre Museum in Paris been set on fire. Nor has a giant octopus been found on a beach, and the whereabouts of Bigfoot and the Loch Ness Monster remain a mystery. All this misinformation arose from images generated with artificial intelligence. While tech companies such as Google, Meta and OpenAI are trying to detect AI-created content and create tamper-resistant watermarks, users face a difficult challenge: discerning whether the images circulating on social networks are real or not. While it is sometimes possible to tell with the naked eye, there are tools that can help in more complex cases.

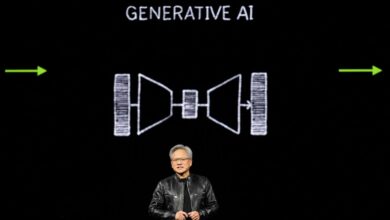

Generating images with artificial intelligence is becoming easier and easier. “Today anyone, without any technical skills, can type a sentence into a platform like DALL-E, Firefly, Midjourney or another prompt-based model and create a hyper-realistic piece of digital content,” says Jeffrey McGregor, CEO of Truepic, one of the founding companies of the Coalition for Content Provenance and Authenticity (C2PA). Some services are free and others don’t even require an account.

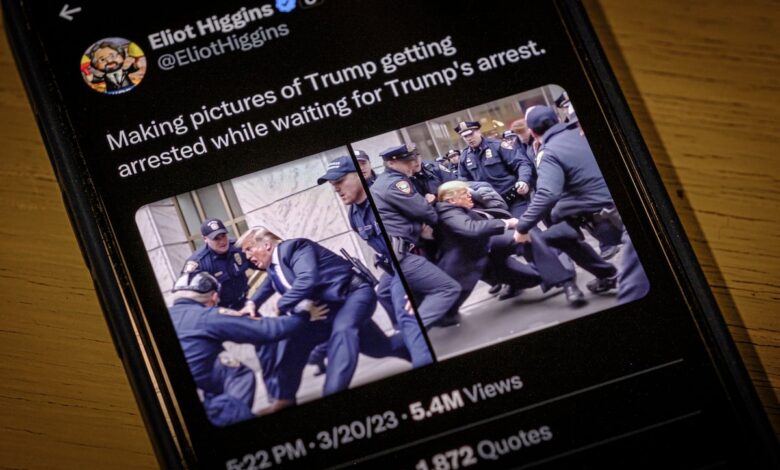

“AI can create incredibly realistic images of people or events that never took place,” says Neal Krawetz, founder of Hacker Factor Solutions and FotoForensics, a tool to verify whether an image may have been manipulated. In 2023, for example, an image of Pope Francis wearing a Balenciaga feather coat and others of former U.S. president Donald Trump fleeing from the police to avoid arrest went viral. Krawetz stresses that such images can be used to influence opinions, damage someone’s reputation, create misinformation, and provide false context around real situations. In this way, “they can erode trust in otherwise reliable sources.”

AI tools can also be used to falsely depict people in sexually compromising positions, committing crimes, or in the company of criminals, as V.S. Subrahmanian, a professor of computer science at Northwestern University, points out. Last year dozens of minors in Extremadura, Spain, reported that fake nude photos of themselves created by AI were circulating. “These images can be used to extort, blackmail, and destroy the lives of leaders and ordinary citizens,” Subrahmanian says.

AI-generated images can also pose a serious threat to national security: “They can be used to divide a country’s population by pitting one ethnic, religious, or racial group against another, which could lead in the long run to riots and political upheaval.” Josep Albors, director of research and awareness at Spanish IT security company ESET, explains that many hoaxes rely on these types of images to generate controversy and stir up reactions. “In an election year in many countries, this can tip the balance to one side or the other,” he says.

Tips for detecting AI-generated images

Experts like Albors advise being suspicious of everything in the online world. “We have to learn to live with the fact that this exists, that AI generates parallel realities, and just keep that in mind when we receive content or see something on social networks,” says Tamoa Calzadilla, editor-in-chief of Factchequeado, an initiative by Maldita.es and Chequeado to combat Spanish-language disinformation in the United States. Knowing that an image may have been generated by AI “is a big step toward not being misled and not sharing disinformation.”

Some images of this type are easy to detect just by looking at details of the hands, eyes, or faces of the people in them, according to Jonathan Pulla, a fact-checker at Factchequeado. This was the case with an AI-created image of U.S. President Joe Biden wearing a military uniform: “You can tell by the different skin tones on his face, the phone wires that go nowhere, and the disproportionately sized forehead of one of the soldiers in the image.”

He also gives as an example manipulated images of actor Tom Hanks wearing T-shirts bearing slogans for or against Donald Trump’s re-election. “That [Hanks] is in the same pose and only the text on the T-shirt changes, that his skin is very smooth and his nose irregular, indicate that they may have been created with digital tools, such as artificial intelligence,” Maldita.es verifiers say about these images, which went viral in early 2024.

Many AI-generated images can be identified at a glance by a trained user, especially those created with free tools, according to Albors: “If we look at the colors, we will often notice that they are not natural, that everything looks like plasticine and that some components of these images even blend together, such as the hair on the face, or various garments with each other.” If the image is of a person, the expert also suggests looking to see if there is anything anomalous about their limbs.

Although the first-generation image generators made “simple mistakes,” they have improved significantly over time. Subrahmanian points out that in the past they often depicted people with six fingers and unnatural shadows. They also rendered street and store signs incorrectly, making “absurd spelling mistakes.” “Today, technology has largely overcome these deficiencies,” he says.

Tools to identify fake images

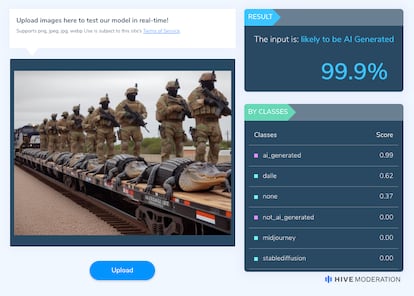

The problem is that now “an AI-generated image is already virtually indistinguishable from a real one for many people,” as Albors points out. There are tools that can help identify such images, such as AI or NOT, Sensity, FotoForensics, or Hive Moderation. OpenAI is also creating its own tool to detect content created by its image generator, DALL-E, as announced on May 7 in a press release. In the area of audio deepfakes, the VerificAudio program helps identify synthetic voices.

Such tools, according to Pulla, are useful as a complement to observation, “as sometimes they are not very accurate or do not detect some AI-generated images.” Fact-checkers often turn to Hive Moderation and FotoForensics. Both can be used for free and work in a similar way: the user uploads a photo and requests that it be examined. While Hive Moderation provides a percentage of how likely the content is to have been generated by AI, the results of FotoForensics are more difficult to interpret for someone without prior knowledge.

When given the image of the crocodiles supposedly sent by the U.S. to the Rio Grande, the Pope in the Balenciaga coat, or one of a satanic McDonald’s Happy Meal, Hive Moderation reports a 99.9% chance that each was AI-generated. However, for the manipulated photo of Kate Middleton, Princess of Wales, it reports a probability of 0%. In this case, Pulla found it more useful to turn to FotoForensics and InVID, which “can show certain altered details in an image that are not visible.”

But why is it so difficult to determine if an image has been generated with artificial intelligence? The main limitation of such tools, according to Subrahmanian, is that they lack context and prior knowledge. “Humans use their common sense all the time to separate real claims from false ones, but machine learning algorithms for deepfake image detection have made little progress in this regard,” he says. The expert believes that it will become increasingly difficult to know with 100% certainty whether a photo is real or AI-generated.

Even if a detection tool were accurate 99% of the time in determining whether content was AI-generated or not, “that 1% gap on the scale of the Internet is huge.” In one year alone, AI generated 15 billion images, says a report in Everypixel Journal, which notes that “AI has already created as many images as photographers have taken in 150 years.” “When all it takes is one convincingly fabricated image to degrade confidence, 150 million undetected images is a pretty disturbing number,” McGregor says.
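McGregor’s figure follows from simple arithmetic, which can be checked with a short sketch. It assumes, as a simplification, that “accurate 99% of the time” means the tool misses 1% of AI-generated images; the function name and the constant are illustrative and do not come from any detection tool.

```python
# Rough check of the figure quoted above. Assumption: a tool that is
# "99% accurate" fails to flag 1% of the AI-generated images it sees.

def undetected_images(total_images: int, accuracy_percent: int) -> int:
    """Return how many images slip past a detector with the given accuracy."""
    missed_percent = 100 - accuracy_percent
    return total_images * missed_percent // 100

# 15 billion AI-generated images in one year, per the Everypixel Journal report.
AI_IMAGES_PER_YEAR = 15_000_000_000

missed = undetected_images(AI_IMAGES_PER_YEAR, 99)
print(f"{missed:,}")  # 150,000,000
```

Even at 99.9% accuracy, the same calculation would still leave 15 million images undetected.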

Aside from manipulated pixels, McGregor stresses that it is also nearly impossible to verify, after an image has been created, whether its metadata (the time, date and location) is accurate. The expert believes that “the provenance of digital content, which uses cryptography to mark images, will be the best way for users to identify in the future which images are original and have not been modified.” His company, Truepic, claims to have launched the world’s first transparent deepfake carrying these markings, which include information about its origin.
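The general idea behind cryptographic provenance can be illustrated with a minimal sketch. It is not Truepic’s or C2PA’s implementation: standards such as C2PA rely on certificate-based signatures embedded in the file, whereas this example substitutes a keyed hash (HMAC) from Python’s standard library, with a hypothetical key and placeholder image bytes.

```python
import hashlib
import hmac

# Hypothetical signing key for illustration only. Real provenance systems
# use certificate-based key pairs rather than a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"

def sign_image(image_bytes: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex tag tied to the exact bytes of the image."""
    digest = hashlib.sha256(image_bytes).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()

def is_unmodified(image_bytes: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Check that the image still matches the tag issued when it was created."""
    return hmac.compare_digest(sign_image(image_bytes, key), tag)

# Placeholder bytes standing in for a real image file.
original = b"\x89PNG placeholder image bytes"
tag = sign_image(original)

print(is_unmodified(original, tag))            # True
print(is_unmodified(original + b"\x00", tag))  # False: a single extra byte breaks the match
```

Because the tag depends on every byte of the file, any later edit to the image invalidates it, which is what lets a viewer tell an untouched original from a modified copy.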

Until these systems are widely implemented, it is essential that users adopt a critical stance. A guide produced by Factchequeado, with support from the Reynolds Journalism Institute, lists 17 tools to combat disinformation. Among them are several to verify photos and videos. The key, according to Calzadilla, is to be aware that none of them is infallible or 100% reliable. Therefore, to detect whether an image has been generated with AI, no single tool is enough: “Verification is carried out through various techniques: observation, the use of tools, and classic journalism techniques. That is, contacting the original source, checking their social networks, and verifying whether the information attributed to them is true.”
