Ultimate Guide to AI Deepfake Technology

A deepfake is a type of synthetic media where the likeness of someone in an existing image or video is replaced with someone else’s likeness using artificial intelligence. This technology utilizes sophisticated AI algorithms to create or manipulate audio and video content with a high degree of realism.

Deepfake technology represents one of the most intriguing – and controversial – advancements in artificial intelligence today. As deepfakes become more accessible and their quality continues to improve, they pose significant challenges and opportunities across various sectors, including media, entertainment, and security.

Understanding Deepfakes

Creators of deepfakes use software that leverages advanced artificial intelligence and deep learning techniques, generative adversarial networks (GANs) in particular, to create or alter video and audio content convincingly.

If you’ve spent much time online over the past few years, you’ll have noticed that this technology first became popular in celebrity videos and political content. It has since expanded its reach into other sectors, including entertainment and education.

Deepfakes are created by training AI systems with large datasets of images, videos, or audio clips to recognize patterns in facial movements, voice modulation, and other physical attributes. The AI then applies this learned data to new content, superimposing one person’s likeness onto another person’s with striking realism. While the technology showcases remarkable advancements in AI, it also raises significant ethical and security concerns due to its potential for misuse, such as creating false representations of individuals saying or doing things they never did.

Significance of Deepfakes

Deepfake technology has far-reaching implications across various domains, from politics and security to entertainment. This technology creates serious risks because it has the power to fabricate highly realistic videos and audio recordings that misrepresent people in all walks of life.

Deepfakes have raised alarms in the political sphere as they can be used to create misleading or false information, potentially influencing elections and public opinion. They threaten national security by enabling the spread of misinformation and potentially destabilizing political processes.

During election seasons in particular, deepfakes have been identified as potentially effective tools for influencing voter behavior by spreading fabricated content, especially as the quality of deepfakes improves over time.

In the context of media and public perception, there’s no denying that deepfakes can be used to enhance storytelling and create engaging content. However, they also risk undermining public trust in the media.

For example, unauthorized use of celebrity likenesses or manipulation of news footage can lead to confusion and mistrust among the public. This opens up an array of legal and ethical problems. Deepfakes challenge the concept of truth in digital media and raise questions about consent and privacy, especially when individuals’ likenesses are used without their permission.

This doesn’t mean that deepfakes can do no good. Risks aside, deepfakes hold potential for positive applications. They can be used in filmmaking, for educational purposes, and even in healthcare, such as creating personalized therapy sessions with avatars.

History and Development of Deepfake Technology

This technology grew out of basic video manipulation techniques, which have progressed to the sophisticated use of AI-driven video methods today.

Initially, simple video editing software could alter and stitch together images and clips to create the illusion of an altered reality. The term “deepfake” itself became widely recognized around 2017, when a Reddit user known as “deepfakes” began sharing hyper-realistic fake videos of celebrities. This early use of deep learning to manipulate video garnered significant attention and concern.

As AI technology advanced, the development of deepfake technology saw significant milestones, particularly with the adoption of generative adversarial networks (GANs). Introduced by Ian Goodfellow and his colleagues in 2014, GANs represent a breakthrough in the field, allowing for more sophisticated and convincing deepfakes. This technology employs two neural networks, one generating the fake images while the other tries to detect their authenticity, continuously improving the output’s realism.

Today, deepfake technology is built on even more complex neural networks that require enormous amounts of data and computing power to create their highly convincing fake content. This rapid advancement poses both opportunities and challenges, which underscores the need for continuous research in detection technologies and ethical frameworks to manage the impact of deepfakes on society.

How Deepfakes Work

Deepfake technologies operate on the backbone of advanced AI and machine learning principles, primarily through the use of generative adversarial networks (GANs).

Generative Adversarial Networks (GANs)

GANs consist of two machine learning models: the generator and the discriminator. The generator creates images or videos that look real, while the discriminator evaluates their authenticity against a set of real images or videos, setting up an adversarial relationship between the two models.

This adversarial process continues until the discriminator can no longer distinguish the generated images from the real ones, resulting in highly convincing deepfakes.

GANs learn the nuances of human expressions and movements. They allow for the realistic manipulation of video content by synthesizing new content that mimics the original data’s style and detail. This makes GANs incredibly powerful at generating deceptive media that continues to be more and more indistinguishable from authentic content.
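The generator-versus-discriminator loop described above can be sketched on a toy problem. The example below is a minimal illustration, not how any real deepfake tool is built: it assumes one-dimensional "data" (numbers drawn around a real mean of 4.0) instead of images, a two-parameter linear generator, and a logistic discriminator. The function name `train_toy_gan` and all of its parameters are hypothetical, and the generator uses the common non-saturating loss as an assumption.

```python
import math
import random


def sigmoid(s):
    """Numerically safe logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=32, lr=0.02,
                  real_mean=4.0, real_std=0.5, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data.

    Generator:      G(z) = a * z + b        (z is random noise)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated P(x is real)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (assumed starting point)
    w, c = 0.0, 0.0   # discriminator parameters

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (d - 1.0) * x
            grad_c += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w += d * x
            grad_c += d
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: adjust a and b so the updated D rates fakes as real.
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (d - 1.0) * w * z
            grad_b += (d - 1.0) * w
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch

    return {"a": a, "b": b, "w": w, "c": c}
```

Calling `train_toy_gan()` returns the learned parameters. On a toy like this, the generator’s offset `b` is pushed toward the real mean because that is where the discriminator scores samples as real, although adversarial training of this kind is known to oscillate, so no particular final value is promised here.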

Additional AI models

Besides GANs, other AI models, such as autoencoders and other neural network architectures, are also employed to enhance the realism of deepfakes.

These models assist in different aspects of the deepfake creation process, such as improving resolution, refining facial expressions, and ensuring lip synchronization in video deepfakes. All these come together to significantly contribute to the sophistication of deepfakes, allowing them to be used in various ways, from harmless entertainment to potential tools for misinformation.
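To make the autoencoder idea concrete, here is a minimal sketch of the compress-then-reconstruct principle. It is a toy under stated assumptions: the "images" are just 2-D points lying on a line, the model is linear, and the names `train_autoencoder`, `reconstruction_error`, and `LINE_POINTS` are hypothetical. Real deepfake autoencoders are deep convolutional networks working on face crops.

```python
# Toy data: 2-D points on a line, so one number per point is enough to describe them.
LINE_POINTS = [(0.6 * t, 0.8 * t) for t in (-2, -1, 0, 1, 2)]


def reconstruction_error(points, enc, dec):
    """Mean squared error between each point and its decoded reconstruction."""
    total = 0.0
    for x in points:
        code = enc[0] * x[0] + enc[1] * x[1]           # compress to one number
        total += sum((dec[i] * code - x[i]) ** 2 for i in range(2))
    return total / len(points)


def train_autoencoder(points, steps=300, lr=0.05):
    """Fit a 2-D -> 1-D -> 2-D linear autoencoder by full-batch gradient descent."""
    enc = [0.1, 0.1]   # encoder weights: code = enc . x
    dec = [0.1, 0.1]   # decoder weights: x_hat = dec * code
    n = len(points)
    for _ in range(steps):
        grad_enc = [0.0, 0.0]
        grad_dec = [0.0, 0.0]
        for x in points:
            code = enc[0] * x[0] + enc[1] * x[1]
            err = [dec[i] * code - x[i] for i in range(2)]
            back = dec[0] * err[0] + dec[1] * err[1]   # error pushed back through the decoder
            for i in range(2):
                grad_dec[i] += 2.0 * err[i] * code / n
                grad_enc[i] += 2.0 * back * x[i] / n
        for i in range(2):
            enc[i] -= lr * grad_enc[i]
            dec[i] -= lr * grad_dec[i]
    return enc, dec
```

After training, `reconstruction_error(LINE_POINTS, enc, dec)` should be far lower than with the starting weights, which is the sense in which the network has learned a compact code it can decode again.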

Current Technologies Required to Create a Deepfake

Creating deepfakes involves a combination of advanced technologies. Here are some key technologies currently used:

- Generative adversarial networks (GANs): The cornerstone of deepfake technology, GANs use two neural networks that work against each other to produce increasingly realistic images or videos.

- Autoencoders: These neural networks are used for learning efficient data coding in an unsupervised manner. For deepfakes, they help in compressing and decompressing the images to maintain quality while manipulating them.

- Machine learning algorithms: Various algorithms analyze thousands of images or video frames to understand and replicate patterns of human gestures and facial expressions.

- Facial recognition and tracking: This technology is crucial for identifying and tracking facial features to seamlessly swap faces or alter expressions in videos.

- Voice synthesis and audio processing: Advanced audio AI tools are employed to clone voices and sync them accurately with video to produce realistic audio that matches the video content.

- Video editing software: Although not always AI-driven, sophisticated video editing tools are often used alongside AI technologies to refine the outputs and make adjustments that enhance realism.

Such technologies are often integrated into platforms and applications to make it easier to create deepfakes. This approach also makes them accessible not just to professionals in visual effects but increasingly to the general public. Some examples of these platforms include DeepFaceLab, FaceSwap, and Reface.

How Deepfakes Differ from Media Editing

Deepfake technology and traditional editing software have one thing in common: They both manipulate digital media. But the difference lies in how they accomplish this task.

Editing Software

Traditional video editing software, such as Adobe Premiere Pro or Final Cut Pro, allows users to cut, splice, overlay, and adjust videos manually. They rely on human input to make changes to media files, which can include anything from adjusting color and lighting to inserting new visual elements.

While powerful, these applications demand great skill and effort to produce convincing manipulations, especially when altering someone’s appearance or actions in a video.

Deepfake Technology

In contrast, deepfake technology automates the manipulation process using artificial intelligence. Deepfakes are designed to create photorealistic alterations automatically by training AI models on a dataset of images or videos.

This training enables the AI to generate new content where faces, voices, and even body movements can be swapped or entirely fabricated with high precision, without the need for frame-by-frame manual adjustments.

Impact and Implications

The takeaway is that deepfake technology enables photorealism with minimal human intervention, which standard editing software can’t guarantee even with an expert editor.

Deepfakes can be created faster and with potentially less detectable signs of manipulation, raising concerns about their use for misinformation, fraud, or harassment. Editing software, while also capable of significant manipulation, generally results in changes that can be more easily spotted and usually requires more time and technical skill to match similar levels of realism.

3 Positive Deepfake Examples

Deepfake technology, despite its potential for misuse, has also been applied in positive and innovative ways across various fields.

Filmmaking and Entertainment

One of the most intuitive applications of deepfake technology is enhancing visual effects in film and television, for instance to recreate performances or to de-age actors. Movies such as “The Irishman,” which portrayed a younger version of Robert De Niro through digital de-aging, show the kind of effect that deepfake tools are increasingly used to produce.

DeepFaceLab has been commonly used by professionals and amateurs to achieve these high-quality visual effects in filmmaking.

Education and Training

Deepfakes can be used to create interactive educational content, making historical figures or fictional characters come to life. This application can provide a more engaging learning experience in settings ranging from classroom teachings to professional training scenarios.

An example of a platform that can be used in this manner is Synthesia, an AI video generation platform that educators use to create custom educational videos featuring deepfake avatars.

Healthcare and Therapy

In the medical field, deepfake technology can help create personalized therapy sessions in which a therapist’s avatar is made to resemble someone the patient trusts, with the goal of making therapy more effective. More broadly, the generative AI techniques behind deepfakes can also be used to design molecules that could potentially treat diseases, an approach taken by platforms such as Pharma.ai.

3 Malicious Deepfake Examples

On the flip side, deepfakes have also been used for malicious purposes. Some of the most devastating ones include:

Political Misinformation

Deepfakes have been utilized to create fake videos of politicians saying or doing things they never did. This spreads misinformation and quite easily manipulates public opinion.

For instance, a deepfake video could portray a political figure making inflammatory statements or endorsing policies they never supported. This could potentially sway elections and possibly cause public unrest.

Financial Fraud

In the financial sector, deepfakes can be, and have been, used to impersonate CEOs and other high-profile executives in videos in order to manipulate stock prices or commit fraud. When the impersonation is convincing enough, it can cause significant financial losses for companies and investors.

Image Abuse

Celebrities and public figures are often targets of deepfakes, where their likenesses are used without consent to create inappropriate or harmful content. This not only invades their privacy but also damages their public image and can lead to legal battles. Unauthorized deepfake videos of celebrities involved in fictitious or compromising situations spread across social media and other platforms and have been a popular – but controversial – use of this technology.

Deepfake Ethical and Social Implications

Deepfake technology significantly impacts truth and trust in digital content, challenging our ability to discern real from manipulated media.

Truth and Trust

The highly realistic fake media that deepfakes make possible erodes public trust in digital content, and the problem deepens as deepfakes grow in sophistication.

Real and fake media are already almost indistinguishable to many viewers. With greater sophistication, fakes will become even more difficult to spot, which will lead to more general skepticism and mistrust of all media. This complicates the public’s ability to make informed decisions and undermines democratic processes through the spread of disinformation.

Liar’s Dividend

The “Liar’s Dividend” is a troubling effect of deepfakes, where the very existence of this technology can be used as a defense by individuals to deny real, incriminating evidence against them.

By claiming that genuine footage is a deepfake, wrongdoers can escape accountability, thus benefiting liars and eroding trust even further. This has introduced new challenges for legal systems and society, as it complicates the verification process of video and audio evidence, impacting everything from political accountability to legal proceedings.

Public Discourse

Deepfakes can fabricate the statements or actions of public figures to breed misinformation and confusion. This manipulation not only distorts public perception but also fans flames of polarization and social unrest.

As a consequence, the growth of deepfake technology presents an ethical dilemma that demands urgent discussions on regulations along with the development of robust detection technologies. Otherwise, the integrity of information will be eroded and individuals will increasingly be subject to defamation and unwarranted scandals.

Legal and Regulatory Perspectives on Deepfakes

Current Laws and Frameworks

Various countries are navigating the complex challenges posed by deepfakes by implementing specific laws aimed at their misuse.

For instance, in the United States, states like California have enacted laws that make it illegal to distribute non-consensual deepfake pornography. Additionally, with the 2024 election cycle in full swing, at least 14 states have introduced legislation to counter the threats that deepfakes pose to elections, and some have made it illegal to use deepfakes for election interference. That is up from just three states in 2023 that had laws regulating AI and deepfakes in politics.

Meanwhile, the European Union is considering regulations that would require AI systems, including those used to create deepfakes, to be transparent and traceable to ensure accountability.

Global Approaches to Regulation

The global response to regulating AI and deepfake content varies. In Europe, the AI Act represents a pioneering regulatory framework that bans AI applications deemed to pose an unacceptable risk, imposes strict obligations on high-risk systems, and requires AI systems to adhere to fundamental European values. This legislation reflects a proactive approach to governance that prioritizes human rights and transparency.

Meanwhile, China’s approach to AI regulation focuses on striking a balance between control and innovation while also addressing global AI challenges such as fairness, transparency, safety, and accountability. The nation’s regulatory framework is designed to manage the rapid advancement of AI technologies, including deepfakes, to make sure that they align with national security and public welfare standards without stifling technological progress.

These examples reflect a growing awareness across the globe of the need for collaborative regulatory approaches to address the complex, cross-border nature of digital information and AI.

3 Current Technologies for Detecting a Deepfake

The development of tools to detect deepfakes has become crucial in the battle against digital misinformation. Here are some of the key tools currently employed to identify and counteract deepfakes:

Deepfake Detector Software

Deepfake detectors employ advanced machine learning algorithms to find inconsistencies typical of deepfakes. They analyze videos and images for anomalies in facial expressions, movement, and texture that human eyes might miss. Examples include Deepware Scanner, Sentinel, Intel’s FakeCatcher, and Microsoft’s Video Authenticator.

AI-Powered Verification Systems

There are AI-powered systems that specifically target the detection of deepfakes used to manipulate election processes or spread misinformation. For example, TrueMedia’s AI verification system helps journalists and fact-checkers by comparing suspected media against verified databases to determine authenticity.

Audio Analysis Tools

Analyzing the audio track is another way to assess the validity of video content. McAfee’s recent innovations include deepfake audio detection technology designed to identify alterations in audio, a common component of sophisticated deepfakes. Known as “Project Mockingbird,” the detector analyzes tonal inconsistencies and unnatural speech patterns to flag fake audio content.

4 Methodologies for Deepfake Detection

Some of the top deepfake detection methodologies are:

- Error-level analysis and deep learning: This methodology combines error-level analysis with deep learning techniques to identify inconsistencies within a digital image or video that suggest manipulation. The approach analyzes compression errors across digital files and applies deep learning to recognize patterns typical of deepfakes.

- Real-time deepfake detection: Some tools are designed to operate in real-time, using AI to analyze video streams and alert users to the presence of deepfakes as they are being broadcast. This is crucial for media outlets and live broadcasts where immediate verification is necessary.

- Audio deepfake detection: Focusing on the auditory aspects of deepfakes, this methodology analyzes subtle voice patterns and detects minor anomalies that may point to manipulation, including speech produced by an AI voice generator. It’s particularly useful in verifying the authenticity of audio files and protecting against scams that use voice imitation.

- Multimodal detection techniques: Combining multiple data sources and sensory inputs, these techniques use a holistic approach to detect deepfakes by analyzing both audio and visual elements together. This integration helps in identifying discrepancies between the two that may go unnoticed when analyzed separately.
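The error-level analysis idea from the first methodology can be sketched in a few lines. This is a simplified illustration under loud assumptions: real error-level analysis re-saves an image as JPEG and inspects the difference, whereas this sketch uses coarse quantization of grayscale pixel values as a stand-in for lossy recompression, and it leaves out the deep learning stage entirely. The helper names (`recompress`, `error_levels`, `flag_blocks`, `make_demo_images`) and the threshold are hypothetical.

```python
def recompress(image, step=16):
    """Stand-in for lossy recompression: snap each pixel to a multiple of `step`."""
    return [[min(255, int(round(p / step)) * step) for p in row] for row in image]


def error_levels(image, step=16):
    """Per-pixel difference between the image and its recompressed copy."""
    again = recompress(image, step)
    return [[abs(p - q) for p, q in zip(row, row2)]
            for row, row2 in zip(image, again)]


def flag_blocks(image, block=4, step=16, threshold=2.0):
    """Return (top, left) corners of blocks whose mean error level exceeds the threshold."""
    errs = error_levels(image, step)
    height, width = len(image), len(image[0])
    flagged = []
    for top in range(0, height, block):
        for left in range(0, width, block):
            cells = [errs[r][c]
                     for r in range(top, min(top + block, height))
                     for c in range(left, min(left + block, width))]
            if sum(cells) / len(cells) > threshold:
                flagged.append((top, left))
    return flagged


def make_demo_images(step=16):
    """Build an already-compressed 8x8 image and a copy with a pasted 4x4 patch."""
    raw = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]
    clean = recompress(raw, step)
    tampered = [row[:] for row in clean]
    for r in range(4):
        for c in range(4):
            # Pasted pixels never went through the same compression step.
            tampered[4 + r][4 + c] = 100 + 3 * r + 5 * c
    return clean, tampered
```

Pixels that already went through the compression step change very little when compressed again, while pasted-in pixels change more, so `flag_blocks` singles out only the block containing the patch in the demo image. A practical detector would feed error maps like these to a trained model rather than rely on a fixed threshold.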

Challenges and Limitations in Detecting Deepfakes

- Rapid technological advancements: The pace at which deepfake technology is evolving makes it difficult for detection methods to keep up. As AI models become more sophisticated, they can generate more convincing deepfakes that are harder to detect using current methods.

- Limited training data: Effective deepfake detection needs extensive datasets of fake and real content for training AI models. However, the availability of such datasets is limited, which restricts the training and effectiveness of detection algorithms.

- Detection evasion techniques: As detection methods improve, so do the techniques used to evade these measures. Deepfake creators often adapt to detection methods by altering their techniques, which yields a continuous arms race between deepfake generation and detection.

- Complexity in multimodal detection: Deepfakes can involve not just visual elements but also audio, making them multimodal. Effective detection needs to consider all aspects, which increases the complexity and resource requirements of detection systems.

5 Ways to Spot a Deepfake

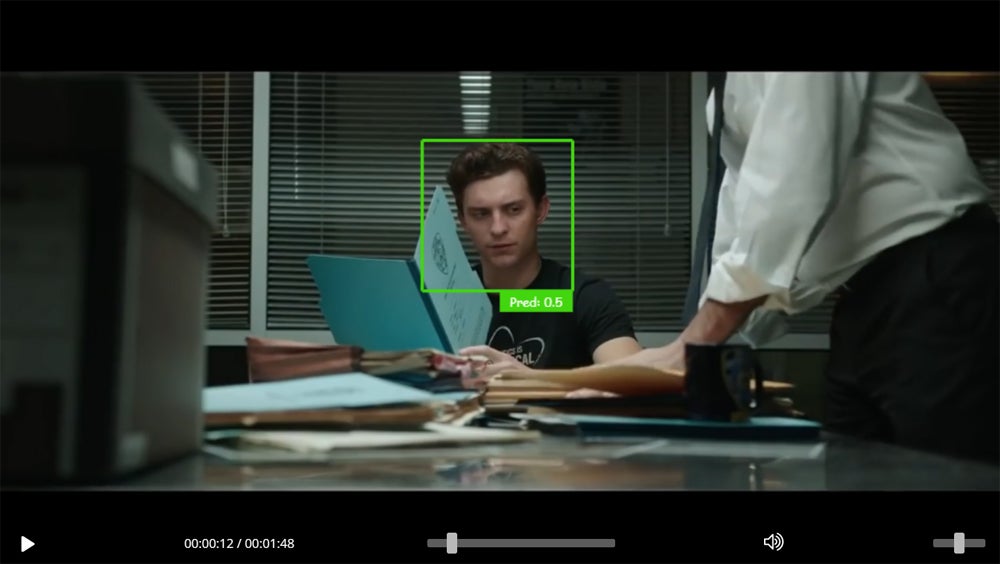

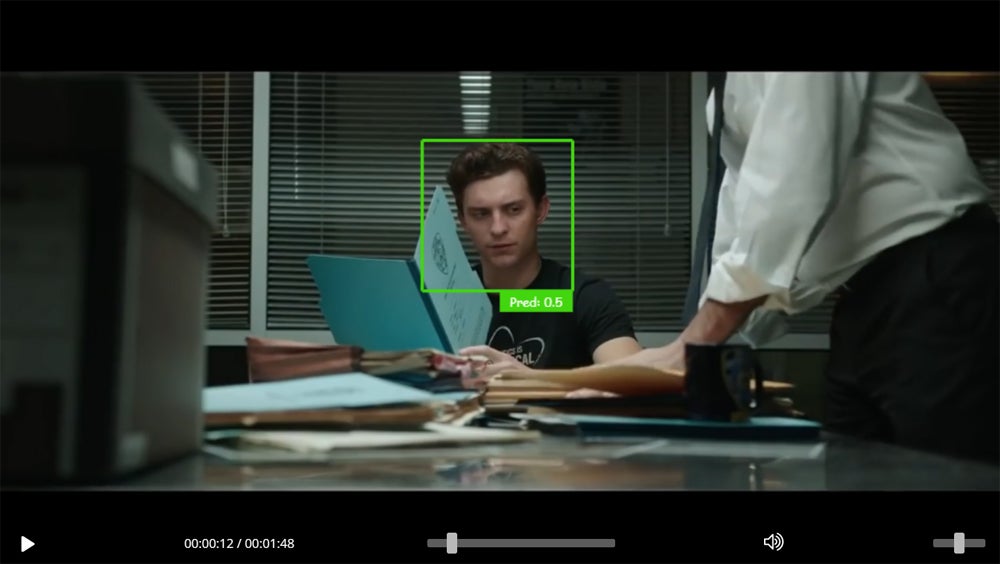

Detecting deepfakes can be challenging, but several indicators can help identify them. The techniques below are illustrated with a popular deepfake video based on the movie Spider-Man: No Way Home, which swaps current Spider-Man actor Tom Holland’s face with that of former Spider-Man actor Tobey Maguire.

I used Deepware AI to test the video, which was unsurprisingly flagged as a deepfake.

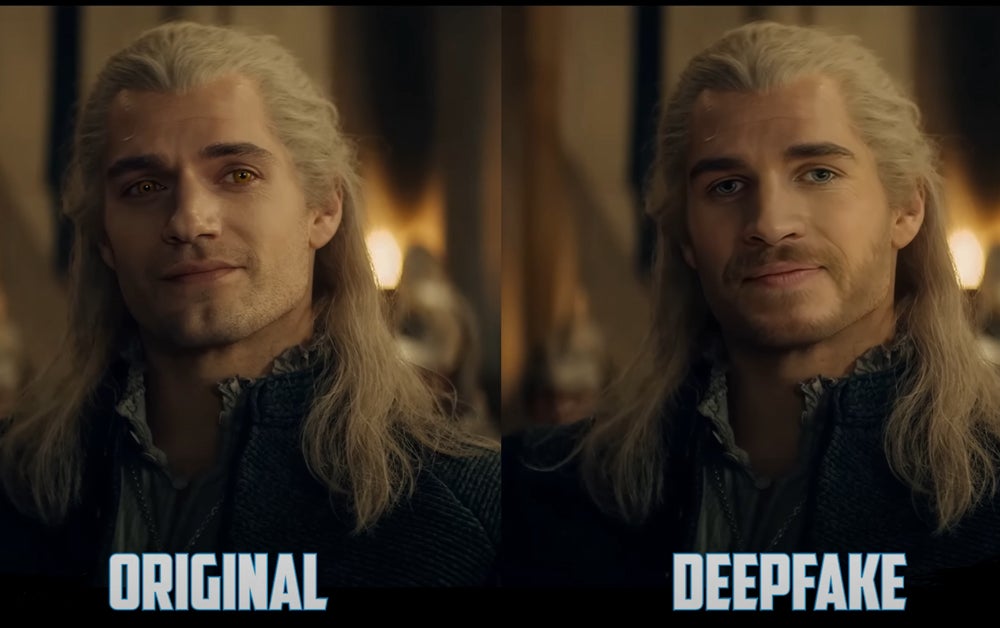

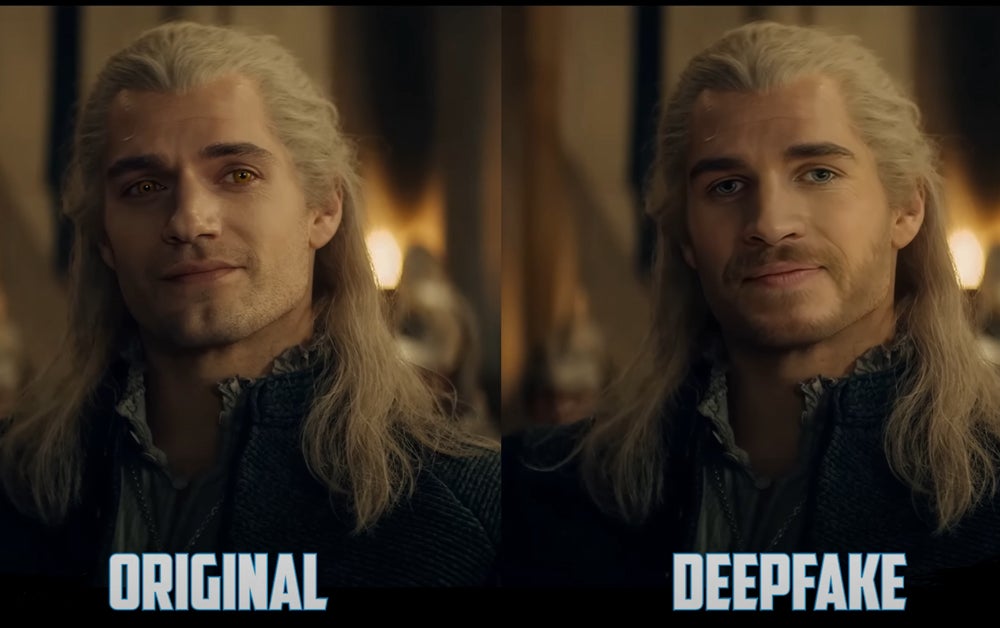

- Look at the faces: As we’ve established, the most common method used in deepfakes involves face swapping. You should closely examine the edges of the face for any irregularities or blurring that might indicate manipulation. This is often where deepfake technology struggles to seamlessly blend the altered face with the body. I found the tell in the image below to be just how unnervingly smooth the face is. Also, the chin is alarmingly asymmetric.

- Check for jagged face edges: Imperfections at the border of the face where it meets the hair and background can be telltale signs of a deepfake. These edges may appear jagged or unnaturally sharp compared to the rest of the image.

- Assess lip sync accuracy: In videos, poor lip synchronization can be a clear indicator of a deepfake. If the movements of the mouth don’t perfectly match up with the spoken words, it could scream deepfake.

- Analyze skin texture: Look for mismatches in skin texture between the face and the body. Deepfakes often fail to perfectly replicate the skin texture across different parts, which can appear inconsistent or unnatural. In this particular frame below, I found the texture of the skin to be mismatched with the scene in the video, as it made the face look computer generated.

- Observe lighting inconsistencies: Lighting and shadows in deepfakes may not align correctly with natural light sources in the scene. Inconsistencies in how light falls on the face compared to the rest of the scene can indicate a deepfake. For instance, looking at the image below closely enough, I could notice that the lighting on the face looks unnatural.
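The lip sync check in the list above can also be approximated in code. The sketch below is a rough illustration that assumes you already have two per-frame measurements, how wide the mouth is open and how loud the audio is, and that in genuine footage the two tend to rise and fall together. How those measurements are extracted is out of scope here, and the names `pearson`, `lip_sync_suspect`, and the 0.5 threshold are hypothetical choices, not values from any detection product.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def lip_sync_suspect(mouth_open, loudness, threshold=0.5):
    """Flag a clip when mouth movement and audio loudness are poorly correlated."""
    return pearson(mouth_open, loudness) < threshold


# Hypothetical per-frame measurements on a 0-to-1 scale.
LOUDNESS = [0.1, 0.8, 0.9, 0.2, 0.1, 0.7, 0.9, 0.3]
MOUTH_IN_SYNC = [0.0, 0.7, 0.8, 0.1, 0.0, 0.6, 0.8, 0.2]
MOUTH_OUT_OF_SYNC = [0.8, 0.1, 0.0, 0.6, 0.8, 0.2, 0.0, 0.7]
```

With these made-up numbers, `lip_sync_suspect(MOUTH_IN_SYNC, LOUDNESS)` is `False` and `lip_sync_suspect(MOUTH_OUT_OF_SYNC, LOUDNESS)` is `True`. A low score is only a hint worth a closer look, since dubbing, off-screen speakers, and background music can all break the correlation in genuine video.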

Build Protection Against Deepfakes: Next-Step Strategies and Recommendations

Collaborative efforts among technology companies, policymakers, and researchers are key to developing more effective tools for detecting deepfakes and establishing ethical guidelines. Here are some initiatives and strategies to be aware of that may enhance protection against deepfake threats.

Forge Partnerships for Advanced Detection Technologies

To effectively combat deepfakes, you should consider partnerships that leverage the strengths of both the technology sector and academic research. Companies and universities should collaborate to innovate and refine AI algorithms capable of detecting manipulated content accurately.

Develop and Advocate for Robust Policies and Legislation

As stakeholders in a digital world, it’s imperative to participate in or initiate discussions around policy development that addresses the challenges posed by deepfakes. Work toward crafting legislation that balances the prevention of misuse with the encouragement of technological advancements. Engage with policymakers to ensure that any new laws are practical, effective, and inclusive of expert insights from the AI community.

Establish Ethical Guidelines and Standards

Take proactive steps to formulate and adhere to ethical AI guidelines that govern the use of synthetic media. This involves setting standards that ensure transparency, secure consent, and uphold accountability in the creation and distribution of deepfakes.

Look to organizations like the AI Foundation and academic institutions such as the MIT Media Lab to develop these ethical practices. Encourage widespread adoption and compliance across industries to foster responsible innovation and maintain public trust in digital content.

By adopting these strategies, you can contribute to building a resilient framework against deepfakes, ensuring AI is ethically used, and safeguarding the integrity of digital media. These actions not only help mitigate the risks associated with deepfakes, they also promote a culture of responsibility and innovation in artificial intelligence.

Bottom Line: Navigating Deepfake Technology

Deepfake technology presents transformational possibilities for innovation, but they are often overshadowed by the challenges it poses.

There’s no denying the immense potential of deepfakes to enhance areas such as entertainment, education, and even healthcare. However, the risks associated with their misuse in spreading misinformation and violating personal privacy cannot be overlooked. To ensure that the development and application of deepfake technology benefit society as a whole, we need to champion an approach involving innovation, rigorous ethical standards, and proactive regulatory measures.
