Unlocking the potential of in-network computing for telecommunication workloads

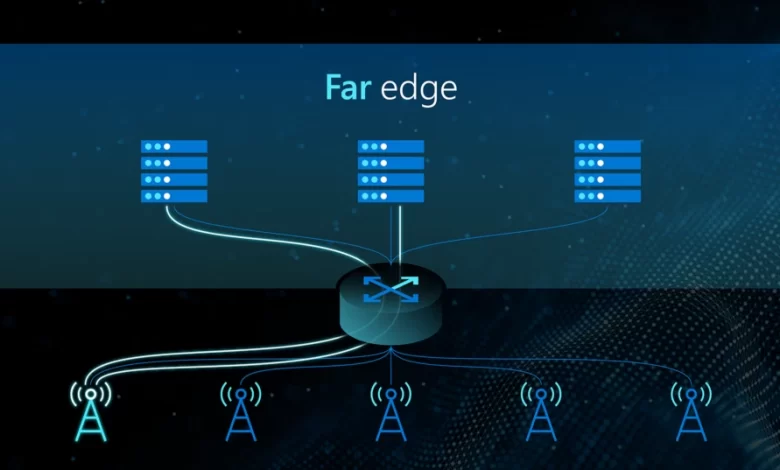

Azure Operator Nexus is the next-generation hybrid cloud platform created for communications service providers (CSPs). Azure Operator Nexus deploys Network Functions (NFs) across various network settings, such as the cloud and the edge. These NFs can carry out a wide array of tasks, ranging from classic ones like layer-4 load balancers, firewalls, network address translation (NAT), and 5G user plane functions (UPF) to more advanced functions like deep packet inspection, radio access networking, and analytics. Given the large volume of traffic and the number of concurrent flows that NFs manage, their performance and scalability are vital to maintaining smooth network operations.

Until recently, network operators had two distinct options for implementing these critical NFs: use standalone hardware middlebox appliances, or use network function virtualization (NFV) to implement them on a cluster of commodity CPU servers.

The decision between these options hinges on many factors, including each option's performance, memory capacity, cost, and energy efficiency. All of these must be weighed against the specific workloads and operating conditions, such as the traffic rate and the number of concurrent flows that NF instances must be able to handle.

Our analysis shows that the CPU server-based approach typically outshines proprietary middleboxes in terms of cost efficiency, scalability, and flexibility. It is an effective strategy when traffic volume is relatively light, since it can comfortably handle loads below a few hundred Gbps. As traffic volume swells, however, the strategy begins to falter, because more and more CPU cores must be dedicated solely to network functions.

In-network computing: A new paradigm

At Microsoft, we have been working on an innovative approach that has piqued the interest of both industry and academia: deploying NFs on programmable switches and network interface cards (NICs). This shift has been made possible by significant advances in high-performance programmable network devices, as well as by the evolution of data plane programming languages such as P4 (Programming Protocol-independent Packet Processors) and Network Programming Language (NPL). For example, programmable switching Application-Specific Integrated Circuits (ASICs) offer a degree of data plane programmability while still sustaining high packet processing rates of up to tens of Tbps, or a few billion packets per second. Programmable NICs, or "smart NICs," equipped with Network Processing Units (NPUs) or Field Programmable Gate Arrays (FPGAs), present a similar opportunity. In essence, these advances turn the data planes of these devices into programmable platforms.

This technological progress has ushered in a new computing paradigm called in-network computing, which lets us run functionality that previously required CPU servers or proprietary hardware devices directly on network data plane devices. This includes not only NFs but also components of other distributed systems. With in-network computing, network engineers can implement various NFs on programmable switches or NICs, handling large volumes of traffic (e.g., > 10 Tbps) cost-efficiently (e.g., one programmable switch instead of tens of servers) without dedicating CPU cores specifically to network functions.

Current limitations on in-network computing

Despite the attractive potential of in-network computing, its full realization in practical deployments in the cloud and at the edge remains elusive. The key challenge here has been effectively handling the demanding workloads from stateful applications on a programmable data plane device. The current approach, while adequate for running a single program with fixed, small-sized workloads, significantly restricts the broader potential of in-network computing.

A considerable gap exists between the evolving needs of network operators and application developers and the current, somewhat limited, view of in-network computing, primarily due to a lack of resource elasticity. As the number of potential concurrent in-network applications grows and the volume of traffic that requires processing swells, this model is strained. At present, a single program runs on a single device under stringent resource constraints, such as tens of MB of SRAM on a programmable switch. Relaxing these constraints typically requires significant hardware modifications, so when an application's workload demands exceed the limited resources of a single device, the application cannot run. In turn, this limitation hampers the wider adoption and optimization of in-network computing.

Bringing resource elasticity to in-network computing

In response to the fundamental challenge of resource constraints in in-network computing, we've embarked on a journey to enable resource elasticity. Our primary focus is on in-switch applications, those running on programmable switches, which currently face the strictest resource and capability limitations among today's programmable data plane devices. Instead of proposing hardware-intensive solutions like enhancing switch ASICs or creating hyper-optimized applications, we're exploring a more pragmatic alternative: an on-rack resource augmentation architecture.

In this model, we envision a deployment that integrates a programmable switch with other data-plane devices, such as smart NICs and software switches running on CPU servers, all connected on the same rack. The external devices offer an affordable and incremental path to scale the effective capacity of a programmable network in order to meet future workload demands. This approach offers an intriguing and feasible solution to the current limitations of in-network computing.

In 2020, we presented a novel system architecture called the Table Extension Architecture (TEA) at the ACM SIGCOMM conference [1]. TEA provides elastic memory through a high-performance virtual memory abstraction. This allows top-of-rack (ToR) programmable switches to handle NFs with large table state, such as one million per-flow table entries, which can demand several hundred megabytes of memory, an amount typically unavailable on switches. The key innovation behind TEA is that it lets switches access unused DRAM on CPU servers within the same rack in a cost-efficient and scalable way. It achieves this using Remote Direct Memory Access (RDMA), while exposing only high-level Application Programming Interfaces (APIs) to application developers and hiding the underlying complexity.
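To make the idea of a switch reading table entries directly from server DRAM more concrete, here is a minimal Python sketch. It assumes a simple bucketed hash table stored in a server memory region, with the switch computing the bucket address from the key and fetching the whole bucket with a single one-sided read. The RDMA read is only simulated with a `bytearray` slice, and the names `RemoteTable`, `bucket_addr`, and `rdma_read`, the 16-byte entry layout, and the four-slot buckets are all hypothetical choices for illustration; this is not TEA's actual data layout or API.

```python
import hashlib
import struct
from typing import Optional

# Hypothetical entry layout: 8-byte flow key + 8-byte value ("<QQ").
# Key 0 marks an empty slot, so real keys must be nonzero.
ENTRY_FMT = "<QQ"
ENTRY_SIZE = struct.calcsize(ENTRY_FMT)  # 16 bytes


class RemoteTable:
    """Toy model of a switch table whose entries live in server DRAM."""

    def __init__(self, num_buckets: int, slots_per_bucket: int = 4) -> None:
        self.num_buckets = num_buckets
        self.slots = slots_per_bucket
        self.bucket_size = ENTRY_SIZE * slots_per_bucket
        # Stand-in for an RDMA-registered memory region on a rack server.
        self.dram = bytearray(num_buckets * self.bucket_size)

    def bucket_addr(self, key: int) -> int:
        # The switch derives the remote address from the key alone,
        # so no server CPU is involved in locating the entry.
        digest = hashlib.blake2b(key.to_bytes(8, "little"), digest_size=8).digest()
        return (int.from_bytes(digest, "little") % self.num_buckets) * self.bucket_size

    def rdma_read(self, addr: int, length: int) -> bytes:
        # Simulated one-sided RDMA READ of `length` bytes at `addr`.
        return bytes(self.dram[addr:addr + length])

    def insert(self, key: int, value: int) -> None:
        # Control-plane write: reuse the key's slot or take the first empty one.
        if key == 0:
            raise ValueError("key 0 is reserved for empty slots")
        base = self.bucket_addr(key)
        for i in range(self.slots):
            off = base + i * ENTRY_SIZE
            k, _ = struct.unpack_from(ENTRY_FMT, self.dram, off)
            if k in (0, key):
                struct.pack_into(ENTRY_FMT, self.dram, off, key, value)
                return
        raise RuntimeError("bucket full")

    def lookup(self, key: int) -> Optional[int]:
        # Data-plane lookup: one remote read fetches the whole bucket,
        # then the slots are scanned locally for a matching key.
        bucket = self.rdma_read(self.bucket_addr(key), self.bucket_size)
        for k, v in struct.iter_unpack(ENTRY_FMT, bucket):
            if key != 0 and k == key:
                return v
        return None


if __name__ == "__main__":
    table = RemoteTable(num_buckets=1024)
    table.insert(0x0A000001, 7)  # e.g., a flow key mapped to a backend index
    print(table.lookup(0x0A000001))  # 7
```

Reading a whole bucket per lookup keeps each table access to one fixed-size remote read, which is the property that makes latency predictable in this sketch; how a real system handles full buckets, updates, and caching is outside what this toy models.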

Our evaluations with various NFs demonstrate that TEA delivers low, predictable latency and scalable throughput for table lookups, all without involving the servers' CPUs. This architecture has drawn considerable attention from both academia and industry and has been applied in use cases that include network telemetry and 5G user plane functions.

In April, we introduced ExoPlane at the USENIX Symposium on Networked Systems Design and Implementation (NSDI) [2]. ExoPlane is an operating system designed specifically for on-rack switch resource augmentation that supports multiple concurrent applications.

The design of ExoPlane incorporates a practical runtime operating model and state abstraction to tackle the challenge of managing application state across multiple devices with minimal performance and resource overhead. The operating system consists of two main components: the planner and the runtime environment. The planner accepts multiple programs, written for a switch with few or no modifications, and optimally allocates resources to each application based on inputs from network operators and developers. The ExoPlane runtime environment then executes workloads across the switch and external devices, managing state, balancing load across devices, and handling device failures. Our evaluation shows that ExoPlane provides low latency, scalable throughput, and fast failover while maintaining a minimal resource footprint and requiring few or no modifications to applications.
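As a rough illustration of what a planner for on-rack augmentation has to decide, the Python sketch below places application tables either in switch SRAM or on an external device. It uses a simple greedy heuristic (most accesses per MB go to the switch first, the rest spill to the external device with the most free memory), which is a swapped-in technique for illustration and not ExoPlane's optimal allocation. The classes `Table` and `Device`, the function `plan`, and every size and access rate in the example are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    app: str
    name: str
    size_mb: float
    hits_per_sec: float  # hypothetical access-rate hint from operators/developers


@dataclass
class Device:
    name: str
    capacity_mb: float
    used_mb: float = 0.0
    tables: list = field(default_factory=list)

    def free_mb(self) -> float:
        return self.capacity_mb - self.used_mb

    def fits(self, t: Table) -> bool:
        return t.size_mb <= self.free_mb()

    def place(self, t: Table) -> None:
        self.used_mb += t.size_mb
        self.tables.append(t)


def plan(tables: list[Table], switch: Device, externals: list[Device]) -> dict:
    """Greedy placement: tables with the most hits per MB get switch SRAM first;
    anything that does not fit spills to the external device with the most free memory."""
    placement = {}
    for t in sorted(tables, key=lambda x: x.hits_per_sec / x.size_mb, reverse=True):
        if switch.fits(t):
            target = switch
        else:
            candidates = [d for d in externals if d.fits(t)]
            if not candidates:
                raise RuntimeError(f"no device can hold {t.app}/{t.name}")
            target = max(candidates, key=lambda d: d.free_mb())
        target.place(t)
        placement[(t.app, t.name)] = target.name
    return placement


if __name__ == "__main__":
    # Illustrative numbers only: a switch with tens of MB of SRAM,
    # plus a smart NIC and a server exposing spare memory on the same rack.
    switch = Device("switch", capacity_mb=64)
    externals = [Device("smartnic", capacity_mb=4096), Device("server", capacity_mb=65536)]
    tables = [
        Table("nat", "flows", size_mb=400, hits_per_sec=1e6),
        Table("firewall", "acl", size_mb=20, hits_per_sec=5e6),
        Table("lb", "vip", size_mb=2, hits_per_sec=8e6),
        Table("telemetry", "sketch", size_mb=30, hits_per_sec=1e5),
    ]
    for key, dev in plan(tables, switch, externals).items():
        print(key, "->", dev)
```

With these made-up inputs, the load balancer, firewall, and telemetry tables (52 MB in total) stay on the switch, and the 400 MB NAT flow table spills to the server. A greedy pass like this can be far from optimal when many applications compete for the same SRAM, which is one reason a planner might instead solve the allocation as an optimization problem.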

Looking ahead: The future of in-network computing

As we continue to explore the frontiers of in-network computing, we see a future rife with possibilities, exciting research directions, and new deployments in production environments. Our present efforts with TEA and ExoPlane have shown us what's possible with on-rack resource augmentation and elastic in-network computing. We believe they can serve as a practical foundation for enabling in-network computing for future applications, telecommunication workloads, and emerging data plane hardware. As always, the ever-evolving landscape of networked systems will continue to present new challenges and opportunities. At Microsoft, we are actively investigating, inventing, and enabling such technology advances through infrastructure enhancements. In-network computing frees up CPU cores, resulting in reduced cost, increased scale, and enhanced functionality that telecom operators can benefit from through our innovative products such as Azure Operator Nexus.

References