Using ECG AI to find the cardiac amyloidosis needles in the haystack

Early detection of cardiac amyloidosis is paramount for improved outcomes, since there are no therapies to remove amyloid that has already deposited in the heart. The disease is considered relatively rare, but it is also widely acknowledged to be underdiagnosed. Because many centers have little experience with amyloidosis cases and most lack dedicated amyloidosis experts, researchers are looking at ways artificial intelligence (AI) might make it easier to identify these patients.

With new drugs recently made available to treat cardiac amyloidosis, there has been an explosion of interest in improving diagnosis so that patients can be found and treated. The drugs can help stabilize the disease but not reverse it, so diagnosis is crucial early, when the disease is at its most treatable stage. Signs of amyloidosis are often found on echocardiograms, but early disease is subtle and easily missed.

Symptoms of amyloidosis also often resemble those of more common cardiac conditions, so the disease is frequently not recognized until it has reached an advanced stage. AI is expected to play a bigger role soon in detecting and classifying the disease, and tools are being developed for both imaging and the more easily accessible ECG.

AI for ECG detection of amyloidosis

Research in the latest issue of JACC: Advances showed that even training data that are not ideally curated can be used to develop a 12-lead ECG AI algorithm that detects cardiac amyloidosis with up to 89% accuracy. [1]

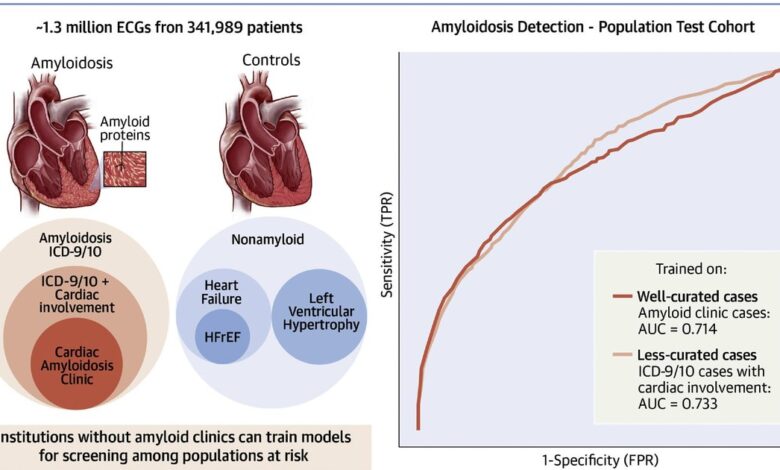

The single-center study evaluated the performance of AI models based on electrocardiogram waveforms for cardiac amyloidosis screening and assessed the impact of different criteria for defining cases and controls. The AI models were developed using 1.3 million ECGs from 341,989 patients and were then tested on cohorts with identical selection criteria, as well as on a general patient population cohort at Cedars-Sinai.

The researchers said it is difficult to find well-curated cohorts of patients with rare diseases for testing the algorithms designed to detect them. That, they said, is why they designed this study: to see whether less well-curated cohorts can effectively train AI.

The models performed similarly in population screening regardless of how stringently training cases were defined, showing that institutions without dedicated amyloid clinics can train meaningful models on less curated cardiac amyloidosis cases. The researchers also found that the area under the receiver operating characteristic curve (AU-ROC) or other metrics alone are insufficient for evaluating deep learning algorithm performance. Instead, they said, models need to be evaluated in the most clinically meaningful population.
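One reason the evaluation population matters, illustrated here with Bayes' rule rather than with anything from the study, is that a screening model's practical usefulness depends on how common the disease is where it is deployed, and AU-ROC does not reflect that. The minimal sketch below computes positive predictive value (the share of positive screens that are true cases) for one fixed operating point; the sensitivity, specificity and prevalence values are hypothetical, chosen only for illustration.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Fraction of positive screens that are true cases, via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical operating point: 85% sensitivity and 85% specificity.
SENS, SPEC = 0.85, 0.85

# Same model, different populations: an enriched 50/50 test set vs. broader screening.
for prevalence in (0.50, 0.05, 0.005):
    ppv = positive_predictive_value(SENS, SPEC, prevalence)
    print(f"prevalence {prevalence:.3f}: PPV {ppv:.3f}")
```

With the model unchanged, PPV falls from 0.85 in a balanced test set to about 0.23 at 5% prevalence and below 0.03 at 0.5% prevalence, which is why performance in a curated case-control set says little about performance in population screening.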

For this study, the AI models were given ECGs from patients with cardiac amyloidosis, plus ECGs from controls without the disease. The models then learned on their own which ECG patterns are associated with cardiac amyloidosis, so they could identify cases on ECGs they had not seen before.

The researchers tested various combinations of case and control definitions and found wide variability in performance, with AU-ROCs ranging from 0.467 to 0.898 depending on the selected model and test set.
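For readers less familiar with the metric, AU-ROC can be read as the probability that a model scores a randomly chosen case higher than a randomly chosen control. The sketch below computes it directly from that definition (the Mann-Whitney formulation, with ties counted as half); the function name `roc_auc` and the scores are hypothetical toy values, not study data. An uninformative model scores about 0.5, so the study's low of 0.467 is no better than chance, while 0.898 reflects strong discrimination.

```python
from itertools import product


def roc_auc(case_scores, control_scores):
    """AU-ROC as P(random case scores higher than random control), ties count 0.5.

    Compares every case/control pair, so it is O(n * m): fine for illustration,
    too slow for a million-ECG test set.
    """
    wins = 0.0
    for case, control in product(case_scores, control_scores):
        if case > control:
            wins += 1.0
        elif case == control:
            wins += 0.5
    return wins / (len(case_scores) * len(control_scores))


# Toy model outputs (hypothetical); higher means "more likely amyloidosis".
cases = [0.9, 0.8, 0.7, 0.4]
controls = [0.6, 0.3, 0.2, 0.1]
print(roc_auc(cases, controls))  # 0.9375: 15 of 16 pairs ranked correctly
```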

“Several trends emerged from this analysis. First, good performance in a test set mirroring the training/development set does not guarantee good performance in a broader population. Second, incorporating a broad set of controls is critical. None of the models developed with narrow control definitions (such as restricting to heart failure or left ventricular hypertrophy) were viable. Such a strategy could be reasonably motivated by forcing the model to learn to make difficult distinctions in a clinical diagnostic context, but such cases should still be part of a broader population if that is the intended use of the resulting model,” wrote Timothy J. Poterucha, MD, assistant professor of medicine in the Division of Cardiology at Columbia University Irving Medical Center, in an editorial about the study. [2]

Lessons on AI accuracy and possible shortcuts AI may take

The study authors tested a variety of combinations of factors in different AI algorithms to see which worked best. They said this turned out to be important, because some combinations caused the AI to take shortcuts when assessing patients, and those shortcuts were not always accurate.

“We caution readers that choosing training cohorts for AI models mirrors designing a case-control study. Small choices in selection criteria can significantly impact model generalizability, as models can identify shortcut variables and confounding influences,” the researchers wrote in their conclusion.

They gave one example of how the models took different routes depending on how cases and controls were selected. Age and sex matching led to a model that outperformed one trained on unmatched cases and controls. They said this is likely because matching cases and controls on age and sex forced the model to learn features other than age and sex. Without matching, age and sex could act as confounders that a model learns to use as proxies to differentiate cases from controls.
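The study's exact matching procedure is not described here, so the sketch below is a generic greedy 1:k age-and-sex matching routine, not the authors' method. The names `Patient` and `match_controls` and the parameters `per_case` and `age_tolerance` are hypothetical; each case gets controls of the same sex within a few years of age, and each control is used at most once.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    # Hypothetical minimal record; real cohorts carry many more fields.
    patient_id: str
    age: int
    sex: str  # "F" or "M"


def match_controls(cases, controls, per_case=2, age_tolerance=2, seed=0):
    """Greedy 1:k matching on sex and age (within +/- age_tolerance years).

    Each control is used at most once; cases without enough eligible
    controls are dropped rather than matched loosely.
    """
    rng = random.Random(seed)
    pool = list(controls)
    matched = []
    for case in cases:
        eligible = [c for c in pool
                    if c.sex == case.sex and abs(c.age - case.age) <= age_tolerance]
        if len(eligible) < per_case:
            continue
        chosen = rng.sample(eligible, per_case)
        for c in chosen:
            pool.remove(c)  # sample without replacement across cases
        matched.append((case, chosen))
    return matched


cases = [Patient("case-1", 78, "M"), Patient("case-2", 70, "F")]
controls = [Patient(f"ctl-{i}", age, sex) for i, (age, sex) in enumerate(
    [(77, "M"), (80, "M"), (45, "M"), (71, "F"), (69, "F"), (30, "F")])]
for case, chosen in match_controls(cases, controls):
    print(case.patient_id, sorted(c.patient_id for c in chosen))
# case-1 ['ctl-0', 'ctl-1']
# case-2 ['ctl-3', 'ctl-4']
```

The 45-year-old and 30-year-old controls are never selected: after matching, age and sex no longer separate the two groups, so a model cannot use them as proxies.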

Another example involved a model whose cases and controls were matched on age, sex and QRS amplitude; it generalized poorly. The researchers said this makes sense, as low ECG amplitude is a hallmark feature of amyloidosis. Eliminating any difference in QRS amplitude between cases and controls forces the AI model to rely on other, rarer features to distinguish them, and those features may not be present in all cases when the model is tested at the population level.
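A toy illustration of that effect, using hypothetical QRS amplitudes and a hypothetical cut-off rather than study data or a clinical low-voltage criterion: a "shortcut" rule that only flags low voltage separates cases from unmatched controls, but once controls are chosen to match the cases' amplitudes, it flags both groups at the same rate and carries no information.

```python
# Hypothetical QRS amplitudes in millivolts; values are illustrative only.
CASE_QRS = [0.4, 0.5, 0.6, 0.7]
UNMATCHED_CONTROL_QRS = [0.9, 1.0, 1.1, 0.6]
AMPLITUDE_MATCHED_CONTROL_QRS = [0.4, 0.5, 0.6, 0.7]  # controls picked to match cases
LOW_VOLTAGE_THRESHOLD_MV = 0.65  # hypothetical cut-off, not a clinical criterion


def flag_rate(qrs_values, threshold=LOW_VOLTAGE_THRESHOLD_MV):
    """Fraction of ECGs a low-voltage-only 'shortcut' rule would flag."""
    return sum(v < threshold for v in qrs_values) / len(qrs_values)


print("cases flagged:", flag_rate(CASE_QRS))                                  # 0.75
print("unmatched controls flagged:", flag_rate(UNMATCHED_CONTROL_QRS))         # 0.25
print("matched controls flagged:", flag_rate(AMPLITUDE_MATCHED_CONTROL_QRS))  # 0.75
```

In the matched setting a model has to learn from whatever else differs between the groups, which is the situation the researchers linked to poor generalization.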

“The authors are to be congratulated for carrying out this complex, rigorous analysis. The finding that using similar case and control definitions as the planned deployment is likely a generalizable one in AI research, and this manuscript continues to build the evidence basis for using AI to detect cardiac amyloidosis. The next frontier is clear: we must move beyond the retrospective and carry out the essential clinical trials to determine if we can diagnose cardiac amyloidosis with these technologies,” Poterucha concluded in the editorial about this study.