Vecow Embedded Computer Solves Time Synchronization Challenges in Low-Speed Electric Vehicles

May 22, 2024


Compared to their outdoor counterparts, indoor mobile robots are relatively easy to design, as they generally don’t have to worry about weather, difficult terrain, or unexpected obstructions. Indoor robots are designed to navigate and operate in places like homes, offices, warehouses, hospitals, and factories. These robots use a combination of sensors, software, and sometimes artificial intelligence to understand and interact with their surroundings.

Outdoor mobile robots can be quite useful at a time of global labor shortages, particularly in agriculture, manufacturing, and various service industries. For example, self-driving low-speed vehicles can reduce labor burdens by operating around the clock. Sometimes called neighborhood electric vehicles (NEVs), they can serve in areas like airports, malls, and amusement parks.

Low-speed electric vehicles also have a smaller environmental impact than their gas-powered counterparts, aligning with many corporations’ ESG goals. According to one industry analyst, the low-speed electric vehicle market is projected to reach $8.8 billion by 2029, with a CAGR of 8.6%.

Key characteristics of outdoor mobile robots include:

- Mobility, often using wheels, tracks, or legs designed to handle rough conditions.

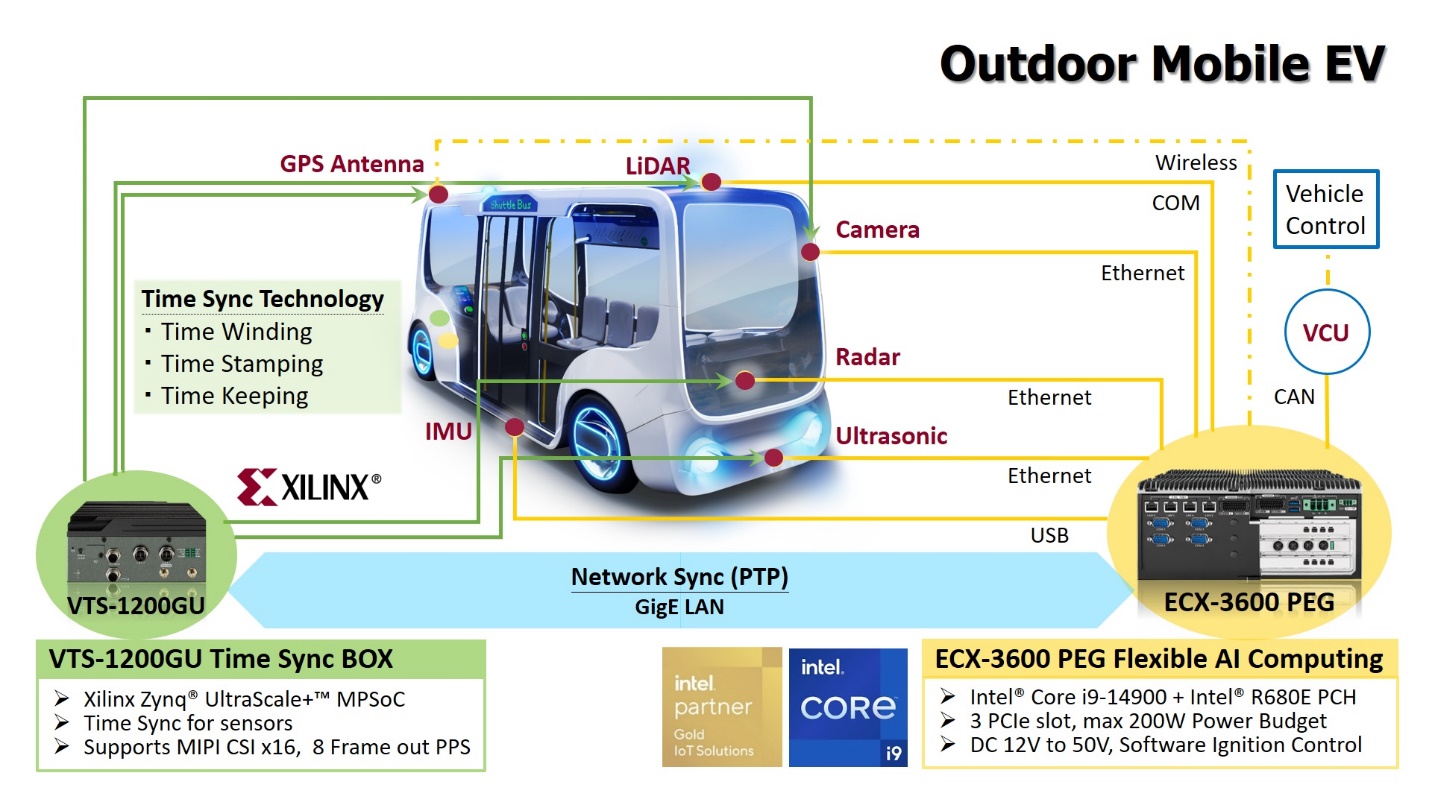

- Navigation, using sensors such as GPS, cameras, and LiDAR to navigate complex environments. Advanced algorithms like simultaneous localization and mapping (SLAM) can be used to maintain accurate positioning.

- Autonomy, meaning that the robots can operate with a high degree of independence, allowing them to execute tasks without constant human supervision. They can be programmed for specific routes or tasks, adjusting to changing conditions.

- Connectivity, typically handled through wireless networks, enabling remote monitoring, control, and data transmission.

As noted, an outdoor mobile robot pulls in data from a slew of sensors for various reasons. It may need to monitor its own movement (detecting when objects are in the way, when the terrain changes, etc.) or to monitor its surroundings as part of its primary function. In other words, the sensors help it navigate, perceive, and interact with its environment, all without human intervention.

These sensors can include a global positioning system (GPS) receiver, to obtain geographic location, and inertial measurement units (IMUs), which measure motion and orientation through accelerometers, gyroscopes, and sometimes magnetometers.
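To illustrate how an IMU’s accelerometer and gyroscope readings are often combined, here is a minimal complementary-filter sketch for a single pitch angle. Integrating the gyroscope rate gives a smooth estimate that drifts over time, while the accelerometer’s view of gravity is noisy but drift-free; blending the two keeps the best of each. The function names, the 0.98 blending weight, and the axis convention are illustrative assumptions, not part of any Vecow product.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector the accelerometer sees.

    Assumes one common axis convention (x forward, z up when level);
    real IMUs may differ.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(prev_pitch: float, gyro_rate: float,
                        accel: tuple[float, float, float],
                        dt: float, alpha: float = 0.98) -> float:
    """One filter step: mostly trust the integrated gyro, nudge toward the accelerometer."""
    gyro_estimate = prev_pitch + gyro_rate * dt  # smooth, but drifts
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)
```

For example, starting from a wrong estimate of 0.1 rad on a stationary, level vehicle (accelerometer reading (0, 0, 9.81), gyro rate 0), repeated calls pull the estimate back toward zero within a few hundred 10 ms steps.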

LiDAR (light detection and ranging) sensors use laser beams to create high-resolution maps of a surrounding environment, useful for obstacle detection. Similarly, ultrasonic sensors use sound waves to detect nearby objects or obstacles, typically just for short-range proximity sensing. For longer-range object detection, some outdoor robots employ radar, particularly in environments with low visibility.
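Pulsed LiDAR and ultrasonic sensors both rely on the time-of-flight principle: emit a pulse, time the echo, and halve the round trip. The sketch below, with hypothetical function and constant names, shows why the two differ so much in the timing precision they need, which is one reason accurate timestamps matter in these systems. Note that some LiDAR units use phase-shift or FMCW ranging instead of simple pulses.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, in vacuum
SPEED_OF_SOUND_M_S = 343.0          # approximate, dry air at about 20 °C


def tof_distance(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a target from a round-trip echo time (the pulse travels out and back)."""
    return wave_speed_m_s * round_trip_s / 2.0


lidar_m = tof_distance(200e-9, SPEED_OF_LIGHT_M_S)      # 200 ns echo
ultrasonic_m = tof_distance(0.01, SPEED_OF_SOUND_M_S)   # 10 ms echo
```

A LiDAR echo arriving after just 200 ns corresponds to roughly 30 m, while an ultrasonic echo after 10 ms corresponds to under 2 m, so a LiDAR timing error of a few nanoseconds already shifts the range by about a meter.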

Simple (and inexpensive) infrared or capacitive sensors can detect the proximity of objects, aiding in obstacle avoidance. Those would be coupled with environmental sensors, which measure external conditions like temperature, humidity, air quality, or weather data, aiding robots that operate in agricultural or environmental monitoring applications. RGB cameras can be used to capture visual information, enabling tasks like object recognition, visual mapping, and navigation. If needed, specialized stereo or depth cameras can be used.

Combining the ECX-3600 PEG with the VTS-1200GU time-sync box results in a high-performance embedded computer that suits outdoor mobile-robot applications.

Combining the data from some (or all) of these sensors creates a comprehensive understanding of the environment and enables more efficient operation. Known as sensor fusion, this technique mitigates the limitations of individual sensors. It also adds a level of redundancy, because some of the results overlap. For example, the cameras and LiDAR might provide similar, corroborating information, raising the confidence level needed for truly safe, efficient, autonomous operation.
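One of the simplest forms of sensor fusion is an inverse-variance weighted average: each sensor’s estimate of the same quantity is weighted by how precise it is, and the fused result is more certain than any single input. The sketch below assumes independent measurements with known noise variances; the class, function, and example numbers are hypothetical, and a production stack would more likely use a Kalman filter or similar estimator.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # e.g., distance to an obstacle, in meters
    variance: float  # sensor noise variance, in m^2 (must be > 0)


def fuse(measurements: list[Measurement]) -> Measurement:
    """Inverse-variance weighted average of independent measurements of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is smaller than every input variance.
    return Measurement(value=value, variance=1.0 / total)


camera = Measurement(value=10.4, variance=0.25)  # noisier depth estimate
lidar = Measurement(value=10.0, variance=0.01)   # more precise range
fused = fuse([camera, lidar])
```

Here the fused distance lands close to the LiDAR reading (about 10.02 m), and its variance (1/104 m²) is lower than either sensor’s alone, which is the "raised confidence" that corroborating sensors provide.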

Vecow provides a next-generation computing platform that’s up to the task of driving an outdoor mobile robot. The ECX-3600 PEG pairs an Intel Core i7-13700E processor with either an NVIDIA Tesla T4 or a GeForce RTX 4060 graphics card.

The rugged VTS-1200GU is powered by a Xilinx Zynq UltraScale+ MPSoC, and offers 16 Gbytes of RAM, as well as a host of I/O. It suits outdoor mobile-robot applications thanks to its ability to operate in a wide temperature range, from -40°C to +75°C.

The platform’s computing resources support the AI workloads that the application generates. The system handles a power budget as high as 200 W, with a DC input ranging from 12 to 50 V. From an I/O perspective, the ECX-3600 PEG offers USB 3.2 Gen 2×2 with a maximum data-transfer bandwidth of 20 Gbits/s, four independent 2.5-Gbit/s IEEE 802.3 interfaces (with 2-Gbit/s Ethernet LAN), and multiple expansion options using two PCIe x16 slots, one PCIe x8 slot, and one Mini PCIe slot. Security is handled through TPM 2.0, and storage comes via four front-access 2.5-in. SSD trays.

Note that Vecow can provide a customized thermal solution with this platform. The company would ensure that the installation position and configuration of both the embedded system and the graphics card are optimized to facilitate maximum airflow, aiding in heat dissipation. In some cases, this would necessitate redesigning or rearranging the layout of system components to achieve better cooling efficiency.

The ECX-3600 PEG addresses several common requirements, including a simple upgrade path, easy integration of whatever sensors are needed, and adherence to the highest levels of safety and reliability. These qualities are all hallmarks of Vecow’s design, integration, and manufacturing process.

A second component that can play a big role in this application is the Vecow VTS-1000 Time Sync Box, which is based on a Xilinx UltraScale+ MPSoC and runs a Linux operating system with the ROS 2 Humble framework. Other features of the platform include an eight-channel sync output for connecting cameras, LiDAR, radar, and an IMU. Connectivity includes 1-GigE LAN, one network-sync LAN port with PTP/gPTP support, an external GNSS input, and a daisy-chain sync output. The DC input voltage range is 5 to 60 V, and the operating temperature range is -40°C to +75°C.
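For context on what PTP support provides, the IEEE 1588 delay request-response exchange lets a device estimate its clock offset from a master clock using four timestamps. The sketch below shows only that textbook calculation; it assumes a symmetric network path and is not Vecow’s implementation. gPTP (IEEE 802.1AS) measures link delay with a peer-to-peer mechanism instead, so this covers the end-to-end PTP case only. The class and function names are hypothetical.

```python
from typing import NamedTuple


class PtpExchange(NamedTuple):
    t1: int  # master sends Sync (master clock, ns)
    t2: int  # slave receives Sync (slave clock, ns)
    t3: int  # slave sends Delay_Req (slave clock, ns)
    t4: int  # master receives Delay_Req (master clock, ns)


def offset_and_delay(ex: PtpExchange) -> tuple[float, float]:
    """Return (slave offset from master, mean one-way path delay), both in ns.

    Assumes the path delay is the same in both directions.
    """
    master_to_slave = ex.t2 - ex.t1  # = delay + offset
    slave_to_master = ex.t4 - ex.t3  # = delay - offset
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay
```

For a slave clock running 1,500 ns ahead over an 800 ns path, the timestamps (0, 2300, 10000, 9300) yield an offset of 1,500 ns and a delay of 800 ns; the slave would then subtract the offset to align with the master.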

Note that the VTS-1000 can support 90% of the sensors currently available on the market. It also supports time-winding, time-stamping, and time-keeping technologies. In addition, through daisy chaining, the platform can synchronize a virtually unlimited number of sensors. See the white paper "Requirements for Outdoor Mobile Robots Include Computer Vision and Time Synchronization" for more information on time sync.
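Once every sensor stamps its data against a common clock, software can pair readings that describe the same instant, a prerequisite for sensor fusion. The sketch below matches each camera frame to the nearest LiDAR sweep within a tolerance. It assumes timestamps in seconds on a shared clock; the function names and the 5 ms tolerance are hypothetical, and ROS 2’s message_filters package offers a more complete approximate-time synchronizer for this job.

```python
from bisect import bisect_left
from typing import Optional


def nearest_match(target_ts: float, sorted_ts: list[float],
                  tolerance: float) -> Optional[int]:
    """Index of the timestamp in sorted_ts closest to target_ts, or None if none is within tolerance."""
    i = bisect_left(sorted_ts, target_ts)
    # The closest timestamp is either just before or just after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(sorted_ts)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(sorted_ts[j] - target_ts))
    return best if abs(sorted_ts[best] - target_ts) <= tolerance else None


def pair_frames(camera_ts: list[float], lidar_ts: list[float],
                tolerance: float = 0.005) -> list[tuple[float, float]]:
    """Pair each camera frame with the closest LiDAR sweep, dropping frames with no close match."""
    lidar_sorted = sorted(lidar_ts)
    pairs = []
    for cam in camera_ts:
        j = nearest_match(cam, lidar_sorted, tolerance)
        if j is not None:
            pairs.append((cam, lidar_sorted[j]))
    return pairs


pairs = pair_frames([0.0, 0.0333, 0.0667, 0.1], [0.001, 0.1015])
```

With a 30-fps camera and a 10-Hz LiDAR, only the frames at 0.0 s and 0.1 s have a sweep within 5 ms, so `pairs` holds two matches; without a shared clock, such tight tolerances would be meaningless.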

Vecow can make these claims with confidence because it has already produced such a system for a Taiwan-based customer that develops software for autonomous vehicles. That customer focuses primarily on low-speed transportation vehicles, which are prominently featured as shuttles in an amusement park and as golf carts across Taiwan.

To reiterate, the key reasons to collaborate with Vecow on an application like this one include a high degree of flexibility and customization, advanced cooling technology, and years of engineering experience in all corners of the globe. Contact the company for more information.