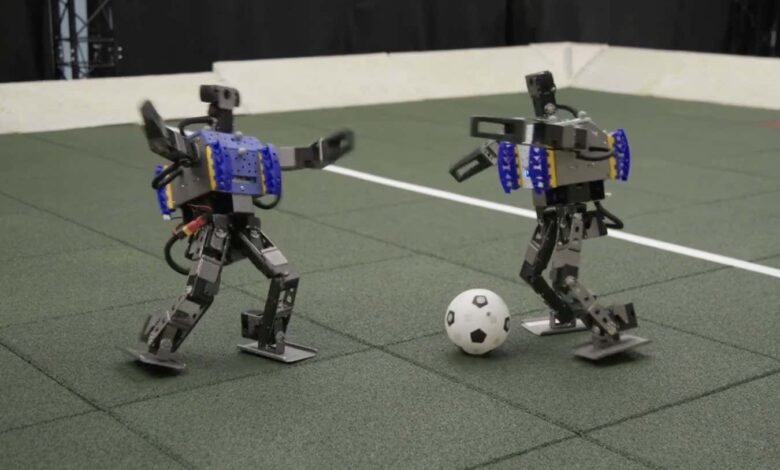

Watch mini humanoid robots showing off their football skills

Footballing robots have had an upgrade. Two-legged robots trained with deep reinforcement learning, a form of artificial intelligence, can walk, turn to kick a ball and get back up after a fall more quickly than robots working from scripted lessons.

Guy Lever at Google DeepMind and his colleagues put battery-powered Robotis OP3 robots, which are about 50 centimetres tall and have 20 joints, through 240 hours of deep reinforcement learning.

This technique combines two key tenets of AI training. In reinforcement learning, agents gain skills through trial and error and are rewarded for good choices, so they learn to choose correctly more often than wrongly. Deep learning uses layered neural networks, which are loosely modelled on the human brain, to analyse patterns in the data the AI is shown.
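The trial-and-error half of that description can be illustrated with a toy example. The sketch below is not the DeepMind team's method: it is a minimal "epsilon-greedy" learner choosing between three made-up actions, and the success probabilities, step count and exploration rate are all assumptions chosen for illustration. It has no neural network, so it shows only the reinforcement part of deep reinforcement learning.

```python
import random


def train_bandit(success_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which action is rewarded most often.

    success_probs holds hypothetical chances that each action earns a reward.
    The learner never sees these numbers; it only sees the rewards.
    """
    rng = random.Random(seed)
    n_actions = len(success_probs)
    counts = [0] * n_actions    # how often each action has been tried
    values = [0.0] * n_actions  # running average reward for each action

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try an action at random.
            action = rng.randrange(n_actions)
        else:
            # Exploit: pick the action that has paid off best so far.
            action = max(range(n_actions), key=lambda a: values[a])

        # The environment hands back a reward of 1 (success) or 0 (failure).
        reward = 1.0 if rng.random() < success_probs[action] else 0.0

        # Nudge the running average for this action towards the new reward.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]

    return values, counts


values, counts = train_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print("Estimated reward per action:", [round(v, 2) for v in values])
print("Action the learner prefers:", best_action)
```

A real robot faces a far harder version of the same loop, with continuous joint movements instead of three choices and a neural network in place of the small table of averages.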

The researchers compared their bots against robots working from pre-scripted skills. The ones trained with deep reinforcement learning could walk 181 per cent faster, turn 302 per cent quicker, kick a ball 34 per cent harder and get up 63 per cent faster after falling in a one-versus-one game than the others. “These behaviours are very difficult to manually design and script,” says Lever.
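Percentage gains like these are easy to misread. As a rough guide, and assuming each figure means an increase relative to the scripted baseline (the article doesn't spell out how each was measured), the short sketch below converts them into multipliers. The function name and the dictionary are illustrative only.

```python
def improvement_ratio(percent_increase):
    """Convert 'X per cent more' into a multiplier of the baseline.

    Assumes the percentage is an increase relative to the baseline,
    so 100 per cent more means twice the baseline.
    """
    return 1.0 + percent_increase / 100.0


# Figures quoted in the article, in per cent.
reported = {"walk": 181, "turn": 302, "kick": 34, "get up": 63}

for skill, percent in reported.items():
    print(f"{skill}: about {improvement_ratio(percent):.2f} times the baseline")
```

On that reading, "181 per cent faster" means nearly three times the scripted robots' walking speed, not just under double.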

The research advances the field of robotics, says Jonathan Aitken at the University of Sheffield, UK. “One of the most significant problems dealt with in this paper is closing the sim-to-real gap,” he says. This is where skills learned in simulations don’t necessarily transfer well to real-life environments.

The Google DeepMind team's solution is a useful one, he says: train the robots on cases simulated in a physics engine, rather than having a real robot repeatedly try things and using those attempts as training data for the neural network.

But “soccer-playing robots is not the end goal”, says team member Tuomas Haarnoja.

“The aim of this work isn’t to produce humanoid robots playing in the Premier League any time soon,” says Aitken, “but rather to understand how we can build complex robot skills quickly, using synthetic training methodologies to build skills that can be rapidly, and more importantly robustly, transferred to real work applications.”
