Will society become colder if robots help the sick and elderly?

As the population ages and people live longer, there will likely be an increased need for labour in the healthcare sector.

Robots and various types of technology could help relieve workers in the health and care sector.

Eventually, it might be possible to use social robots that can interact with people, talk, or perform tasks in nursing homes or in private homes.

Will these robots be beneficial, or could they lead to problems?

What is being replaced?

There is a potential for robots to make society colder, says Henrik Skaug Sætra.

He is an associate professor at the University of Oslo’s Department of Informatics and works with philosophy and ethics related to technology.

“The question is what kind of human work we’re replacing. If we replace the caregiving aspect of human contact, it becomes much more problematic,” he says.

Sætra thinks that support robots that can help with heavy lifting or fetching things could be positive.

“But if we’re talking about social robots, there’s a possibility that it could become tempting to partially replace human contact with this type of robot,” he says.

Robots that keep you company

Experiments have been conducted with Paro, a social robot seal used in nursing homes for people with dementia.

These experiments have shown that residents enjoy the robot, according to Sætra.

“Residents actually become happier, more social, and score better on physical and mental health indicators,” he says.

“It could become tempting to allow this type of robot to partially replace human contact. A human might provide greater benefits, but they’re a much more expensive option.”

With an ageing population, an increasing number of dementia cases, and a shortage of healthcare workers, some tough choices need to be made.

“However, if we replace personal caregiving interactions, it could indeed lead to a colder society in some respects,” he says.

We must consider the alternatives

Atle Ottesen Søvik is a professor at the MF Norwegian School of Theology, Religion and Society with a focus on the philosophy of religion.

He points out that we must consider what the care alternatives are.

If the alternative is that the elderly receive little or poor care due to limited human capacity, it might be better for robots to assist.

However, he believes it is easy to get the priorities wrong. The sense of care and dignity might be lost if too much is automated.

“The importance of the care dimension should not be underestimated. Research shows that you can endure a lot if you feel valued. If you feel like a nuisance and a burden, life can feel very painful,” Søvik says.

While robots might be a cost-effective solution, Søvik argues that we should not focus solely on the numbers. Other values must also be considered, such as recognition, dignity, and community.

Robots can give the impression of having empathy

But can robots also provide a sense of care and recognition?

This is where the research results diverge, says Søvik.

“Some people want to feel empathy from another person, while others question how genuine that empathy really is. Is the person just pretending to care without actually being interested?”

In a study reported by The Guardian, researchers compared responses from doctors and ChatGPT to medical questions. ChatGPT scored higher for both empathy and the quality of the advice it provided.

Some people might be satisfied with a companion robot they can talk to.

“You might feel that there’s no judgment. I don’t feel like a burden, the robot has plenty of time,” he says.

Others find that companion robots don’t work for them.

Not yet widespread

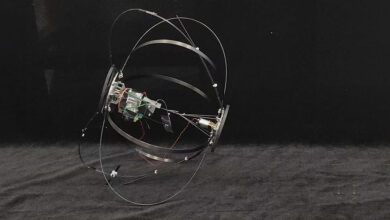

So far, social assistance robots have not become widespread. Pepper is one of the robots that have been created to provide entertainment and companionship in nursing homes.

The robot was developed in 2014. Few places have adopted it, according to The Atlantic. Production of the robot was paused in 2020 due to a lack of demand.

Pepper does not seem to have relieved caregivers. Instead, time has been spent manoeuvring it, according to the article.

Can you befriend a robot?

Let’s say it becomes possible to have a social robot at home. Could this lead to people becoming more isolated, with their social needs artificially met by a robot? Or could a relationship with a robot be good enough?

“You might immediately think that of course a robot can’t be your friend. But it’s more debatable than you might think,” says Søvik.

Humans can form relationships with robots and feel acknowledged as the robot gets to know them.

“We can feel a sense of recognition that both the robot and I are distinct individuals, who are vulnerable and can break. There are many social dimensions that are interesting to explore,” he says.

But there are differences between a relationship with a robot and a relationship with other humans. One of them is that people spend a long time getting to know each other and building a relationship. Søvik points out that a robot can ‘like’ everyone equally.

“This is where I think there’s a value in human weakness, which binds us together and enables us to experience a kind of bond that we can’t have with robots,” he says.

Another aspect is that a good friend can challenge you.

“The machine is often designed just to tell you that you’re great and might not provide the challenges and resistance you need,” he says.

Robots as slaves

Should assistance robots instead be designed in a way that prevents people from forming attachments to them too easily?

Henrik Skaug Sætra thinks this might be appropriate.

“We form attachments to all types of technology, even very simple technology. The more human-like the technology is, and the more it pretends to have emotions and human characteristics, the stronger the attachment becomes,” he says.

Replika is a service where people can create an AI companion to chat with.

“We’ve seen that quite a few people form strong bonds with AI programs like Replika. It’s designed to be a romantic partner, and people actually do fall in love with it,” he says.

If human-like robots are given the same ability to hold conversations as human beings, there is a danger that people might prefer relationships with these robots, according to Sætra.

“Some think that we should build robots that are ‘slaves’. The use of that term is controversial, but the point is interesting,” he says.

Not home alone

Some experts believe that service robots should simply do their job and bear little resemblance to humans.

“Making them as simple and dumb as possible can be good for both parties. This way, we won’t face a situation where these robots need to be considered moral beings that we’re responsible for and can’t just treat any way we want,” says Sætra.

Others believe that making robots as human-like as possible is beneficial and will make communication more efficient.

Another potentially problematic aspect of home robots is privacy, says Marija Slavkovik.

She heads the Department of Information Science and Media Studies at the University of Bergen and studies artificial intelligence and ethics.

“Humanoid robots, like the ones being planned, have a lot of sensors,” she says.

Sometimes we behave differently when other people are around. At home, we behave as if we are alone, even though we have technology like washing machines and kitchen appliances.

“If the machines have sensors controlled by other people, then we aren’t truly alone anymore even when we’re alone,” says Slavkovik.

“This isn’t necessarily problematic if the legal and social framework is well planned and the situation is well understood by everyone.”

Are robots sustainable?

Does it make sense to work towards creating advanced humanoid robots at a time when we need to reduce consumption and emissions?

If this were cheap technology that could easily be ordered online, it would be problematic, says Sætra. But that won’t happen anytime soon.

“If we manage to limit humanoid robots to sensible uses, then I think it can offset the material usage,” he says.

Sensible uses would be to assign robots to perform dangerous, dirty, and boring tasks, according to Sætra.

“If we think everyone should have this type of robot just for fun, the issue of consumption would clearly be relevant,” he says.

Sætra thinks there is currently more cause for concern about online services that are based on artificial intelligence, like ChatGPT.

These services require a lot of energy and large data centres and are often used just for fun.

———

Translated by Ingrid P. Nuse

Read the Norwegian version of this article on forskning.no