Workplace platform Slack clarifies data policy on AI after online criticism

The workplace communication platform Slack clarified how it uses customer data this month after social media users alleged it had an obscure policy.

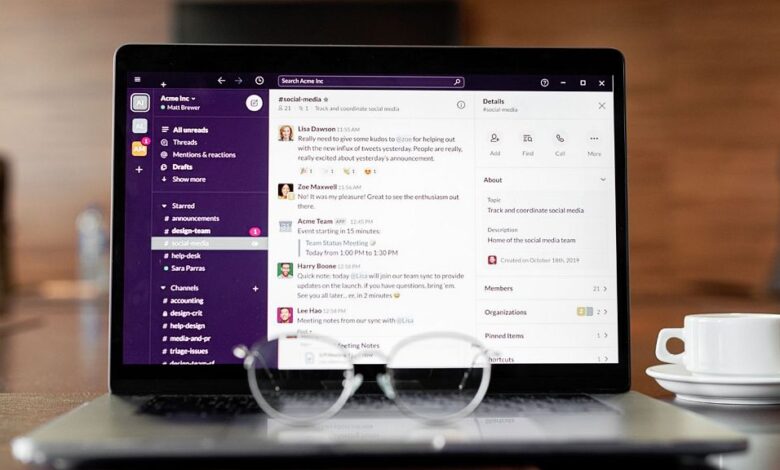

Slack is a popular cloud-based software platform owned by the US tech giant Salesforce.

Its use rose during the COVID-19 pandemic as many people began working remotely.

Criticism of Slack began this month when a user posted about his surprise at Slack’s privacy principles, which state that “systems analyse customer data (e.g. messages, content, and files) submitted to Slack as well as other information (including usage information)” to develop non-generative artificial intelligence (AI) and machine learning.

The company replied on the social media platform X (formerly known as Twitter) that the models weren’t built “in such a way that they could learn, memorise, or be able to reproduce some part of customer data”.

The company also added that the data wasn’t being used to train third-party large language models (LLMs).

Slack does, however, use machine learning for some features such as summarising information from the channels a user follows.

Slack responded to the criticism with a blog post published earlier this month.

“We recently heard from some in the Slack community that our published privacy principles weren’t clear enough and could create misunderstandings about how we use customer data in Slack,” the company said.

“We value the feedback, and as we looked at the language on our website, we realised that they were right. We could have done a better job of explaining our approach, especially regarding the differences in how data is used for traditional machine learning (ML) models and in generative AI”.

Contacted by Euronews Next, the company gave a few examples of customer data that could be used, such as the timestamp of the last message sent in a channel, the number of interactions between two users, and the number of overlapping words between channel names to indicate the relevance to a user.
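
To make those examples concrete, here is a minimal Python sketch of how such metadata signals might be computed. It is purely illustrative: the function names, the input shapes and the word-splitting rule are assumptions made for this example, not Slack's actual implementation. None of the functions reads message text.

```python
# Illustrative only: hypothetical helpers, not Slack's code.
# Each function works on metadata (names, timestamps, sender/recipient pairs),
# never on the content of messages.

def last_message_timestamp(timestamps):
    """Return the most recent timestamp in a channel, or None if it is empty."""
    return max(timestamps) if timestamps else None


def interaction_count(events, user_a, user_b):
    """Count interactions between two users, in either direction.

    `events` is an assumed list of (sender, recipient) pairs.
    """
    pair = {user_a, user_b}
    return sum(1 for sender, recipient in events if {sender, recipient} == pair)


def channel_name_overlap(name_a, name_b):
    """Count distinct words shared by two channel names.

    Assumes words in channel names are separated by '-' or '_'.
    """
    def words(name):
        return {w for w in name.lower().replace("_", "-").split("-") if w}

    return len(words(name_a) & words(name_b))
```

In this sketch, a channel called `proj-alpha-eng` would share two words with `proj-alpha-design`, which a recommendation feature could treat as a sign that the channel is relevant to someone already in the other one.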

Though the guidelines didn’t change, “the wording of the confidentiality principles has been updated” to clarify practices and policies, a spokesperson added.

Concerns about artificial intelligence and privacy

In the blog post, the company added that Slack’s “traditional ML models use de-identified, aggregate data and do not access message content in DMs, private channels or public channels”.

The company also specified that customers can opt out of having their data used by asking an IT administrator to email customer service with a specific subject line.

This opt-in-by-default approach is one of the aspects of the company’s policy that has been criticised.

While AI can automate tasks and boost efficiency, concerns are mounting over data privacy, especially when it comes to large language models.

Some tech companies, such as Google and Amazon, have reportedly warned their employees not to enter confidential company information into generative AI tools.